Llama 3.1 8B vs Llama 3.2 3B – Which Meta Model Is Better?

The Meta Llama family has become a benchmark for open-source AI. Llama 3.1 8B and Llama 3.2 3B both stand out as top choices for developers and AI enthusiasts who want basic AI tool integration, hobby projects, and AI-powered tools at low cost. Choosing the right model can make a big difference in how well it works, how much it costs, and how it scales. This comparison aims to help you figure out which model fits your needs best.

Quick Comparison Table

| Feature | Llama 3.1 8B | Llama 3.2 3B |
|---|---|---|
| Parameters | 8 Billion | 3 Billion |
| Architecture | Decoder-only Transformer | Decoder-only Transformer |
| Release Date | July 2024 | September 2024 |
| Instruction-Tuned Variant | Available | Available |
| License | Llama 3.1 Community License | Llama 3.2 Community License |
| Optimized for | Coding, Chat, Summarization | Light tasks, Mobile use |
| Training Tokens | About 15 Trillion | Up to 9 Trillion |
| Memory Requirements (approx.) | 14 GB VRAM | 6 GB VRAM |
| Benchmark Support | Yes | Partial |

Introduction to Both Models:

🔹 Llama 3.1 8B Overview:

Llama 3.1 8B strikes a good balance between power and cost. It is suitable for a wide range of tasks such as basic text generation, reasoning, summarization, and programming assistance. With significant improvements over Llama 2, it has become a favorite among developers who want high performance at an affordable cost without relying on 70B-scale models.

🔹 Llama 3.2 3B Overview:

The newer Llama 3.2 3B is designed for lightweight environments. It excels at fast inference and minimal memory usage, and is particularly useful for smaller-scale applications, low-end devices, and basic tool integrations. Meta introduced this model to meet the growing need for deployable, resource-efficient AI tools.

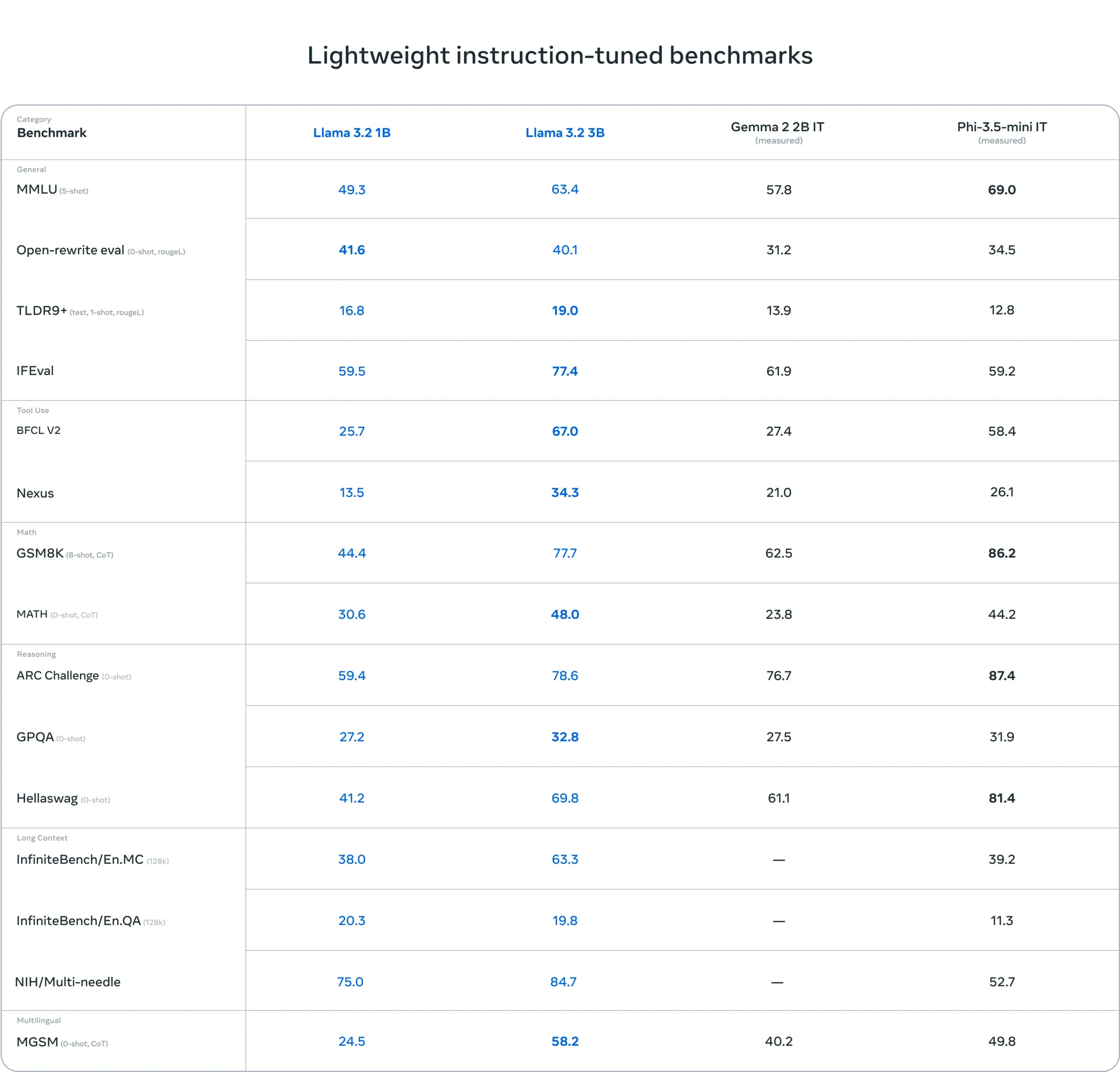

Performance Benchmarks:

Benchmarks are a cornerstone of evaluating any language model, especially when assessing real-world performance. Below are performance stats based on publicly available and community-reported results.

Note: All figures are taken from the official Meta website, where you can also check the original benchmark images for Llama 3.1 8B and Llama 3.2 3B.

🔹 Official Benchmarks (Llama 3.1 8B vs Llama 3.2 3B):

MMLU (Massive Multitask Language Understanding)

- Llama 3.1 8B: ~69.4% (5-shot; ~73% with zero-shot chain-of-thought)

- Llama 3.2 3B: ~63.4%

HumanEval (Coding Ability)

- Llama 3.1 8B: ~72.6%

- Llama 3.2 3B: N/A (not reported)

ARC-Challenge (Reasoning)

- Llama 3.1 8B: 83.4%

- Llama 3.2 3B: 78.6%

GSM8K (Math Reasoning)

- Llama 3.1 8B: 84.5%

- Llama 3.2 3B: 77.7%

Use Case Performance:

💬 Chat Applications:

Llama 3.1 8B delivers concise, to-the-point responses and maintains context effectively, making it ideal for basic to mid-level chatbot applications that require contextual memory and emotional tone. Llama 3.2 3B, while faster, tends to lose context in longer conversations, making it more suitable for FAQ bots or transactional conversations.

💻 Coding Tasks:

Llama 3.1 8B performs considerably better on programming tasks in languages like Python and JavaScript. It handles complex function calls, error handling, and multi-step logic, while Llama 3.2 3B is serviceable for basic snippets and simple logic.

📈 Summarization:

Although both models support a 128K-token context window, Llama 3.1 8B's larger capacity helps it produce more accurate and fluent summaries. Llama 3.2 3B performs well for short-form summarization or single-paragraph extraction but is less consistent on longer documents.

Efficiency & Deployment:

If you are deploying a model on limited hardware, such as a dual-core PC or another locally hosted setup, Llama 3.2 3B is a fantastic choice with low VRAM usage and fast responses. Llama 3.1 8B, while heavier, offers superior results if you can afford the resources.

| Deployment Need | Best Option |
|---|---|
| Mobile / Edge devices | Llama 3.2 3B |
| Local PC (mid-GPU) | Llama 3.2 3B |
| Workstation / Cloud | Llama 3.1 8B |
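
The memory side of this choice can be approximated with simple arithmetic: parameter count times bytes per parameter. The sketch below is a rough, weights-only estimate, not an official sizing tool. It assumes nominal parameter counts of 8 and 3 billion, ignores the KV cache and runtime overhead, and uses a hypothetical 20% headroom factor; the function and variable names are illustrative.

```python
# Weights-only memory estimate: parameters x bytes per parameter.
# Assumption: ignores KV cache, activations, and framework overhead.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}

# Nominal parameter counts (assumed round figures: 8 and 3 billion).
MODELS = {
    "Llama 3.1 8B": 8e9,
    "Llama 3.2 3B": 3e9,
}


def weight_memory_gib(num_params: float, dtype: str = "fp16") -> float:
    """Return the approximate size of the model weights in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


def models_that_fit(vram_gib: float, dtype: str = "fp16",
                    headroom: float = 1.2) -> list[str]:
    """List models whose weights, times a headroom factor, fit in vram_gib.

    The 1.2 headroom factor is a hypothetical safety margin, not a measured value.
    """
    return [
        name
        for name, params in MODELS.items()
        if weight_memory_gib(params, dtype) * headroom <= vram_gib
    ]


if __name__ == "__main__":
    for name, params in MODELS.items():
        print(f"{name}: ~{weight_memory_gib(params):.1f} GiB in FP16")
    print("Fits in 8 GiB:", models_that_fit(8))
```

Under these assumptions an 8 GiB GPU fits only Llama 3.2 3B in FP16, while passing `dtype="int4"` shows why quantized builds of the 8B model can also run on smaller cards.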

In cloud environments like AWS, Azure, or Google Cloud, Llama 3.1 8B is ideal for serving basic or mid-level applications. It scales well across multiple GPUs, supports batching, and delivers consistent results.

Community Support & Ecosystem:

Both models are available on Hugging Face and supported by the open-source community. However, due to its popularity, Llama 3.1 8B has more pre-trained variants and fine-tuning tutorials. As of mid-2025, over 3,000 repositories reference Llama 3.1 8B, compared to around 800 for Llama 3.2 3B.

This community adoption matters if you plan to build on top of existing work, experiment with fine-tuning, or look for multi-lingual support packages.
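
As a starting point for trying either model from Hugging Face, here is a minimal sketch using the `transformers` text-generation pipeline. It assumes the instruction-tuned repositories `meta-llama/Llama-3.1-8B-Instruct` and `meta-llama/Llama-3.2-3B-Instruct`, that you have accepted Meta's license for these gated repositories and logged in, and that `torch`, `transformers`, and `accelerate` are installed. The helper names `build_chat` and `generate` are illustrative, not part of any library.

```python
# Hugging Face repository IDs for the instruction-tuned checkpoints.
# Both repositories are gated: accept the license on the model page and
# authenticate before the first download.
MODEL_IDS = {
    "8b": "meta-llama/Llama-3.1-8B-Instruct",
    "3b": "meta-llama/Llama-3.2-3B-Instruct",
}


def build_chat(prompt: str,
               system: str = "You are a helpful assistant.") -> list[dict]:
    """Build a chat-style message list (system message, then user message)."""
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": prompt},
    ]


def generate(size: str, prompt: str, max_new_tokens: int = 128) -> str:
    """Load the chosen model and return the assistant's reply as text.

    Downloads the weights on first use, so expect several GB of traffic.
    """
    # Imported lazily because these are heavy, optional dependencies.
    import torch
    from transformers import pipeline

    pipe = pipeline(
        "text-generation",
        model=MODEL_IDS[size],
        torch_dtype=torch.bfloat16,
        device_map="auto",  # requires the accelerate package
    )
    outputs = pipe(build_chat(prompt), max_new_tokens=max_new_tokens)
    # With chat input, generated_text is the message list plus the new reply.
    return outputs[0]["generated_text"][-1]["content"]


if __name__ == "__main__":
    print(generate("3b", "Summarize what a decoder-only transformer is."))
```

Switching between the two models is a one-argument change (`"3b"` vs `"8b"`), which makes it easy to compare their answers on your own prompts before committing to one.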

Pros & Cons:

🔹 Llama 3.1 8B:

Pros:

- Higher performance across tasks

- Better at multitasking and reasoning

- Stronger benchmark scores

- Large developer support

Cons:

- High memory usage

- Slower inference on low-end devices

- Requires advanced deployment setup

🔹 Llama 3.2 3B:

Pros:

- Very efficient for local testing and demos

- Faster response time

- Good entry point for LLM development

- Works well on limited hardware

Cons:

- Lacks depth in logic-based tasks

- Fewer community resources

- Struggles with large context prompts

Who Should Use Which Model?

Choosing between these two depends on your goals:

- Developers: If you’re coding or building AI apps, go with Llama 3.1 8B. You can also use Llama Coder for best performance.

- Students: On a local device with limited GPU? Use Llama 3.2 3B.

- Startup Founders: Want lightweight integration in mobile/web apps? Llama 3.2 3B is perfect.

- Researchers: For serious benchmarking or fine-tuning, Llama 3.1 8B is a clear winner.

- Educators: Llama 3.2 3B works well for classroom demonstrations and student projects.

Final Verdict:

If you want raw power, better benchmarks, and support for complex reasoning or coding tasks, Llama 3.1 8B is the clear winner. It is better suited for research-grade experiments and production-level deployment.

However, if you’re limited by hardware or building for fast inference and smaller tasks, Llama 3.2 3B is an excellent choice.
