GPT‑4.5 vs Claude 3.7 Sonnet: A deep dive into coding, reasoning, pricing, and real-world performance to determine the best AI chatbot for developers, businesses, and researchers.

Introduction

The AI chatbot wars are heating up, with OpenAI’s GPT‑4.5 and Anthropic’s Claude 3.7 Sonnet battling for dominance. Both models claim superior reasoning, coding, and conversational abilities—but which one truly reigns supreme?

This comparison breaks down:

✔ Architecture & key innovations

✔ Benchmarks (coding, math, reasoning)

✔ Real-world coding tests (Masonry Grid, Typing Speed, WebSockets)

✔ Pricing & accessibility

✔ Developer & community opinions

Who should read this? AI engineers, startup founders, and businesses choosing between these models for chatbots, coding, or enterprise automation.

Quick Comparison Table of GPT‑4.5 vs Claude 3.7 Sonnet

| Feature | GPT‑4.5 (OpenAI) | Claude 3.7 Sonnet (Anthropic) |

|---|---|---|

| Release Date | February 2025 | February 2025 |

| Context Window | 128K tokens | 200K tokens |

| Key Strength | Natural conversation, writing | Coding, reasoning, debugging |

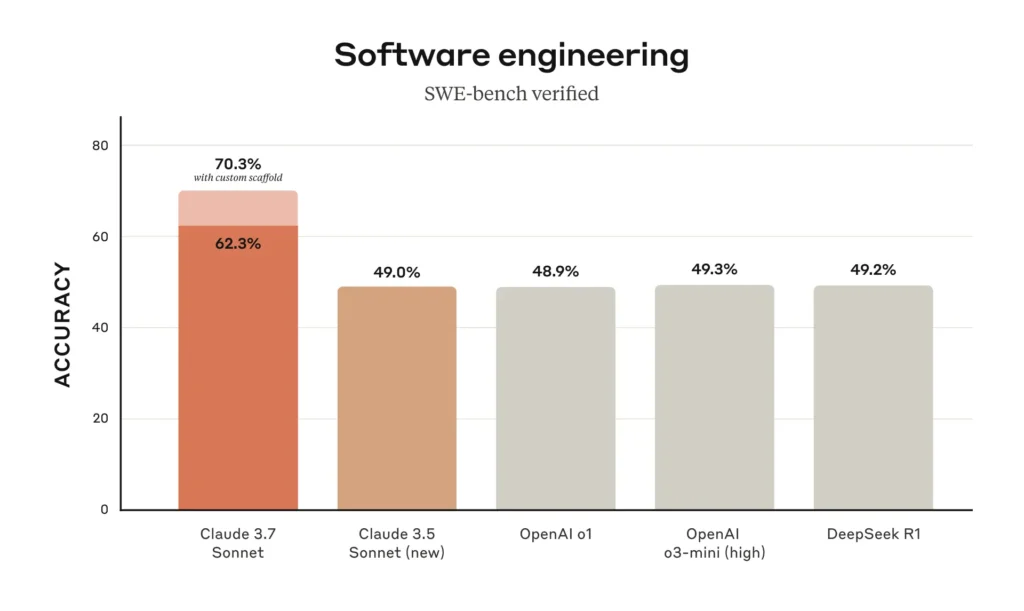

| Coding Benchmark (SWE-Bench) | 38% 1 | 70.3% 6 |

| Pricing (Input/Output per M tokens) | $75/$150 8 | $3/$15 9 |

| Best For | Creative writing, customer support | Complex coding, long-context analysis |

Model Overviews

1. GPT‑4.5 – OpenAI’s Conversational Powerhouse

- Focus: Enhanced chat fluency, reduced hallucinations, and emotional intelligence 14.

- Architecture: Likely a dense transformer (not MoE), optimized for unsupervised learning.

- Strengths:

- More human-like dialogue (better EQ, tone control) 11.

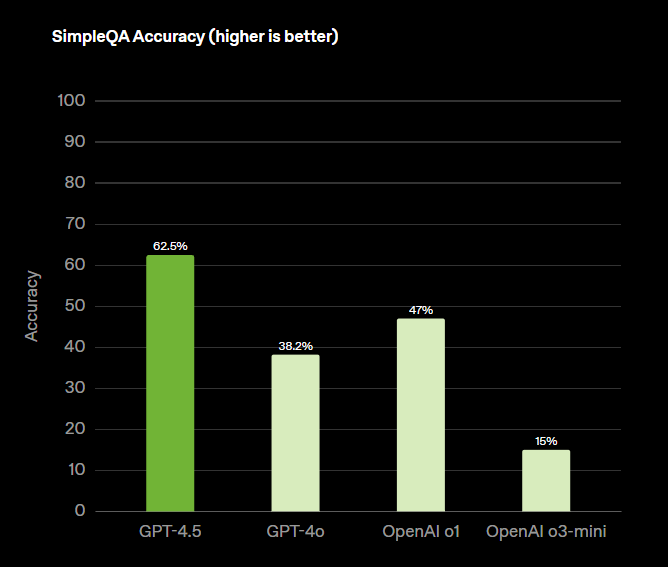

- Lower hallucination rates (37.1% vs GPT-4o’s 61.8% on OpenAI’s SimpleQA benchmark) 8.

- 128K context with text and image inputs, but no voice or video support 12.

2. Claude 3.7 Sonnet – The Coding & Reasoning Beast

- Focus: Hybrid reasoning (instant + step-by-step thinking), coding excellence, and long-context retention 13.

- Architecture: Extended thinking mode (visible chain-of-thought) for complex tasks 11.

- Strengths:

- 200K context (ideal for large docs, codebases) 9.

- State-of-the-art coding (70.3% on SWE-Bench Verified, a score Anthropic reports with a custom scaffold) 6.

- Claude Code CLI for terminal-based AI pair programming 3.

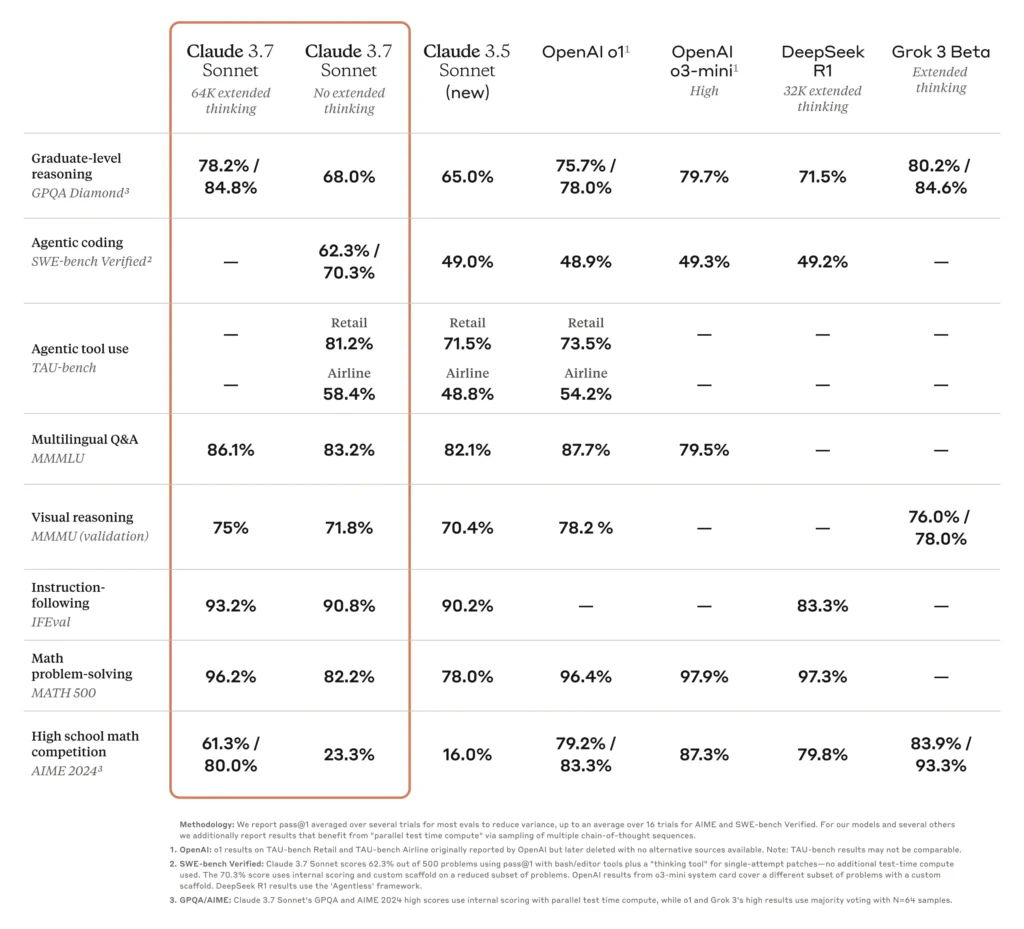

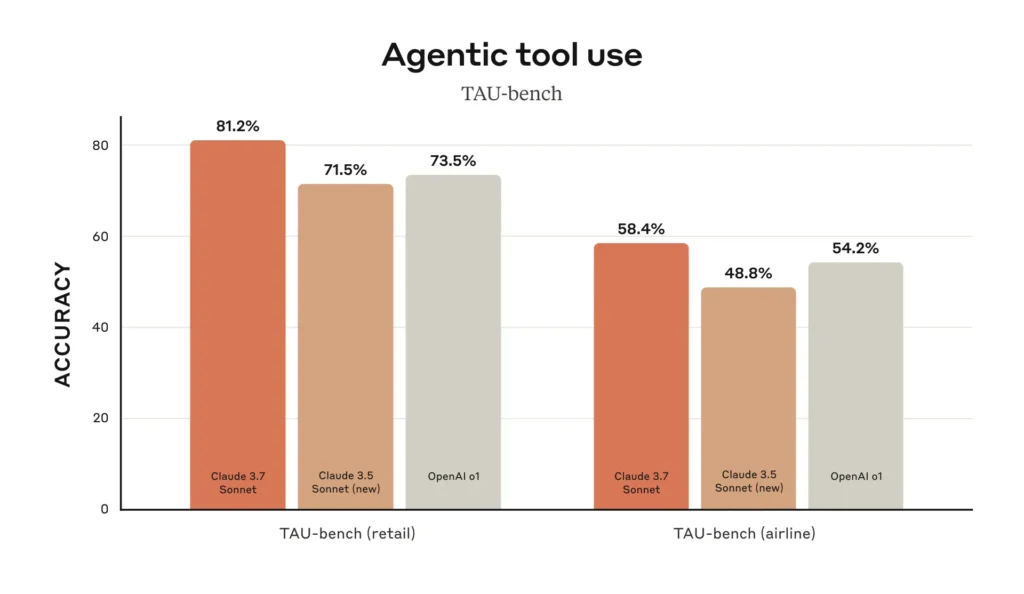

Benchmark Performance: GPT‑4.5 vs Claude 3.7 Sonnet

1. Coding (SWE-Bench Verified)

| Model | Score |
|---|---|
| GPT‑4.5 | 38% |
| Claude 3.7 Sonnet | 70.3% |

✅ Claude dominates, nearly doubling GPT‑4.5’s performance 6, 8.

2. Math (AIME ’24 Problems)

| Model | Score |
|---|---|
| GPT‑4.5 | 36.7% |
| Claude 3.7 Sonnet | 49% |

✅ Claude leads, but Grok 3 (93.3%) outperforms both 3.

3. General Knowledge (MMLU)

| Model | Score |
|---|---|
| GPT‑4.5 | ~90% |
| Claude 3.7 Sonnet | 80% |

✅ GPT‑4.5 wins in broad knowledge, but Claude excels in structured reasoning 9.

Real-World Coding Tests of GPT‑4.5 vs Claude 3.7 Sonnet

1. Masonry Grid Image Gallery (Next.js)

- Claude 3.7:

- Generated perfect Masonry layout using @tanstack/react-query.

- Only flaw: Footer alignment issue 8.

- GPT‑4.5:

- Missing Masonry grid, used DIY infinite scroll 8.

- No npm dependencies (simpler but less optimized) 2.

Verdict: Claude’s output was near-production-ready, while GPT‑4.5’s was basic 8.
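For readers unfamiliar with what this test asks for, a masonry layout typically places each image into whichever column is currently shortest. The sketch below illustrates that placement step only; it is not either model's output. `GalleryImage` and `distributeIntoColumns` are hypothetical names, and the code assumes image heights are known before layout.

```typescript
// Hypothetical image record; heights are assumed to be known before layout.
interface GalleryImage {
  id: string;
  height: number; // rendered height in pixels
}

// Greedy masonry placement: each image goes into the currently shortest column.
function distributeIntoColumns(
  images: GalleryImage[],
  columnCount: number
): GalleryImage[][] {
  if (!Number.isInteger(columnCount) || columnCount < 1) {
    throw new RangeError("columnCount must be a positive integer");
  }
  const columns: GalleryImage[][] = Array.from({ length: columnCount }, () => []);
  const heights: number[] = new Array<number>(columnCount).fill(0);

  for (const image of images) {
    // Find the shortest column so far (ties go to the leftmost column).
    let shortest = 0;
    for (let i = 1; i < columnCount; i++) {
      if (heights[i] < heights[shortest]) shortest = i;
    }
    columns[shortest].push(image);
    heights[shortest] += image.height;
  }
  return columns;
}
```

In a Next.js component, each returned column could be rendered as its own vertical flex container, which keeps the layout logic independent of any data-fetching library.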

2. Typing Speed Test (JavaScript)

- Claude 3.7:

- Auto-detected completion, added accuracy metrics (beyond requirements) 8.

- GPT‑4.5:

- Minor bug: Didn’t auto-end test on completion 8.

Verdict: Both worked, but Claude added extra polish.
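The two behaviors that separated the models here, auto-ending the test and reporting accuracy, come down to a few lines of scoring logic. The following is a minimal sketch under stated assumptions, not code from either model: `scoreTypingTest` and `TypingResult` are hypothetical names, and it uses the common convention that one "word" equals five characters.

```typescript
// Hypothetical result shape for one scoring pass.
interface TypingResult {
  wordsPerMinute: number;
  accuracy: number; // fraction between 0 and 1
  finished: boolean;
}

function scoreTypingTest(
  target: string,
  typed: string,
  elapsedMs: number
): TypingResult {
  // Count characters that match the target at the same position.
  let correct = 0;
  const compared = Math.min(typed.length, target.length);
  for (let i = 0; i < compared; i++) {
    if (typed[i] === target[i]) correct++;
  }

  const minutes = elapsedMs / 60000;
  // Assumed convention: one "word" is five correctly typed characters.
  const wordsPerMinute = minutes > 0 ? correct / 5 / minutes : 0;
  const accuracy = typed.length > 0 ? correct / typed.length : 0;

  // Auto-end condition: the user has typed as many characters as the target has.
  const finished = typed.length >= target.length;

  return { wordsPerMinute, accuracy, finished };
}
```

Calling this on every input event and stopping the timer once `finished` is true would cover the auto-end behavior that GPT‑4.5's version reportedly missed.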

3. Real-Time Collaborative Whiteboard (WebSockets)

- Claude 3.7:

- Fully functional, handled multi-user sync flawlessly 8.

- GPT‑4.5:

- Failed at parsing WebSocket data 8.

Verdict: Claude crushed complex real-time tasks; GPT‑4.5 struggled 8.
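The article does not show the failing code, but WebSocket parsing bugs often come from trusting the incoming payload. One defensive approach is to validate every message before applying it to the canvas. The sketch below assumes a hypothetical JSON wire format (`StrokeMessage`) invented for illustration; `parseStrokeMessage` and `isPoint` are likewise hypothetical names.

```typescript
// Hypothetical wire format for one drawing stroke; not taken from the tests above.
interface StrokeMessage {
  type: "stroke";
  userId: string;
  points: Array<{ x: number; y: number }>;
}

// Type guard for a single point on the canvas.
function isPoint(value: unknown): value is { x: number; y: number } {
  if (typeof value !== "object" || value === null) return false;
  const p = value as Record<string, unknown>;
  return typeof p.x === "number" && typeof p.y === "number";
}

// Returns a validated message, or null when the payload is malformed.
function parseStrokeMessage(raw: string): StrokeMessage | null {
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return null; // not valid JSON
  }
  if (typeof data !== "object" || data === null) return null;

  const { type, userId, points } = data as Record<string, unknown>;
  if (type !== "stroke" || typeof userId !== "string") return null;
  if (!Array.isArray(points) || !points.every(isPoint)) return null;

  return { type: "stroke", userId, points };
}
```

In a browser client this would typically be called from the socket's `onmessage` handler with `event.data`, ignoring any message for which it returns `null`.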

Pricing & Accessibility: GPT‑4.5 vs Claude 3.7 Sonnet

| Model | Input Cost (per M tokens) | Output Cost (per M tokens) | Availability |
|---|---|---|---|
| GPT‑4.5 | $75 | $150 | ChatGPT Pro ($200/month) 8 |
| Claude 3.7 Sonnet | $3 | $15 | Free tier + Pro ($18/month) 9 |

✅ Claude is 25x cheaper for inputs, 10x cheaper for outputs—making it far more cost-effective for coding & high-volume use 9.
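To see how those ratios play out on a mixed workload, here is a small cost estimator using the per-million-token prices from the table above. It is a rough sketch: the keys in `PRICING` are illustrative labels rather than official API model IDs, and `estimateCostUsd` is a hypothetical helper that ignores caching, batching discounts, and reasoning-token overhead.

```typescript
// Per-million-token prices in USD, copied from the pricing table above.
interface ModelPricing {
  inputPerMillion: number;
  outputPerMillion: number;
}

// Keys are illustrative labels, not official API model identifiers.
const PRICING: Record<"gpt-4.5" | "claude-3.7-sonnet", ModelPricing> = {
  "gpt-4.5": { inputPerMillion: 75, outputPerMillion: 150 },
  "claude-3.7-sonnet": { inputPerMillion: 3, outputPerMillion: 15 },
};

// Estimated cost in USD for a given number of input and output tokens.
function estimateCostUsd(
  pricing: ModelPricing,
  inputTokens: number,
  outputTokens: number
): number {
  const total =
    inputTokens * pricing.inputPerMillion +
    outputTokens * pricing.outputPerMillion;
  return total / 1000000;
}
```

For example, a workload of 2M input tokens and 500K output tokens comes to $225 on GPT‑4.5 and $13.50 on Claude 3.7 Sonnet, roughly a 16.7x gap. The blended ratio always lands between 10x and 25x, depending on the input/output mix.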

Developer & Community Opinions: GPT‑4.5 vs Claude 3.7 Sonnet

- Reddit/r/MachineLearning:

- “Claude 3.7 is like having a senior dev on call. GPT‑4.5 is great for docs, but I wouldn’t trust it for complex code.” 6

- “GPT‑4.5’s writing is eerily human, but Claude’s reasoning is next-level.” 11

- Hugging Face:

- Developers praise Claude Code for terminal integration 3.

- GPT‑4.5 favored for creative writing & marketing 14.

Final Verdict: Who is the Chatbot King?

Pick GPT‑4.5 If You Need:

✔ Human-like chat (customer support, creative writing).

✔ Lower hallucinations (factual accuracy matters).

✔ OpenAI ecosystem (custom GPTs, API integrations).

Pick Claude 3.7 Sonnet If You Need:

✔ Elite coding & debugging (SWE-Bench 70.3%).

✔ Long-context analysis (200K tokens > GPT’s 128K).

✔ Cost efficiency (25x cheaper inputs).

For most technical users, Claude 3.7 Sonnet is the clear winner, especially for coding, reasoning, and large-scale projects. GPT‑4.5 shines in conversational AI but falls short in complex problem-solving 6, 8, 11.

❓ FAQ

1. Can GPT‑4.5 process images?

✅ Yes, for image inputs. GPT‑4.5 accepts images and file uploads but does not support voice or video; Claude 3.7 also supports image inputs 11.

2. Is Claude 3.7 free to use?

✅ Yes, with rate limits. Pro ($18/month) unlocks higher limits & priority access 9.

3. Which model is better for startups?

💰 Claude 3.7—its lower cost and coding prowess make it ideal for bootstrapped teams 9.

4. Does GPT‑4.5 support function calling?

✅ Yes, but Claude’s extended thinking mode is better for multi-step workflows 13.

🔗 Explore More LLM Comparisons on app.rankllms.com

Final Thought: The “best” model depends on your use case. For coding & reasoning, Claude 3.7 Sonnet is the stronger pick of the two. For writing & chat, GPT‑4.5 leads. Choose wisely! 🚀

Sources:

- Anthropic’s Claude 3.7 Paper

- OpenAI’s GPT-4.5 Technical Report

- Stanford CRFM Benchmarks

Note: All benchmarks & pricing reflect July 2025 data.