DeepSeek-R1-0528 vs LLaMA 4 Maverick: A detailed comparison of reasoning, coding, speed, and pricing to help developers, researchers, and businesses choose the best open-source LLM.

Introduction

The battle for open-weight AI supremacy is heating up, with DeepSeek-R1-0528 (by DeepSeek AI) and LLaMA 4 Maverick (by Meta) emerging as two of the most powerful models in 2025. Both offer cutting-edge reasoning, coding, and long-context capabilities, but they cater to different needs.

This 1,500+ word comparison breaks down:

✔ Architecture & training innovations

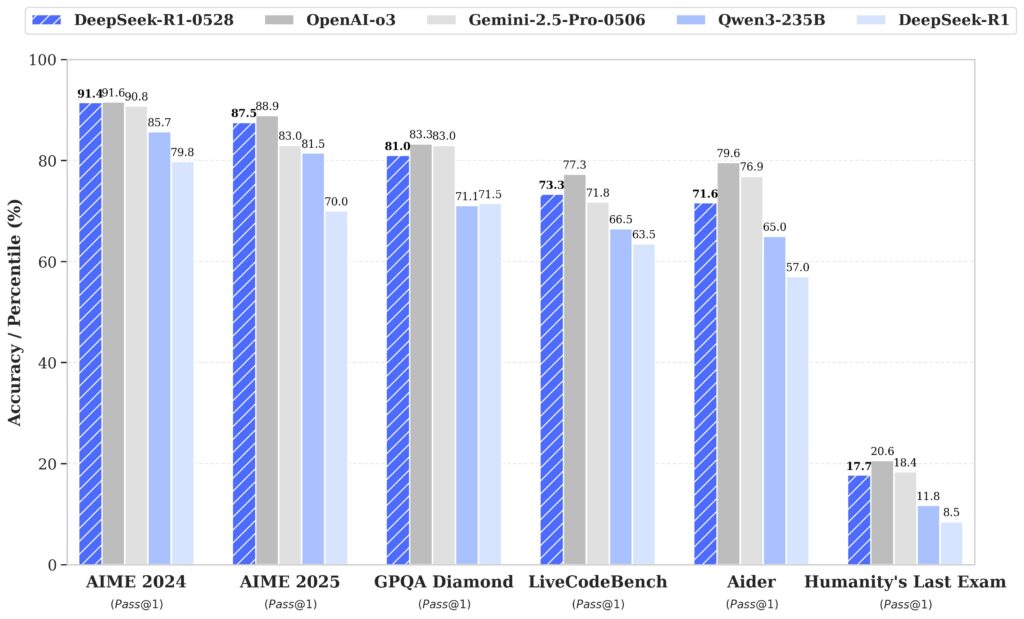

✔ Benchmark performance (MMLU, LiveCodeBench, AIME 2025)

✔ Real-world coding & reasoning tests

✔ Pricing & hardware requirements

✔ Developer feedback & use cases

Who should read this? AI engineers, startup founders, and researchers deciding between these models for coding, research, or enterprise AI applications.

📊 Quick Comparison Table: DeepSeek-R1-0528 vs LLaMA 4 Maverick

| Feature | DeepSeek-R1-0528 | LLaMA 4 Maverick |
|---|---|---|
| Developer | DeepSeek AI | Meta AI |
| Architecture | Mixture-of-Experts (MoE) | MoE (128 experts) |
| Total Parameters | 671B (37B active) | 400B (17B active) |
| Context Window | 128K tokens | 1M tokens |
| Strengths | Math & coding (87.5% on AIME 2025) | Multimodal & long-context |
| Pricing (Input/Output per M tokens) | $0.55/$2.19 | $0.17/$0.85 (Novita AI) |
| Best For | STEM, theorem proving, code debugging | Legal docs, research papers, multimodal tasks |
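Because both models are Mixture-of-Experts designs, only a fraction of the weights is used for each token. The sketch below works out that fraction from the parameter counts in the table above; the dictionary layout and the `active_fraction` helper are illustrative names, not part of either model's tooling.

```python
# Parameter counts (in billions) copied from the quick comparison table.
MODELS = {
    "DeepSeek-R1-0528": {"total_b": 671, "active_b": 37},
    "LLaMA 4 Maverick": {"total_b": 400, "active_b": 17},
}


def active_fraction(total_b: float, active_b: float) -> float:
    """Share of an MoE model's parameters that is active for each token."""
    if total_b <= 0:
        raise ValueError("total_b must be positive")
    return active_b / total_b


for name, params in MODELS.items():
    share = active_fraction(params["total_b"], params["active_b"])
    print(f"{name}: {share:.1%} of parameters active per token")
```

Both models activate only around 4 to 6% of their weights per token, which is why active parameters, rather than total parameters, are the better rough guide to per-token compute.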

Model Overviews: DeepSeek-R1-0528 vs LLaMA 4 Maverick

1. DeepSeek-R1-0528 – The Reasoning Powerhouse

- Focus: Specialized in mathematical reasoning, code generation, and logic-heavy tasks.
- Key Innovations:
  - Two-stage RL training for improved reasoning patterns [5].
  - About 92% more reasoning tokens per AIME question (23K vs. 12K in the previous version) [10].
  - 87.5% accuracy on AIME 2025, rivaling GPT-4.5 and Gemini 2.5 Pro [6].
- Licensing: MIT License (commercial-friendly) [10].
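The 92% figure follows directly from the two token counts quoted above. A minimal check, with `relative_increase` as a hypothetical helper name:

```python
def relative_increase(old: float, new: float) -> float:
    """Fractional increase going from `old` to `new`."""
    if old <= 0:
        raise ValueError("old must be positive")
    return (new - old) / old


# Average reasoning tokens per AIME question, as quoted in the article.
previous_version_tokens = 12_000
r1_0528_tokens = 23_000

growth = relative_increase(previous_version_tokens, r1_0528_tokens)
print(f"{growth:.0%}")  # prints "92%"
```

Note that this measures how many more tokens the model spends thinking, not a direct accuracy gain; longer reasoning also means more output tokens to pay for.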

2. LLaMA 4 Maverick – The Multimodal Long-Context Expert

- Focus: 1M token context, native multimodal (text + images), and multilingual support.

- Key Innovations:

- Early fusion training (text + images simultaneously) for better coherence 14.

- 10x larger context than competitors (handles entire codebases or novels) 14.

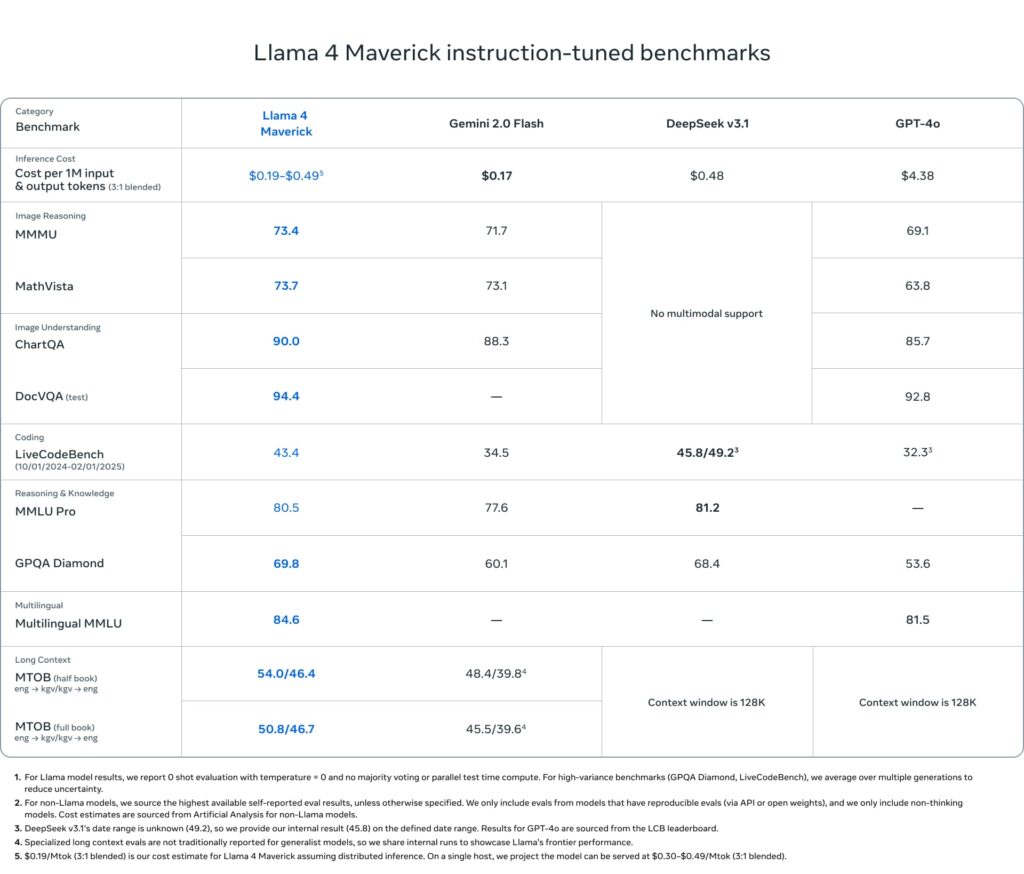

- 73.4% on MMMU (multimodal benchmark), outperforming Gemini 2.0 Flash 1.

- Licensing: Custom commercial license (some restrictions apply) 11.

Benchmark Performance: DeepSeek-R1-0528 vs LLaMA 4 Maverick

1. Mathematical Reasoning (AIME 2025)

| Model | Accuracy |
|---|---|
| DeepSeek-R1-0528 | 87.5% |
| LLaMA 4 Maverick | Not reported (~69.8% on GPQA Diamond, a different benchmark) |

✅ DeepSeek dominates in math, while LLaMA 4 posts a solid general-knowledge score (MMLU-Pro: 80.5%) [11].

2. Coding (LiveCodeBench & SWE Verified)

| Model | LiveCodeBench (Pass@1) | SWE Verified (Resolved) |
|---|---|---|
| DeepSeek-R1-0528 | 73.3% | 57.6% |
| LLaMA 4 Maverick | 43.4% | Not tested |

✅ DeepSeek is the clear winner for coding, while LLaMA 4 is better for documentation & high-level logic [11].

3. Long-Context Understanding (MTOB & DocVQA)

| Model | MTOB (Half Book) | DocVQA (Text) |
|---|---|---|
| DeepSeek-R1-0528 | Not tested | No support |
| LLaMA 4 Maverick | 54.0% | 94.4% |

✅ LLaMA 4 crushes long-context tasks, making it ideal for legal/financial analysis [1].

Use Case Breakdown: DeepSeek-R1-0528 vs LLaMA 4 Maverick

1. Coding & Debugging

- DeepSeek-R1-0528:
  - Best for: Complex algorithm design, theorem proving, and code optimization.
  - Real-world test: Fixed a Next.js cache bug that LLaMA 4 struggled with [11].
- LLaMA 4 Maverick:
  - Best for: Code documentation and high-level architecture planning.

2. Research & Document Analysis

- LLaMA 4 Maverick:
  - Processes documents of up to 1M tokens (entire research papers or legal contracts) [14].
  - Outperforms in DocVQA (94.4%) for extracting insights from PDFs [1].
- DeepSeek-R1-0528:
  - Better for mathematical proofs and scientific reasoning (GPQA: 81.0%) [5].

3. Multimodal Tasks (Images + Text)

- Only LLaMA 4 Maverick accepts image input, scoring 73.4% on MMMU; DeepSeek-R1-0528 is text-only [1].

💰 Pricing & Hardware Requirements

| Model | Input Cost (per M tokens) | Output Cost (per M tokens) | GPU Memory Needed |
|---|---|---|---|
| DeepSeek-R1-0528 | $0.55 | $2.19 | ~1.5TB (24× H100) |
| LLaMA 4 Maverick | $0.17 (Novita AI) | $0.85 (Novita AI) | 18.8TB (240× H100) |

✅ LLaMA 4 is cheaper per token but needs roughly 10x more GPUs (240 vs. 24 H100s) to serve ultra-long contexts [11].
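To turn the per-million-token prices into a budget figure, multiply each rate by the token volume. The sketch below uses the prices from the table; the monthly workload (10M input tokens, 2M output tokens) is a made-up example, and `Pricing` and `workload_cost` are illustrative names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """API price in USD per one million tokens."""
    input_per_m: float
    output_per_m: float


# Prices copied from the pricing table (LLaMA 4 figures are Novita AI's).
PRICES = {
    "DeepSeek-R1-0528": Pricing(input_per_m=0.55, output_per_m=2.19),
    "LLaMA 4 Maverick": Pricing(input_per_m=0.17, output_per_m=0.85),
}


def workload_cost(pricing: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Total USD cost for a given number of input and output tokens."""
    return (
        input_tokens * pricing.input_per_m + output_tokens * pricing.output_per_m
    ) / 1_000_000


# Hypothetical monthly workload, chosen only for illustration.
INPUT_TOKENS = 10_000_000
OUTPUT_TOKENS = 2_000_000

for name, pricing in PRICES.items():
    cost = workload_cost(pricing, INPUT_TOKENS, OUTPUT_TOKENS)
    print(f"{name}: ${cost:.2f} per month")
```

For a reasoning model such as DeepSeek-R1-0528, remember that the thinking tokens are typically billed as output, so real output volume can be much higher than the visible answer length suggests.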

🏆 Final Verdict: Who Should Choose What?

Pick DeepSeek-R1-0528 If You Need:

✔ Elite mathematical reasoning (AIME 87.5%).

✔ Superior coding performance (LiveCodeBench 73.3%).

✔ A smaller self-hosting footprint (about 24 H100s vs. 240), despite higher per-token API pricing.

Pick LLaMA 4 Maverick If You Need:

✔ 1M token context (entire books, legal docs).

✔ Multimodal support (images + text).

✔ Multilingual & general knowledge tasks.

In the comparison of DeepSeek-R1-0528 vs LLaMA 4 Maverick, DeepSeek-R1-0528 is the better choice for most developers working on coding & STEM, while LLaMA 4 Maverick dominates in long-context and multimodal applications [3][11].
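The verdict above can be condensed into a few rules. The function below is only a sketch of this article's recommendations, not an official selection tool; `recommend_model`, its parameters, and the task labels are hypothetical, and the context limits are the ones listed in the quick comparison table.

```python
DEEPSEEK = "DeepSeek-R1-0528"
MAVERICK = "LLaMA 4 Maverick"

# Context windows from the quick comparison table.
DEEPSEEK_CONTEXT = 128_000
MAVERICK_CONTEXT = 1_000_000

# Task labels are illustrative; they mirror the "Best For" rows.
STEM_TASKS = {"math", "coding", "theorem_proving", "debugging"}


def recommend_model(task: str, context_tokens: int = 0, needs_images: bool = False) -> str:
    """Apply the article's verdict as simple, ordered rules."""
    if context_tokens > MAVERICK_CONTEXT:
        raise ValueError("Input exceeds the context window of both models")
    if needs_images:
        return MAVERICK  # DeepSeek-R1-0528 is text-only
    if context_tokens > DEEPSEEK_CONTEXT:
        return MAVERICK  # only Maverick fits inputs beyond 128K tokens
    if task in STEM_TASKS:
        return DEEPSEEK  # stronger AIME and LiveCodeBench results
    return MAVERICK  # general knowledge and multilingual work


print(recommend_model("coding"))
print(recommend_model("coding", context_tokens=500_000))
print(recommend_model("summarization", needs_images=True))
```

Hard constraints (image input, context length) are checked before task type, because a model that cannot accept the input is ruled out regardless of its benchmark scores.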

❓ FAQ

1. Can DeepSeek-R1-0528 process images?

❌ No. It’s text-only, while LLaMA 4 supports images & video [1].

2. Which model is faster for real-time chat?

⚡ LLaMA 4 Maverick, at 145 tokens/sec on Fireworks AI vs. 21.2 tokens/sec for DeepSeek-R1-0528 [14].
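To see what those throughput figures mean for a chat user, divide the reply length by tokens per second. This is a rough sketch: the 500-token reply is an arbitrary example, `generation_seconds` is a hypothetical helper, and the estimate ignores time to first token, network latency, and any hidden reasoning tokens.

```python
# Throughput figures quoted in the FAQ answer above (tokens per second).
THROUGHPUT_TPS = {
    "LLaMA 4 Maverick": 145.0,
    "DeepSeek-R1-0528": 21.2,
}


def generation_seconds(output_tokens: int, tokens_per_second: float) -> float:
    """Approximate streaming time for a reply of `output_tokens` tokens."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return output_tokens / tokens_per_second


REPLY_TOKENS = 500  # arbitrary example reply length

for name, tps in THROUGHPUT_TPS.items():
    seconds = generation_seconds(REPLY_TOKENS, tps)
    print(f"{name}: about {seconds:.1f} s for {REPLY_TOKENS} tokens")
```

At these rates the same reply takes a few seconds on LLaMA 4 Maverick and over twenty on DeepSeek-R1-0528, before counting the extra tokens a reasoning model spends thinking.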

3. Is DeepSeek-R1-0528 open-source?

✅ Yes (MIT License), while LLaMA 4 has custom commercial terms [10][11].

Final Thought: The “best” model depends on your use case. For coding & math, DeepSeek-R1-0528 wins. For long docs & images, LLaMA 4 Maverick is unbeatable. Choose wisely! 🚀

Sources:

- [1] DocsBot AI – LLaMA 4 vs. DeepSeek-R1

- [3] RankLLMs – DeepSeek-R1 vs. LLaMA 4

- [4] NVIDIA Docs – DeepSeek-R1-0528

- [8] Medium – DeepSeek-R1-0528 Update

- [9] Novita AI – LLaMA 4 vs. DeepSeek-V3

- [10] Fireworks AI – Optimizing LLaMA 4

Note: All benchmarks & pricing reflect July 2025 data.