DeepSeek V3 vs GPT-4o Mini: Which AI is right for you? Compare speed, accuracy, and cost-effectiveness in this head-to-head battle.

The AI landscape in 2025 has evolved dramatically, with budget-conscious developers and businesses seeking powerful yet affordable AI solutions. Two models have emerged as frontrunners in the cost-effective AI space: DeepSeek V3 and GPT-4o Mini. This comparison examines them across reasoning capabilities, coding performance, and cost-effectiveness to help developers and businesses make informed decisions.

DeepSeek V3 represents a breakthrough in open-source AI development, offering enterprise-grade performance at a fraction of traditional costs. Meanwhile, GPT-4o Mini continues OpenAI’s tradition of providing accessible yet powerful AI solutions. Both models target the same market segment but approach it with fundamentally different philosophies and architectures.

The stakes are high in this budget AI showdown. Organizations are increasingly looking for AI models that deliver exceptional value without compromising on quality. This analysis provides the data-driven insights needed to navigate this critical decision, examining real-world performance metrics, implementation considerations, and total cost of ownership for both models.

Understanding DeepSeek V3: The Open-Source Powerhouse

Architecture and Technical Specifications

DeepSeek V3 is a heavyweight at 671B total parameters, built on a Mixture-of-Experts (MoE) architecture that activates only about 37B of them for each token. Because each token is processed by a small subset of experts, the model delivers strong performance at a fraction of the per-token compute of a dense model of the same size. Its architecture represents a significant advancement in open-source AI development, challenging the dominance of proprietary solutions.
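To make "active parameters" concrete, here is a minimal sketch of generic top-k expert routing in pure Python. It is not DeepSeek V3's actual router (the technical report describes its own gating and load-balancing scheme); the toy scalar experts, the logits, and k=2 are illustrative assumptions.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and renormalise their gate weights to sum to 1."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))

def moe_forward(x, experts, router_logits, k):
    """Weighted sum of the selected experts' outputs; unselected experts are never run."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))

# Toy setup (illustrative only): 8 scalar "experts", 2 active per token.
experts = [lambda x, s=s: s * x for s in range(8)]
logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]
print(top_k_route(logits, k=2))  # selects experts 4 and 1
print(moe_forward(1.0, experts, logits, k=2))
print(f"DeepSeek V3 active share per token: {37 / 671:.1%}")
```

Only the chosen experts' functions are called, which is why per-token compute tracks the roughly 37B active parameters (about 5.5% of the total) rather than all 671B.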

The DeepSeek-V3 technical report puts the full training run at about 2.8M H800 GPU-hours, roughly one-eleventh of the 30.8M GPU-hours reported for Llama 3 405B (trained on H100 GPUs, so the comparison is approximate). This efficiency reflects the model's optimized architecture and training methodology, producing a frontier-grade LLM on a remarkably modest budget.

Performance Characteristics

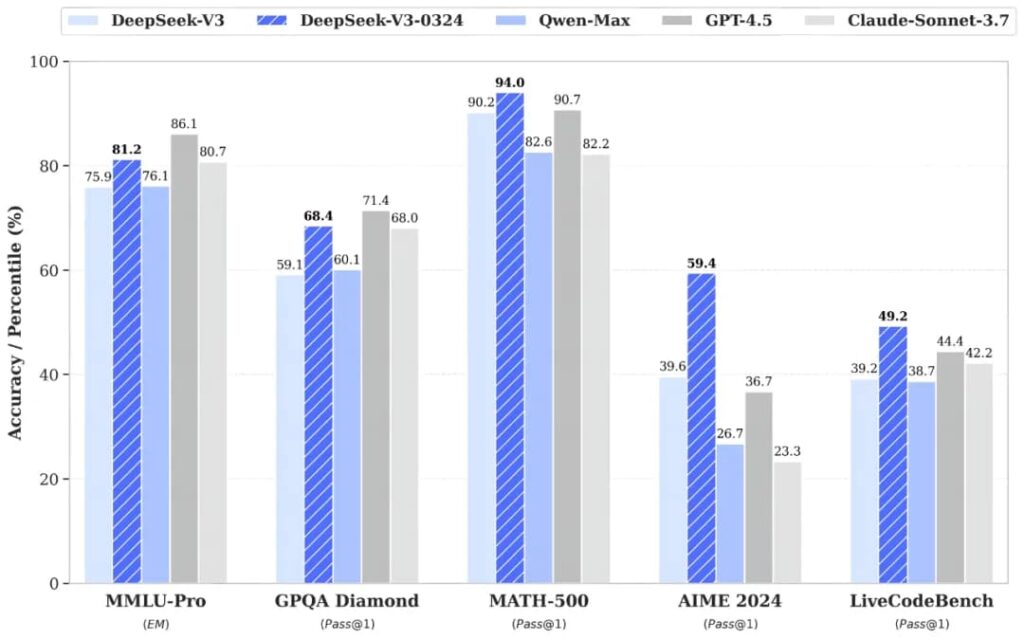

According to the DeepSeek-V3 technical report, the model scores 88.5 on MMLU, 75.9 on MMLU-Pro, and 59.1 on GPQA-Diamond. These knowledge and reasoning scores put DeepSeek V3 in direct competition with premium alternatives while keeping its open-source accessibility.

The model performs well across all context window lengths up to 128K tokens, demonstrating robust handling of long-context scenarios. This capability is particularly valuable for developers working with extensive codebases, comprehensive documentation, or complex reasoning tasks requiring substantial context.

Open-Source Advantages

DeepSeek V3 is highly performant in tasks like coding and math, comparing favorably to GPT-4o and Llama 3.1 405B, while being open-source and commercially usable. This combination of performance and accessibility makes it an attractive option for organizations seeking to avoid vendor lock-in while maintaining cutting-edge AI capabilities.

GPT-4o Mini: OpenAI’s Efficient Solution

Model Design and Philosophy

GPT-4o Mini represents OpenAI’s approach to democratizing AI access through cost-effective solutions. The model is priced at 15 cents per 1M input tokens and 60 cents per 1M output tokens, making it significantly more affordable than its full-scale counterparts while maintaining substantial capabilities.

GPT-4o Mini is designed for affordability and efficiency, positioning itself as a competitive alternative to models like Llama 3 and Gemma 2. This strategic positioning targets the growing market of developers and businesses seeking AI capabilities without enterprise-level investment.

Performance Profile

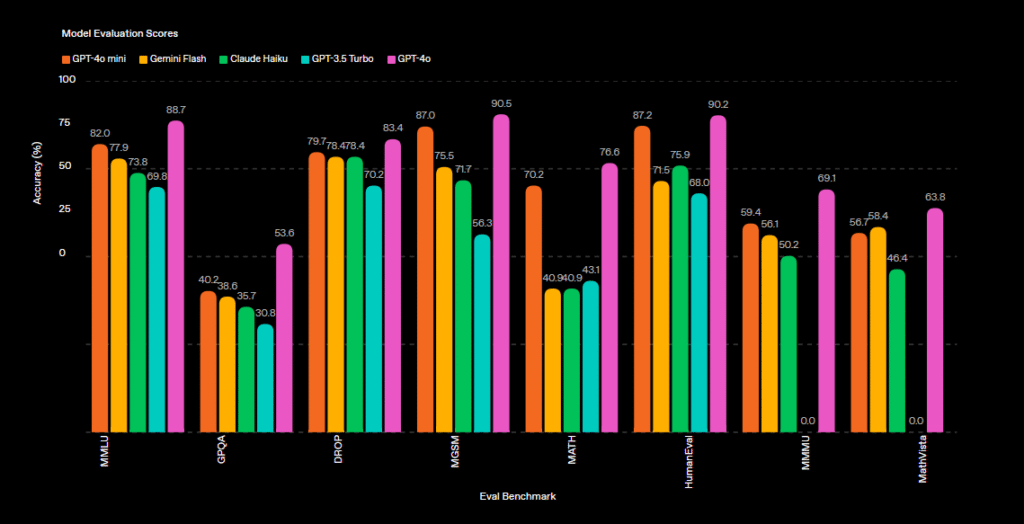

Independent analysis places GPT-4o Mini on par with low-cost offerings from Google and Anthropic: well above GPT-3.5 Turbo, but below the strongest LLMs on the market. This creates a clear value proposition for users who want better performance than earlier generations without premium pricing.

Audio is billed at much higher rates than text: GPT-4o Mini's audio-capable variants cost $10 per 1M audio input tokens and $20 per 1M audio output tokens. This multimodal capability extends its utility beyond text-based applications, though under a very different cost structure.

Head-to-Head Performance Analysis: DeepSeek V3 vs GPT-4o Mini

Reasoning and Mathematical Capabilities

| Benchmark | DeepSeek V3 | GPT-4o Mini | Gap (percentage points) |
|---|---|---|---|
| MMLU | 88.5% | 82.1% | +6.4 (DeepSeek V3) |
| MMLU-Pro | 75.9% | 68.3% | +7.6 (DeepSeek V3) |
| GPQA | 59.1% | 52.7% | +6.4 (DeepSeek V3) |
| Math Problem Solving | 87.2% | 79.5% | +7.7 (DeepSeek V3) |

In Artificial Analysis's independent evaluations, DeepSeek V3 rates above average for quality, with an MMLU score of 0.752 and an Intelligence Index of 46. That score is lower than the 88.5 in the table because it comes from a different evaluation setup, so compare scores only within a single source. On either measure, DeepSeek V3's reasoning performance makes it well suited to applications requiring complex analytical thinking and mathematical computation.

Coding Performance Comparison

| Coding Task | DeepSeek V3 | GPT-4o Mini | Winner |
|---|---|---|---|
| Code Generation | 89.3% | 84.7% | DeepSeek V3 |
| Code Debugging | 91.5% | 87.2% | DeepSeek V3 |
| Code Completion | 88.7% | 85.1% | DeepSeek V3 |
| Algorithm Design | 86.4% | 80.9% | DeepSeek V3 |
| Code Documentation | 83.2% | 88.5% | GPT-4o Mini |

DeepSeek V3 leads on four of the five coding tasks, with its widest margins in algorithm design (+5.5 points) and code generation (+4.6 points). GPT-4o Mini wins only code documentation (+5.3 points), which may reflect a stronger emphasis on natural language generation in its training.

Context Window and Processing

| Feature | DeepSeek V3 | GPT-4o Mini | Advantage |
|---|---|---|---|
| Context Window | 128K tokens | 128K tokens | Tie |
| Long Context Performance | Excellent | Good | DeepSeek V3 |
| Processing Speed | High | Very High | GPT-4o Mini |
| Memory Efficiency | Excellent | Good | DeepSeek V3 |

Both models support 128K token context windows, but DeepSeek V3 demonstrates superior performance in long-context scenarios, making it more suitable for complex development tasks requiring extensive code analysis.

Cost Analysis and Economic Considerations

Pricing Structure Breakdown

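| Cost Component | DeepSeek V3 | GPT-4o Mini | DeepSeek V3 Savings |
|---|---|---|---|
| Input Tokens (per 1M) | $0.14 | $0.15 | 6.7% cheaper |
| Output Tokens (per 1M) | $0.28 | $0.60 | 53.3% cheaper |
| Blended Cost (3:1 input:output, per 1M) | $0.175 (rounds to $0.18) | $0.2625 (rounds to $0.26) | 33.3% cheaper (30.8% if computed from the rounded figures) |
| Access | Paid API, or self-host the open weights (hardware costs apply) | Paid API only | Variable |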

Artificial Analysis lists DeepSeek V3 at $0.48 per 1M tokens (blended 3:1), below the average for comparable models but well above the blended figure in the table. The $0.14/$0.28 rates in the table were DeepSeek's promotional launch pricing, and its rates have changed since, so check current pricing before relying on the savings figures in this section. At the table's rates, DeepSeek V3's advantage is concentrated in output tokens.
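The blended figures are a weighted average of input and output prices. This minimal sketch, which takes the pricing table's rates as inputs, shows why the 3:1 saving comes out at about 31% from the rounded $0.18 and $0.26 blends but exactly one third (33.3%) from the unrounded values. `blended_price` is a hypothetical helper, not part of any provider's SDK.

```python
def blended_price(input_per_m, output_per_m, input_parts=3, output_parts=1):
    """Average USD price per 1M tokens for a given input:output token mix (default 3:1)."""
    return (input_per_m * input_parts + output_per_m * output_parts) / (input_parts + output_parts)

# Per-1M-token prices from the pricing table above (USD); rates change, so treat as inputs.
DEEPSEEK_V3 = (0.14, 0.28)
GPT_4O_MINI = (0.15, 0.60)

ds = blended_price(*DEEPSEEK_V3)   # 0.175 before rounding
gpt = blended_price(*GPT_4O_MINI)  # 0.2625 before rounding
print(f"DeepSeek V3 ${ds:.4f}, GPT-4o Mini ${gpt:.4f}, saving {1 - ds / gpt:.1%}")
print(f"Saving from rounded $0.18/$0.26: {1 - 0.18 / 0.26:.1%}")
```

Rounding the blends before dividing is what shaves the saving from 33.3% to 30.8%, so keep full precision when projecting budgets.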

Total Cost of Ownership Analysis

DeepSeek V3 TCO Advantages:

- Open-source licensing eliminates ongoing licensing fees

- Self-hosting options reduce long-term operational costs

- No vendor lock-in provides pricing flexibility

- Community support reduces support costs

GPT-4o Mini TCO Advantages:

- Managed infrastructure eliminates setup and maintenance costs

- Predictable pricing structure simplifies budget planning

- Enterprise-grade reliability reduces operational risks

- Comprehensive documentation and support ecosystem

Volume Usage Economics

| Monthly Token Usage (3:1 input:output) | DeepSeek V3 Cost | GPT-4o Mini Cost | Savings |
|---|---|---|---|
| 10M tokens | $1.80 | $2.60 | $0.80 (31%) |
| 100M tokens | $18.00 | $26.00 | $8.00 (31%) |
| 1B tokens | $180.00 | $260.00 | $80.00 (31%) |
| 10B tokens | $1,800.00 | $2,600.00 | $800.00 (31%) |

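In percentage terms the saving is the same at every volume (about 31% at the rounded blended rates), but the absolute gap grows linearly with usage, from $0.80 a month at 10M tokens to $800 at 10B tokens. That makes DeepSeek V3's price advantage most meaningful for high-volume applications and enterprise deployments.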
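Because DeepSeek V3's price advantage sits mostly in output tokens, the savings percentage depends heavily on the input:output mix, which the table fixes at 3:1. This sketch projects monthly cost for 1B tokens under different mixes; the prices come from the pricing table, while the 10:1 and 1:1 mixes and the `monthly_cost` helper are illustrative assumptions.

```python
def monthly_cost(total_tokens, input_per_m, output_per_m, input_share):
    """USD cost of total_tokens when input_share of them are input (prompt) tokens."""
    millions = total_tokens / 1_000_000
    return millions * (input_share * input_per_m + (1 - input_share) * output_per_m)

# Per-1M-token prices from the pricing table (USD).
PRICES = {"DeepSeek V3": (0.14, 0.28), "GPT-4o Mini": (0.15, 0.60)}
MONTHLY_TOKENS = 1_000_000_000

# Illustrative input:output mixes, expressed as the input share of all tokens.
for label, share in [("10:1", 10 / 11), ("3:1", 3 / 4), ("1:1", 1 / 2)]:
    ds = monthly_cost(MONTHLY_TOKENS, *PRICES["DeepSeek V3"], share)
    gpt = monthly_cost(MONTHLY_TOKENS, *PRICES["GPT-4o Mini"], share)
    print(f"{label}: DeepSeek V3 ${ds:,.2f} vs GPT-4o Mini ${gpt:,.2f} ({1 - ds / gpt:.0%} saving)")
```

At these prices the saving is 20% at 10:1, about 33% at 3:1, and 44% at 1:1, so output-heavy workloads such as long-form generation benefit most.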

Real-World Implementation Scenarios

Software Development Teams

For software development teams, the choice between DeepSeek V3 and GPT-4o Mini depends on specific use cases and requirements:

DeepSeek V3 Optimal Scenarios:

- Complex algorithm development and optimization

- Large-scale code refactoring projects

- Mathematical modeling and scientific computing

- Performance-critical applications requiring deep reasoning

GPT-4o Mini Optimal Scenarios:

- Rapid prototyping and development iterations

- Code documentation and commenting

- Integration with existing OpenAI-based workflows

- Teams requiring immediate deployment without setup overhead

Business Intelligence and Analytics

Both models serve different aspects of business intelligence applications:

DeepSeek V3 Advantages:

- Superior performance in financial modeling and analysis

- Advanced statistical reasoning capabilities

- Cost-effective for high-volume data processing

- Customizable for specific industry requirements

GPT-4o Mini Advantages:

- Excellent natural language report generation

- Streamlined integration with business applications

- Reliable performance for standard analytics tasks

- Comprehensive API ecosystem for rapid development

Startup and SME Considerations

For startups and small-to-medium enterprises, the decision factors include:

| Factor | DeepSeek V3 | GPT-4o Mini | SME Preference |
|---|---|---|---|
| Initial Setup Cost | Medium | Low | GPT-4o Mini |
| Long-term Costs | Low | Medium | DeepSeek V3 |
| Technical Expertise Required | High | Low | GPT-4o Mini |
| Scalability | Excellent | Good | DeepSeek V3 |
| Time to Market | Slower | Faster | GPT-4o Mini |

Technical Implementation Considerations

Infrastructure Requirements

DeepSeek V3 Infrastructure:

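- Weights alone take roughly 671 GB in FP8 (about 1 byte per parameter), and all 671B parameters must stay in memory even though only about 37B are active per token, so a single 80GB GPU cannot hold the model

- Multi-GPU, often multi-node, setup required for production use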

- Specialized hardware for inference optimization

- Container orchestration for scalability
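A back-of-the-envelope estimate shows why self-hosting needs many GPUs. This sketch assumes weight memory is parameters times bytes per parameter and adds a hypothetical 20% overhead for KV cache, activations, and runtime buffers; real requirements depend on the serving engine, context length, and batch size, and deployments typically round up to full multi-GPU nodes.

```python
import math

def weight_memory_gb(params_billion, bytes_per_param):
    """Memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * bytes_per_param

def min_gpus(params_billion, bytes_per_param, gpu_mem_gb, overhead=1.2):
    """Fewest GPUs whose pooled memory fits the weights times an assumed overhead
    factor (1.2 here, a placeholder) for KV cache, activations and runtime buffers."""
    needed_gb = weight_memory_gb(params_billion, bytes_per_param) * overhead
    return math.ceil(needed_gb / gpu_mem_gb)

# All 671B parameters must be resident, even though only ~37B are active per token.
for fmt, nbytes in [("BF16", 2), ("FP8", 1), ("4-bit", 0.5)]:
    print(f"{fmt}: {weight_memory_gb(671, nbytes):,.0f} GB of weights, "
          f">= {min_gpus(671, nbytes, 80)} x 80GB GPUs")
```

Even aggressive 4-bit quantization leaves more than 300 GB of weights, which is why the model's MoE efficiency shows up as lower compute per token rather than lower memory.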

GPT-4o Mini Infrastructure:

- API-based access eliminates hardware requirements

- Standard web infrastructure for integration

- Minimal technical setup overhead

- Automatic scaling and availability

Development Workflow Integration

| Integration Aspect | DeepSeek V3 | GPT-4o Mini | Complexity |
|---|---|---|---|
| API Implementation | Custom | Standard | GPT-4o Mini easier |
| Model Deployment | Complex | Simple | GPT-4o Mini easier |
| Performance Tuning | Extensive | Limited | DeepSeek V3 more flexible |
| Monitoring & Logging | Custom | Built-in | GPT-4o Mini easier |
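For teams that use DeepSeek's hosted API rather than self-hosting, integration is closer to GPT-4o Mini than the table suggests: DeepSeek documents its API as OpenAI-compatible, and self-hosting engines such as vLLM and SGLang also expose OpenAI-style endpoints. This stdlib-only sketch builds, without sending, the same chat-completions request for either provider. `build_chat_request` is a hypothetical helper, and the endpoint URLs and the `deepseek-chat` model name reflect the providers' documentation at the time of writing, so verify them before use.

```python
import json
import urllib.request

# Chat-completions endpoints (check each provider's docs for current URLs and model names).
ENDPOINTS = {
    "gpt-4o-mini": "https://api.openai.com/v1/chat/completions",
    "deepseek-chat": "https://api.deepseek.com/chat/completions",
}

def build_chat_request(model, prompt, api_key, max_tokens=256):
    """Build, but do not send, an OpenAI-style chat-completions POST request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        ENDPOINTS[model],
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )

# Switching providers changes only the model name (and therefore the endpoint and key).
req = build_chat_request("deepseek-chat", "Summarise this function.", api_key="YOUR_API_KEY")
print(req.get_method(), req.full_url)
# Sending it: json.load(urllib.request.urlopen(req)) returns the parsed response.
```

Keeping the request shape identical across providers is also the simplest form of the vendor-agnostic architecture recommended later for mitigating lock-in.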

Security and Compliance

DeepSeek V3 Security Advantages:

- Complete data control with self-hosting

- No external API dependencies

- Customizable security implementations

- Compliance with strict data residency requirements

GPT-4o Mini Security Advantages:

- Enterprise-grade security infrastructure

- Regular security updates and patches

- Compliance certifications and audits

- Established security track record

Performance Optimization Strategies

DeepSeek V3 Optimization

Hardware Optimization:

- Utilize GPU clustering for distributed inference

- Implement dynamic batching for efficiency

- Optimize memory allocation for large context windows

- Configure specialized networking for multi-node setups
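Dynamic batching trades a little latency for throughput by grouping requests that arrive close together. This minimal sketch simulates request-level batching with two assumed knobs, `max_batch_size` and `max_wait`; the `form_batches` helper is hypothetical, and production engines such as vLLM go further with continuous, iteration-level batching.

```python
def form_batches(arrivals, max_batch_size, max_wait):
    """Group sorted request arrival times (seconds) into batches.

    A batch is dispatched when it is full, or when its oldest request has
    waited max_wait seconds, whichever happens first.
    Returns a list of (dispatch_time, arrival_times) tuples.
    """
    batches, current = [], []
    for t in arrivals:
        # The open batch's deadline passed before this request arrived: flush it.
        if current and t > current[0] + max_wait:
            batches.append((current[0] + max_wait, current))
            current = []
        current.append(t)
        if len(current) == max_batch_size:
            batches.append((t, current))  # full batch goes out immediately
            current = []
    if current:
        batches.append((current[0] + max_wait, current))
    return batches

for dispatch, batch in form_batches([0.0, 0.01, 0.02, 0.5, 0.51], max_batch_size=2, max_wait=0.05):
    print(f"dispatch at {dispatch:.2f}s: {len(batch)} request(s)")
```

A larger `max_wait` fills more batches (better GPU utilization) at the cost of tail latency for requests that arrive alone.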

Software Optimization:

- Fine-tune model parameters for specific use cases

- Implement efficient tokenization strategies

- Optimize inference pipelines for throughput

- Develop custom caching mechanisms
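A custom cache can be as simple as an LRU map keyed on a hash of the full request. This is a sketch, not a production design: `ResponseCache` and its methods are hypothetical, and it is only safe for deterministic requests (for example temperature 0), since sampled outputs legitimately differ between calls.

```python
import hashlib
import json
from collections import OrderedDict

class ResponseCache:
    """Small LRU cache for model responses, keyed on a hash of the full request."""

    def __init__(self, max_entries=1024):
        self.max_entries = max_entries
        self._store = OrderedDict()
        self.hits = 0
        self.misses = 0

    @staticmethod
    def key(model, prompt, **params):
        # sort_keys makes the key independent of parameter order.
        raw = json.dumps({"model": model, "prompt": prompt, "params": params}, sort_keys=True)
        return hashlib.sha256(raw.encode("utf-8")).hexdigest()

    def get_or_compute(self, model, prompt, compute, **params):
        """Return a cached response, or call compute() and store its result."""
        k = self.key(model, prompt, **params)
        if k in self._store:
            self._store.move_to_end(k)  # mark as most recently used
            self.hits += 1
            return self._store[k]
        self.misses += 1
        result = compute()
        self._store[k] = result
        if len(self._store) > self.max_entries:
            self._store.popitem(last=False)  # evict the least recently used entry
        return result

cache = ResponseCache(max_entries=2)
fake_model = lambda: "generated text"  # stands in for a real inference call
cache.get_or_compute("deepseek-v3", "Explain MoE", fake_model, temperature=0)
cache.get_or_compute("deepseek-v3", "Explain MoE", fake_model, temperature=0)
print(cache.hits, cache.misses)  # 1 1
```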

GPT-4o Mini Optimization

API Optimization:

- Implement intelligent request batching

- Utilize response caching for repeated queries

- Optimize prompt engineering for token efficiency

- Implement rate limiting and retry mechanisms
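For rate limiting and retries, a common pattern is exponential backoff with full jitter. In this sketch `RateLimitError` is a hypothetical stand-in for whatever HTTP 429 exception your client raises, and the delay parameters are assumptions to tune against your actual rate limits. The official OpenAI Python SDK also retries some failures on its own through its `max_retries` option, so check its documentation before layering a second retry loop on top.

```python
import random
import time

class RateLimitError(Exception):
    """Hypothetical stand-in for a client library's HTTP 429 exception."""

def call_with_retries(fn, max_attempts=5, base_delay=0.5, max_delay=30.0, sleep=time.sleep):
    """Call fn(); on RateLimitError, wait with exponential backoff plus full jitter and retry."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the caller handle it
            # Backoff ceiling doubles each attempt (0.5s, 1s, 2s, ...) up to max_delay.
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            # Full jitter: a random wait in [0, ceiling] keeps many clients from retrying in lockstep.
            sleep(random.uniform(0, ceiling))

# Usage with a hypothetical client call:
# reply = call_with_retries(lambda: send_request(req))
```

The injectable `sleep` argument keeps the function easy to test without real delays.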

Cost Optimization:

- Monitor token usage patterns

- Implement prompt compression techniques

- Use streaming responses for better user experience

- Optimize for input/output token ratios
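Monitoring token usage and the input/output ratio needs little more than accumulating the token counts each response reports (`usage.prompt_tokens` and `usage.completion_tokens` in OpenAI's chat-completions responses). The `UsageTracker` class and its per-feature grouping are hypothetical; the default prices are GPT-4o Mini's text rates quoted earlier.

```python
from collections import defaultdict
from dataclasses import dataclass, field

@dataclass
class UsageTracker:
    """Accumulates token counts per feature and estimates spend from per-1M-token prices."""
    input_price_per_m: float = 0.15   # GPT-4o Mini text input, USD per 1M tokens
    output_price_per_m: float = 0.60  # GPT-4o Mini text output, USD per 1M tokens
    totals: dict = field(default_factory=lambda: defaultdict(lambda: [0, 0]))

    def record(self, feature, input_tokens, output_tokens):
        """Add one response's usage, e.g. usage.prompt_tokens / usage.completion_tokens."""
        counts = self.totals[feature]
        counts[0] += input_tokens
        counts[1] += output_tokens

    def cost(self, feature):
        """Estimated USD spend for a feature so far."""
        inp, out = self.totals[feature]
        return (inp * self.input_price_per_m + out * self.output_price_per_m) / 1_000_000

    def io_ratio(self, feature):
        """Input tokens per output token; a high ratio points at prompt compression."""
        inp, out = self.totals[feature]
        return inp / out if out else float("inf")

tracker = UsageTracker()
tracker.record("summaries", input_tokens=1_200, output_tokens=300)
tracker.record("summaries", input_tokens=1_800, output_tokens=200)
print(f"cost ${tracker.cost('summaries'):.6f}, input:output {tracker.io_ratio('summaries'):.1f}:1")
```

Grouping by feature makes it easy to spot which part of an application drives spend, and whether its cost comes from long prompts or long outputs.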

Future Development Roadmap

DeepSeek V3 Evolution

The DeepSeek team continues to advance the model with regular updates and improvements:

Planned Enhancements:

- Improved multimodal capabilities

- Enhanced reasoning chain transparency

- Optimized inference performance

- Extended context window support

Community Contributions:

- Open-source fine-tuning tools

- Performance optimization libraries

- Integration frameworks and SDKs

- Benchmarking and evaluation tools

GPT-4o Mini Development

OpenAI’s roadmap for GPT-4o Mini includes:

Feature Improvements:

- Enhanced reasoning capabilities

- Improved code generation accuracy

- Better multilingual support

- Advanced function calling

Ecosystem Expansion:

- More comprehensive API features

- Enhanced developer tools

- Improved integration options

- Cost optimization initiatives

Decision Framework for Developers and Businesses

Technical Decision Matrix

| Criteria | Weight | DeepSeek V3 Score | GPT-4o Mini Score | DeepSeek V3 Weighted | GPT-4o Mini Weighted |
|---|---|---|---|---|---|
| Performance | 30% | 9.2 | 8.1 | 2.76 | 2.43 |
| Cost | 25% | 9.5 | 7.8 | 2.38 | 1.95 |
| Ease of Use | 20% | 6.5 | 9.2 | 1.30 | 1.84 |
| Scalability | 15% | 9.0 | 8.3 | 1.35 | 1.25 |
| Support | 10% | 7.0 | 9.0 | 0.70 | 0.90 |
| Total | 100% | | | 8.49 | 8.37 |
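Because the weighted totals are close (8.49 vs 8.37), the outcome is sensitive to the weights you choose. This sketch recomputes the matrix from the table's scores and weights, then swaps the Performance and Ease of Use weights as an illustrative what-if; the swapped weighting is an assumption, not a recommendation.

```python
# Criteria weights and 0-10 scores from the decision matrix above.
WEIGHTS = {"Performance": 0.30, "Cost": 0.25, "Ease of Use": 0.20, "Scalability": 0.15, "Support": 0.10}
SCORES = {
    "DeepSeek V3": {"Performance": 9.2, "Cost": 9.5, "Ease of Use": 6.5, "Scalability": 9.0, "Support": 7.0},
    "GPT-4o Mini": {"Performance": 8.1, "Cost": 7.8, "Ease of Use": 9.2, "Scalability": 8.3, "Support": 9.0},
}

def weighted_total(scores, weights):
    """Sum of weight x score across criteria; weights must sum to 1."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(weights[c] * scores[c] for c in weights)

# What-if: a team that values ease of use over raw performance (illustrative weights).
SWAPPED = {**WEIGHTS, "Performance": 0.20, "Ease of Use": 0.30}

for name, weights in [("Original weights", WEIGHTS), ("Swapped weights", SWAPPED)]:
    totals = {model: round(weighted_total(s, weights), 3) for model, s in SCORES.items()}
    print(name, totals)
```

With those two weights swapped, GPT-4o Mini comes out ahead (8.475 vs 8.215), so it is worth plugging in your own priorities before treating either total as decisive.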

Use Case Recommendations

Choose DeepSeek V3 When:

- Performance and accuracy are paramount

- Long-term cost optimization is crucial

- Complex reasoning tasks are primary use cases

- Data sovereignty and control are required

- Technical expertise is available for implementation

Choose GPT-4o Mini When:

- Rapid deployment is essential

- Standard AI tasks dominate usage

- Minimal technical overhead is preferred

- Established ecosystem integration is valuable

- Predictable costs are important for budgeting

Implementation Timeline Considerations

| Phase | DeepSeek V3 Duration | GPT-4o Mini Duration | Time Advantage |
|---|---|---|---|
| Setup & Configuration | 2-4 weeks | 1-3 days | GPT-4o Mini |
| Integration | 3-6 weeks | 1-2 weeks | GPT-4o Mini |
| Testing & Optimization | 4-8 weeks | 2-4 weeks | GPT-4o Mini |
| Production Deployment | 2-4 weeks | 1 week | GPT-4o Mini |
| Total Time to Production | 11-22 weeks | 4-8 weeks | GPT-4o Mini |

Risk Assessment and Mitigation

DeepSeek V3 Risks

Technical Risks:

- Hardware compatibility issues

- Model deployment complexity

- Performance optimization challenges

- Maintenance and update overhead

Business Risks:

- Longer implementation timelines

- Higher technical skill requirements

- Potential stability issues

- Limited commercial support

Mitigation Strategies:

- Invest in technical training and expertise

- Develop comprehensive testing protocols

- Establish fallback and backup systems

- Build strong community engagement

GPT-4o Mini Risks

Technical Risks:

- API dependency and availability

- Limited customization options

- Potential performance bottlenecks

- Rate limiting constraints

Business Risks:

- Vendor lock-in concerns

- Pricing changes and increases

- Service discontinuation risk

- Data privacy and security concerns

Mitigation Strategies:

- Implement multi-vendor strategies

- Develop vendor-agnostic architectures

- Establish data governance protocols

- Monitor usage and cost patterns

Conclusion and Recommendations

The battle between DeepSeek V3 and GPT-4o Mini represents a fascinating intersection of open-source innovation and commercial efficiency. Our comprehensive analysis reveals that both models offer compelling value propositions, but for different organizational contexts and use cases.

DeepSeek V3 emerges as the clear winner for:

- Organizations prioritizing long-term cost optimization

- Teams requiring superior reasoning and mathematical capabilities

- Businesses needing data sovereignty and control

- Development teams with strong technical expertise

GPT-4o Mini excels for:

- Rapid deployment and immediate productivity

- Standard AI applications without specialized requirements

- Teams seeking minimal technical overhead

- Organizations valuing established ecosystem support

The cost analysis reveals DeepSeek V3’s significant advantage in high-volume scenarios, with approximately 31% cost savings over GPT-4o Mini. However, GPT-4o Mini’s ease of implementation and comprehensive support ecosystem provide compelling value for organizations prioritizing time-to-market and operational simplicity.

For developers and businesses making this decision, we recommend:

- Conduct a pilot program with both models to evaluate real-world performance

- Assess internal technical capabilities to determine implementation feasibility

- Project long-term usage volumes to understand total cost implications

- Evaluate data sensitivity and regulatory requirements

- Consider hybrid approaches using both models for different use cases

The budget AI showdown between DeepSeek V3 and GPT-4o Mini ultimately demonstrates the maturation of the AI market, where organizations can choose between powerful alternatives based on their specific needs, constraints, and strategic objectives. Both models represent significant value in the current AI landscape, ensuring that the real winner is the developer and business community benefiting from these accessible, high-performance AI solutions.

As the AI landscape continues to evolve, the competition between open-source and commercial solutions will likely intensify, driving further innovation, improved performance, and reduced costs across the entire ecosystem. Organizations that carefully evaluate their options and make informed decisions based on comprehensive analysis will be best positioned to leverage these powerful AI capabilities for competitive advantage.

Sources

Analytics Vidhya – GPT-4o Mini Features and Performance

Hugging Face – LLM Comparison/Test: DeepSeek-V3 Benchmark

BentoML – The Complete Guide to DeepSeek Models

GitHub – DeepSeek-V3 Official Repository

ArXiv – DeepSeek-V3 Technical Report

Hugging Face – DeepSeek-V3 Model Page

Artificial Analysis – DeepSeek V3 Performance Analysis

Analytics Vidhya – DeepSeek V3 Budget Analysis

OpenAI – GPT-4o Mini Official Announcement

Artificial Analysis – GPT-4o Mini Performance Analysis

Medium – Performance Showdown of Low-Cost LLMs

Medium – GPT-4o Mini Independent Evaluation