Anthropic’s new Claude Opus 4.1 AI coding model challenges GPT-5. This 2025 showdown compares benchmarks, API pricing, and enterprise use cases to see who wins.

Key Takeaways

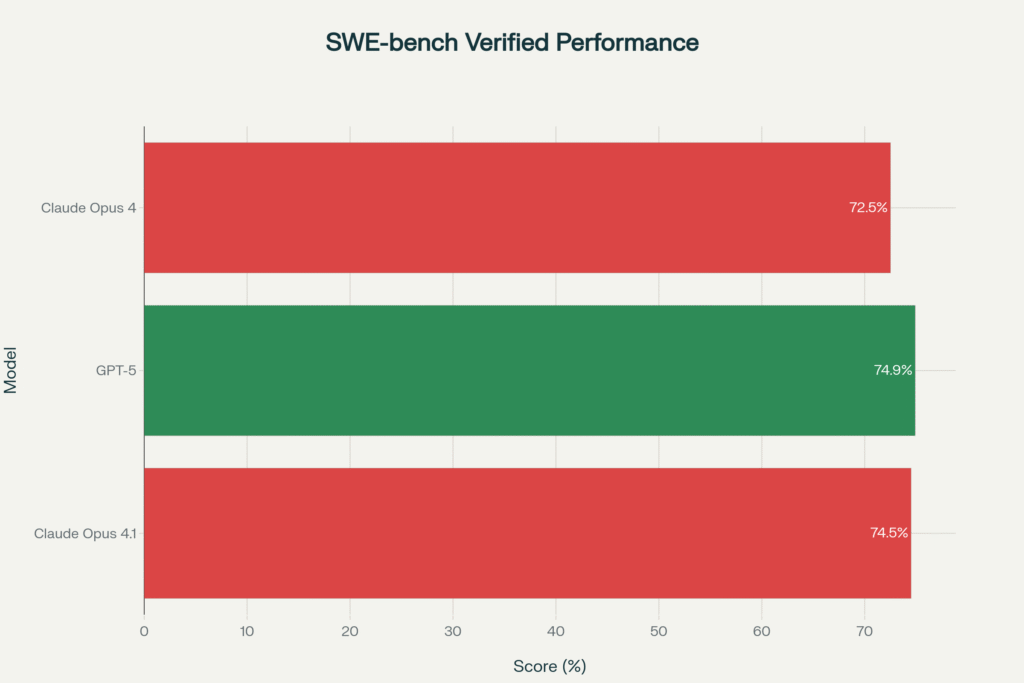

- Benchmark Battle: On the critical SWE-bench for coding, GPT-5 holds a razor-thin lead at 74.9%, while Claude Opus 4.1 is close behind at 74.5%. This places both at the peak of AI coding capabilities.

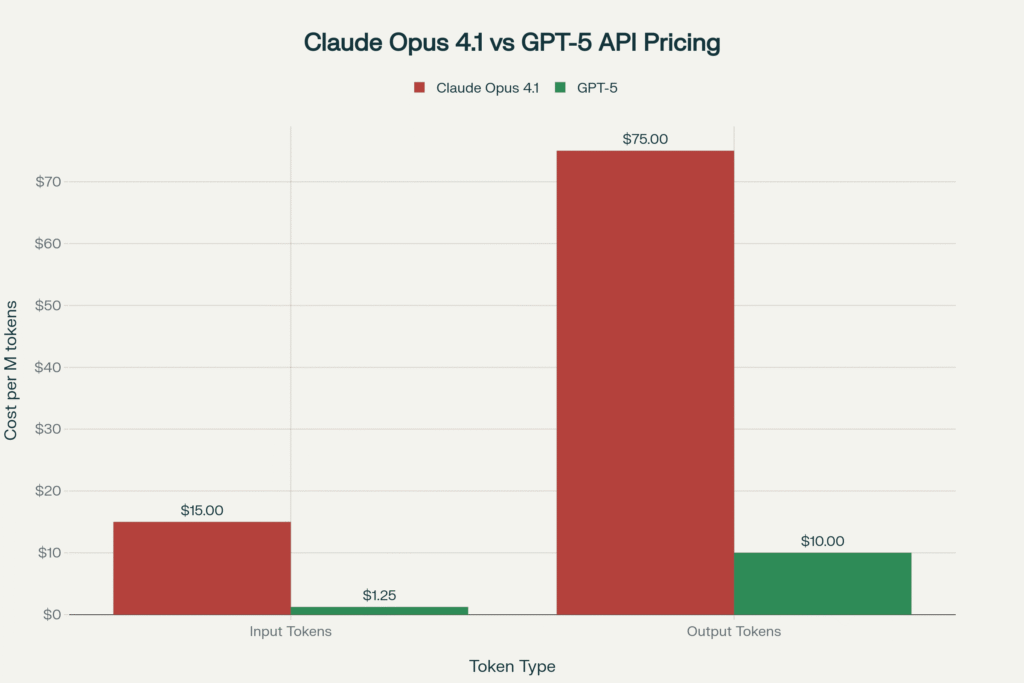

- Cost Divide: The most significant difference is price. Claude Opus 4.1 is a premium tool at $15 per million input tokens and $75 per million output tokens. GPT-5 is aggressively priced for broad adoption at approximately $1.25 per million input tokens and $10 per million output tokens, making it 12 times cheaper on input and 7.5 times cheaper on output.

- Strategic Focus: Anthropic positions Claude Opus 4.1 as a high-precision “scalpel” for complex, enterprise-grade coding and agentic tasks. OpenAI offers GPT-5 as a versatile and accessible “Swiss Army knife” for a wide range of users and general-purpose tasks.

- Enterprise Adoption: Anthropic has gained significant traction in the business world, capturing 32% of the enterprise AI market share compared to OpenAI’s 25%. In coding-specific tasks, Claude’s lead is even more pronounced at 42% versus 21%.

Introduction: The AI Coding Revolution

The artificial intelligence landscape of August 2025 is being forged in the fires of intense competition, not just for general intelligence, but for specialized, high-value applications. The software development lifecycle is being fundamentally transformed by AI, and two titans have emerged to define its future: Anthropic’s Claude Opus 4.1 and OpenAI’s GPT-5.

The near-simultaneous releases (Claude on August 5, 2025, and GPT-5 just two days later) have set the stage for an unprecedented rivalry. However, this isn’t a simple race to the top of the leaderboards. It represents a strategic divergence in philosophy. The Claude Opus 4.1 vs GPT-5 contest showcases two distinct paths: Anthropic is doubling down on precision, safety, and reliability for enterprise AI, while OpenAI focuses on democratizing cutting-edge AI through versatility and aggressive pricing. For developers, enterprises, and researchers, understanding this divide is critical to making the right strategic investment in AI.

Launch Details: Availability & Platforms

Anthropic ensured its latest model was immediately accessible to its core user base upon its August 5, 2025, release, leveraging a multi-channel distribution strategy to penetrate deep into enterprise workflows. GPT-5 followed suit on August 7, 2025, with a widespread release across its massive ecosystem.

Claude Opus 4.1 is available via:

- The Claude.ai web interface and mobile apps for all paying subscribers (Pro, Max, Team, and Enterprise).

- The Anthropic Developer API, where upgrading is as simple as changing the model string.

- Claude Code, a dedicated command-line interface (CLI) tool for development workflows.

- Major cloud platforms, including Amazon Bedrock and Google Cloud’s Vertex AI.

- A public preview for GitHub Copilot users on Enterprise and Pro+ plans.
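To illustrate the "changing the model string" point above, here is a minimal sketch that builds two request bodies for the Anthropic Messages API and shows that only the `model` field differs. The model identifiers and the helper name `build_messages_request` are assumptions for illustration, so check Anthropic's model documentation for the current IDs. The sketch only constructs the JSON body and sends nothing over the network.

```python
import json

# Assumed model identifiers; verify against Anthropic's model documentation.
OPUS_4 = "claude-opus-4-20250514"
OPUS_4_1 = "claude-opus-4-1-20250805"


def build_messages_request(model: str, prompt: str, max_tokens: int = 1024) -> dict:
    """Build a JSON body for a Messages API call (hypothetical helper)."""
    return {
        "model": model,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }


prompt = "Refactor this function for readability."
before = build_messages_request(OPUS_4, prompt)
after = build_messages_request(OPUS_4_1, prompt)

# Collect the keys whose values differ between the two request bodies.
changed = {key for key in before if before[key] != after[key]}
print(changed)
print(json.dumps(after, indent=2))
```

In a real integration the same body would be passed to an HTTP client or the official SDK along with an API key, which is left out here on purpose.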

GPT-5 was rolled out across:

- The OpenAI API, with multiple variants to suit different needs.

- GitHub Copilot and other Microsoft platforms like Azure AI Foundry.

- ChatGPT, where it serves as the new default model for both free and paid tiers.

This strategic availability, particularly on platforms like Bedrock and Vertex AI, allows enterprises to easily integrate Claude into their existing cloud infrastructure and billing cycles, creating a competitive moat based on convenience and performance.

| Platform | Claude Opus 4.1 Availability | GPT-5 Availability |
| --- | --- | --- |
| Direct Web Access | Claude.ai (Paid Plans) | ChatGPT (Free & Paid) |
| Developer API | Anthropic API | OpenAI API |
| Cloud Marketplaces | Amazon Bedrock, Google Vertex AI | Microsoft Azure AI Foundry |
| Developer Tools | Claude Code, GitHub Copilot (Preview) | GitHub Copilot |

Key Improvements in Claude Opus 4.1

Claude Opus 4.1 is not a radical overhaul but a focused, incremental upgrade that sharpens its capabilities for professional users. The improvements center on coding accuracy, reasoning for agentic tasks, and enterprise-grade safety.

Coding Accuracy: 74.5% on SWE-bench

A 74.5% score on SWE-bench places Claude Opus 4.1 among the top-tier coding models. This is a two-percentage-point gain over its predecessor, Claude Opus 4 (72.5%). SWE-bench is a highly respected benchmark because it tests a model’s ability to solve real-world software engineering problems drawn from GitHub, making it a credible measure of practical utility. Although Claude Opus 4.1 slightly trails GPT-5’s 74.9%, its performance is validated by industry partners: GitHub noted “notable performance gains in multi-file code refactoring,” and the Rakuten Group praised its “surgical precision” in fixing bugs without creating new ones, a common frustration with less refined AI models.

Reasoning & Agentic Tasks

Anthropic explicitly designed the Claude AI coding model for superior performance in agentic tasks: complex, multi-step workflows that require a high degree of autonomy. A key innovation is its ability to use up to 64,000 tokens for “extended thinking,” a dedicated cognitive workspace that allows the model to break down problems and maintain a coherent plan. This translates into AI agents that can operate independently for hours, performing deep market research or managing complex enterprise workflows with a lower risk of error.

Enterprise-Grade Features

Beyond raw performance, Claude Opus 4.1 is built for the enterprise. It boasts a large 200K token context window, allowing it to work with entire codebases or lengthy financial documents without losing coherence. Furthermore, its safety-first design, a hallmark of Anthropic’s mission, is evident in its improved refusal rate for harmful requests (98.76%) and a 25% reduction in cooperation with misuse requests, all while maintaining an extremely low refusal rate for benign prompts (0.08%). This focus on security and reliability is crucial for regulated industries.
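As a rough way to check whether a codebase would fit in a 200K-token window, the sketch below estimates token counts from character counts. The 4-characters-per-token ratio, the 20,000-token reserve, and all function names are assumptions for illustration. A real tokenizer will give different numbers, so treat the result as a ballpark only.

```python
import tempfile
from pathlib import Path

CONTEXT_WINDOW_TOKENS = 200_000  # context window size quoted in the article
CHARS_PER_TOKEN = 4              # assumption: rough average, not a real tokenizer


def estimate_tokens(text: str) -> int:
    """Estimate token count by ceiling-dividing the character count."""
    return -(-len(text) // CHARS_PER_TOKEN)


def estimate_codebase_tokens(root: Path, suffixes: tuple[str, ...] = (".py",)) -> int:
    """Sum the estimated tokens of every matching file under root."""
    return sum(
        estimate_tokens(path.read_text(encoding="utf-8", errors="ignore"))
        for path in root.rglob("*")
        if path.is_file() and path.suffix in suffixes
    )


def fits_in_context(tokens: int, reserve_tokens: int = 20_000) -> bool:
    """Leave headroom (hypothetical default) for instructions and the reply."""
    return tokens + reserve_tokens <= CONTEXT_WINDOW_TOKENS


# Demo on a throwaway directory so the sketch runs anywhere.
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "app.py").write_text("print('hello')\n" * 100, encoding="utf-8")
    demo_tokens = estimate_codebase_tokens(Path(tmp))

print(demo_tokens, fits_in_context(demo_tokens))
```

Pointing `estimate_codebase_tokens` at a real repository root, with the relevant file suffixes, gives a first indication of whether the code needs to be split across several requests.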

Practical Use Cases

The technical upgrades in Claude Opus 4.1 deliver tangible business value across different professional domains.

For Developers

The model excels at debugging and refactoring large, legacy codebases. Its documented “surgical precision” can dramatically cut down the time senior developers spend on modernizing critical systems. One analysis estimated that saving just eight hours of debugging time per week could generate over $10,000 in annual productivity from a modest subscription cost, delivering an ROI of over 40x.
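The arithmetic behind that estimate can be checked with a short calculation. This sketch assumes the subscription is the roughly $20/month Pro plan and that the savings apply across all 52 weeks of the year. Both are assumptions for illustration, and the $10,000 figure is simply the one quoted above.

```python
HOURS_SAVED_PER_WEEK = 8       # figure quoted in the article
WEEKS_PER_YEAR = 52            # assumption: savings apply every week of the year
ANNUAL_VALUE_USD = 10_000      # productivity figure quoted in the article
MONTHLY_SUBSCRIPTION_USD = 20  # assumption: the ~$20/month Pro plan

annual_cost = MONTHLY_SUBSCRIPTION_USD * 12
roi_multiple = ANNUAL_VALUE_USD / annual_cost
hours_saved_per_year = HOURS_SAVED_PER_WEEK * WEEKS_PER_YEAR
implied_hourly_value = ANNUAL_VALUE_USD / hours_saved_per_year

print(f"Annual subscription cost: ${annual_cost}")
print(f"ROI multiple: {roi_multiple:.1f}x")
print(f"Hours saved per year: {hours_saved_per_year}")
print(f"Implied value per saved hour: ${implied_hourly_value:.2f}")
```

Under these assumptions the subscription costs $240 a year, the return is about 41.7 times the cost, and each saved hour is valued at roughly $24.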

For Enterprises

For business strategists, the key application is building sophisticated, autonomous AI agents. The model’s enhanced capabilities enable agents that can manage long-horizon tasks, such as orchestrating marketing campaigns or complex cross-functional projects, with minimal human oversight. In specialized fields, its precision is being explored for medical diagnostics in healthcare and compliance monitoring in finance.

For Researchers

Researchers can leverage Opus 4.1 as a powerful tool for in-depth analysis of vast information landscapes, from patent databases to academic journals. Its ability to maintain context during long and complex analytical tasks moves its function beyond simple summarization to genuine synthesis and insight generation.

Claude Opus 4.1 vs GPT-5: Feature-by-Feature

The competition between these two models reveals a strategic divergence in the market. While their top-line performance on coding benchmarks is nearly identical, their core strengths, pricing, and ideal use cases are fundamentally different.

| Feature | Claude Opus 4.1 | GPT-5 |
| --- | --- | --- |
| SWE-Bench Score | 74.5% | 74.9% (with “Thinking” mode) |
| API Pricing (Input/Output) | $15 / $75 per million tokens | ~$1.25 / $10 per million tokens |
| Context Window | 200K tokens | 128K tokens |
| Enterprise Security | Advanced safety, mission-driven culture | Basic encryption |
| Best For | High-precision, complex coding; agentic tasks | Versatile tasks, rapid prototyping, accessibility |

Q&A Snippets

Q: Is Claude Opus 4.1 better than GPT-5 for coding? A: It depends on the task. For complex debugging, legacy systems, and tasks requiring “surgical precision,” Claude Opus 4.1 is often preferred. For rapid prototyping on common tech stacks and general-purpose coding, GPT-5’s speed and cost-effectiveness give it an edge.

Pricing & Access: Is Claude Worth 10× the Cost?

Anthropic’s pricing for Claude Opus 4.1 is unapologetically premium, reinforcing its position as a high-value tool for professionals.

- API Pricing: At $15 per million input tokens and $75 per million output tokens, it is 12 times more expensive than GPT-5 on input and 7.5 times more expensive on output, or roughly 10 times overall for a typical mix. For enterprises, Anthropic offers cost-management features like a 50% discount for batch processing and up to 90% savings with prompt caching.

- Subscriptions: Access via the Claude.ai interface is for paying users only.

  - Pro Plan (~$20/month): Limited access to Opus 4.1.

  - Max Plan (starting at $100/month): Offers 5 to 20 times more usage than Pro, aimed at individual power users.
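To make the API price gap concrete, the following sketch computes the cost of one workload under both price lists quoted in this article. The workload size (2 million input tokens, 500,000 output tokens) and the names `Pricing` and `cost_usd` are hypothetical. The batch discount is modeled as a flat 50% reduction, and prompt caching is not modeled at all.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """USD per million tokens, as quoted in the article."""
    input_per_mtok: float
    output_per_mtok: float


CLAUDE_OPUS_4_1 = Pricing(input_per_mtok=15.00, output_per_mtok=75.00)
GPT_5 = Pricing(input_per_mtok=1.25, output_per_mtok=10.00)


def cost_usd(pricing: Pricing, input_tokens: int, output_tokens: int,
             discount: float = 0.0) -> float:
    """Cost of a workload, with an optional flat fractional discount."""
    base = (input_tokens / 1_000_000 * pricing.input_per_mtok
            + output_tokens / 1_000_000 * pricing.output_per_mtok)
    return base * (1.0 - discount)


# Hypothetical workload: 2M input tokens and 0.5M output tokens.
INPUT_TOKENS, OUTPUT_TOKENS = 2_000_000, 500_000

claude_cost = cost_usd(CLAUDE_OPUS_4_1, INPUT_TOKENS, OUTPUT_TOKENS)
gpt5_cost = cost_usd(GPT_5, INPUT_TOKENS, OUTPUT_TOKENS)
claude_batch_cost = cost_usd(CLAUDE_OPUS_4_1, INPUT_TOKENS, OUTPUT_TOKENS, discount=0.5)

print(f"Claude Opus 4.1: ${claude_cost:.2f}")
print(f"GPT-5: ${gpt5_cost:.2f}")
print(f"Claude Opus 4.1 (batch): ${claude_batch_cost:.2f}")
print(f"Claude / GPT-5 cost ratio: {claude_cost / gpt5_cost:.1f}x")
```

For this particular mix the ratio comes out at 9x. The effective multiple moves between 7.5x and 12x depending on how output-heavy the workload is.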

Key Stat: An analysis suggests a senior developer could generate over $10,000 in annual productivity from a ~$240 Claude subscription, showcasing a massive potential ROI that justifies the premium cost for the right use case.

This pricing structure acts as a strategic filter, ensuring that users of Anthropic’s flagship model are those tackling problems where the return on investment is highest.

Future Outlook: Anthropic vs OpenAI in 2026

The release of Claude Opus 4.1 signals Anthropic’s long-term strategy: to dominate the high-value enterprise AI market through a focus on safety, reliability, and precision. Anthropic has already hinted at “substantially larger improvements… in the coming weeks,” positioning 4.1 as a stable foundation before the next major leap.

- Anthropic’s Roadmap: The focus will likely remain on expanding agentic workflows, deepening its hold in specialized verticals like healthcare AI, and leveraging its coding prowess to accelerate its own research and development.

- OpenAI’s Strategy: OpenAI will likely continue to pursue broad market dominance with GPT-6, focusing on multimodal capabilities and deep integrations into consumer and business ecosystems.

Market predictions from firms like Menlo Ventures suggest the enterprise AI sector will continue its explosive growth, creating a massive opportunity for premium solutions. Anthropic’s bet is that as AI becomes critical infrastructure, a growing segment of the market will prioritize the safety, reliability, and precision that Claude provides over the lower cost of competitors.

Conclusion: Which Should You Choose?

The choice between the Claude AI coding model and GPT-5 is no longer about which is “better,” but which is the right tool for the job.

- Choose GPT-5 if your priority is cost-effective versatility. For individual developers, startups, and high-volume applications like content generation or customer service automation, its combination of powerful performance and low cost is unbeatable.

- Choose Claude Opus 4.1 if your priority is security-critical precision. For enterprises operating in regulated industries, or for developers tackling complex, multi-file refactoring and mission-critical automation, Claude’s reliability and surgical accuracy justify its premium price.

The final verdict is clear: Claude wins on precision; GPT-5 wins on accessibility. The AI race of 2025 has matured, giving users powerful and distinct choices to drive the next wave of innovation.

Ready to see for yourself?

- Try Claude Opus 4.1 on Amazon Bedrock

- Test GPT-5 via the OpenAI API
