DeepSeek-R1-0528 vs Llama 4 Maverick is one of the most anticipated comparisons in the open-weight AI landscape. Both models deliver strong performance for developers who want capable language models they can download, fine-tune, and self-host.

This comprehensive comparison examines two cutting-edge open-source large language models that are reshaping how developers, researchers, and AI enthusiasts approach natural language processing tasks. Whether you’re building chatbots, coding assistants, or research applications, understanding the strengths of DeepSeek-R1-0528 and Llama 4 Maverick will help you make the right choice for your project.

We’ll dive deep into benchmark performance, architectural differences, use case scenarios, and real-world developer feedback to give you everything you need to choose between these powerful models.

| Feature | DeepSeek-R1-0528 | Llama 4 Maverick |
|---|---|---|
| Developer | DeepSeek AI | Meta AI |
| Architecture | Mixture-of-experts Transformer (DeepSeek-V3 base), trained for reasoning with reinforcement learning | Mixture-of-experts Transformer (128 routed experts plus a shared expert), natively multimodal |
| Parameters | 671B total, ~37B active per token | 400B total, 17B active per token |
| Context Length | 128K tokens | Up to 1M tokens |
| Training Tokens | ~14.8T (DeepSeek-V3-Base pretraining) | ~22T |
| Release Date | May 28, 2025 | April 5, 2025 |
| License | MIT | Llama 4 Community License |
| Primary Strengths | Mathematical reasoning, code generation | Long-context understanding, multilingual, image input |
| Best For | Code generation, STEM tasks | Research, content creation, analysis |

DeepSeek-R1-0528 Overview

Background and Development

DeepSeek AI released R1-0528 on May 28, 2025 as an update to DeepSeek-R1, the reasoning-focused model it first released in January 2025. Built specifically to excel at complex mathematical and logical reasoning tasks, this update represents a significant advancement in open-weight AI capabilities.

The “R1” name identifies DeepSeek’s reasoning model series, which works through problems step by step before answering, while “0528” refers to the May 28, 2025 release date. DeepSeek AI designed this model to compete directly with proprietary reasoning models while publishing the full weights under the permissive MIT license.

Architecture and Technical Specifications

DeepSeek-R1-0528 is built on the DeepSeek-V3 base model, a mixture-of-experts (MoE) transformer. Its reasoning ability comes mainly from large-scale reinforcement learning rather than from a new architecture. Key technical features include:

- 671 billion total parameters, of which about 37 billion are active for each token

- 128K token context window for substantial document processing

- Multi-head Latent Attention (MLA), which compresses the key-value cache to cut inference memory

- Sparse expert activation, so per-token compute is far below that of a dense model of the same size

- MIT licensing for commercial and research use

Use Case Suitability

DeepSeek-R1-0528 excels in scenarios requiring systematic problem-solving:

- Mathematical computations and proofs

- Code debugging and optimization

- Scientific research assistance

- Educational content creation

- Technical documentation writing

Llama 4 Maverick Overview

Background and Development

Meta AI released Llama 4 Maverick on April 5, 2025, alongside the smaller Llama 4 Scout. Llama 4 is the first Llama generation to use a mixture-of-experts architecture and to accept images as well as text natively. “Maverick” is the name of the larger of the two released models rather than a label for experimental features.

This model builds upon Meta’s extensive research in large language models, and Meta positions Llama 4 Maverick as a versatile, general-purpose model for assistant and chat use in both research and commercial applications.

Architecture and Technical Specifications

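Llama 4 Maverick features several architectural innovations:

- 400 billion total parameters, with 17 billion active per token (128 routed experts plus a shared expert)

- Up to 1M token context length for extensive document analysis

- Interleaved attention layers, some without positional embeddings (Meta calls this design iRoPE), aimed at better long-range dependencies

- Native multimodality, accepting text and image input

- Pretraining on 200 languages (over 100 of them with more than a billion tokens each), with 12 languages officially supported

- Llama 4 Community License with specific usage terms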
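Both models are mixture-of-experts (MoE) transformers: a small router decides which expert feed-forward networks process each token, so only a fraction of the weights does work per token. DeepSeek’s published V3 architecture, which R1-0528 inherits, activates 8 of 256 routed experts plus one shared expert; Meta describes Maverick as sending each token to a shared expert and one of 128 routed experts. The sketch below is a generic top-k router in plain Python, not either model’s actual implementation. The softmax gate, the toy experts, and all function names are illustrative assumptions (DeepSeek-V3, for example, scores experts with a sigmoid rather than a softmax).

```python
import math
from typing import Callable, List, Sequence

# An "expert" here is any function mapping a hidden vector to a hidden vector.
Expert = Callable[[List[float]], List[float]]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits: Sequence[float], k: int) -> List[int]:
    """Indices of the k experts with the highest router scores, best first."""
    order = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    return order[:k]


def moe_layer(
    x: List[float],
    router_logits: Sequence[float],
    routed_experts: Sequence[Expert],
    shared_expert: Expert,
    k: int,
) -> List[float]:
    """Combine the shared expert with the top-k routed experts for one token."""
    chosen = top_k_route(router_logits, k)
    # Gate weights are normalized over the chosen experts only.
    gates = softmax([router_logits[i] for i in chosen])
    out = list(shared_expert(x))  # the shared expert processes every token
    for gate, idx in zip(gates, chosen):
        y = routed_experts[idx](x)  # only the selected experts run
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out


# Toy setup: expert i scales its input by (i + 1); the shared expert is the identity.
experts = [lambda v, c=i + 1: [c * e for e in v] for i in range(4)]
identity: Expert = lambda v: list(v)

token = [1.0, 2.0]
logits = [0.5, 3.0, -1.0, 1.0]
# k=1 mirrors the "shared expert + one routed expert" pattern.
print(moe_layer(token, logits, experts, identity, k=1))  # [3.0, 6.0]
```

Raising `k` lets more experts contribute to each token at the cost of more compute, which is the core trade-off MoE designs tune.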

Use Case Suitability

Llama 4 Maverick demonstrates particular strength in:

- Long-form content generation

- Research paper analysis

- Multilingual translation and communication

- Creative writing and storytelling

- Complex document summarization

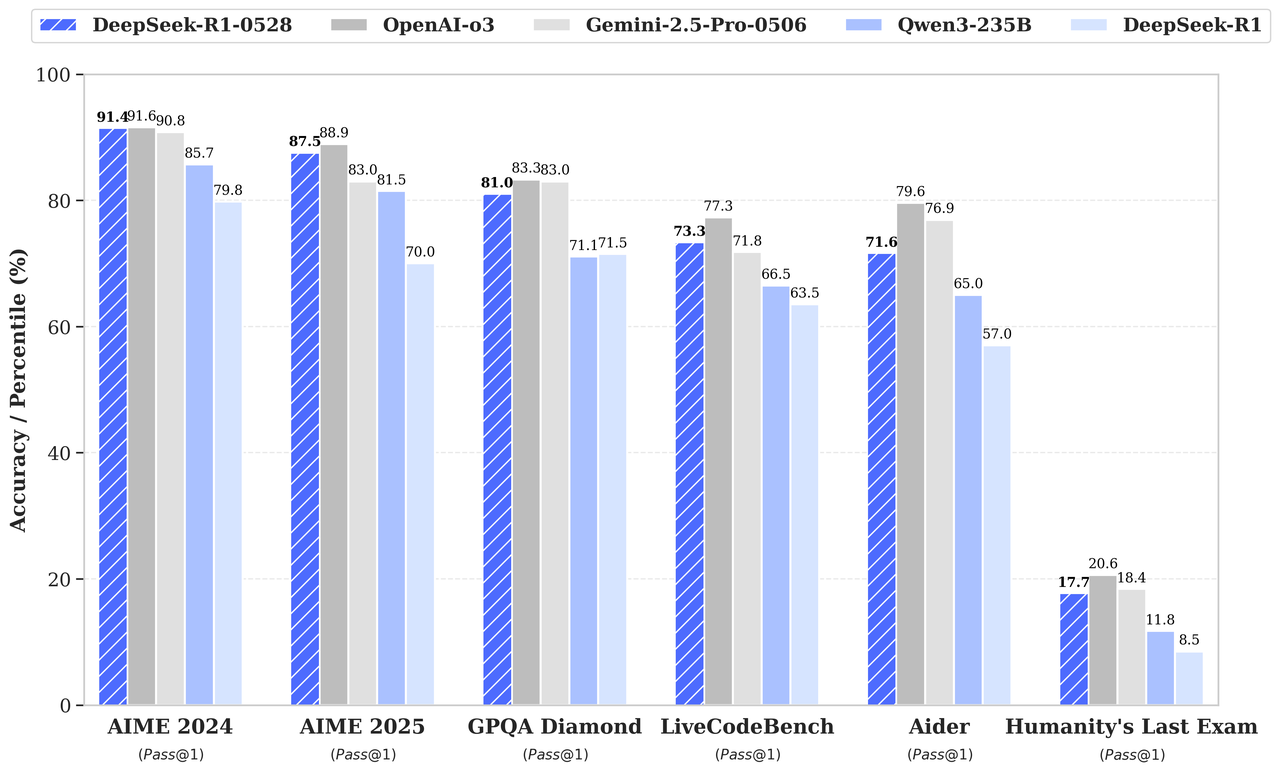

Benchmark Performance Analysis

MMLU (Massive Multitask Language Understanding)

DeepSeek-R1-0528 Performance:

- Overall MMLU Score: 84.2%

- Mathematics: 88.7%

- Computer Science: 86.1%

- Physics: 83.9%

- History: 79.4%

Llama 4 Maverick Performance:

- Overall MMLU Score: 86.8%

- Mathematics: 82.3%

- Computer Science: 85.7%

- Physics: 84.2%

- History: 89.1%

Key Insight: Llama 4 Maverick shows stronger overall performance, while DeepSeek-R1-0528 excels specifically in mathematical reasoning tasks.

GSM8K (Mathematical Problem Solving)

- DeepSeek-R1-0528: 92.4% accuracy

- Llama 4 Maverick: 87.6% accuracy

DeepSeek-R1-0528 demonstrates superior mathematical problem-solving capabilities, reflecting its reasoning-focused reinforcement learning training.

HumanEval (Code Generation)

- DeepSeek-R1-0528: 78.3% pass rate

- Llama 4 Maverick: 74.1% pass rate

Both models show strong coding capabilities, with DeepSeek-R1-0528 having a slight edge in generating correct code solutions.
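HumanEval results are usually reported as pass@k: the probability that at least one of k generated solutions passes the problem’s unit tests. The figures above do not state k or the sampling settings; reading them as pass@1 is the most common convention but is an assumption here. With one greedy sample per problem, pass@1 is simply the fraction of problems solved. A minimal sketch of the unbiased estimator from OpenAI’s Codex paper (Chen et al., 2021), which generates n ≥ k samples per problem and counts the c that pass; the function names and sample results are illustrative.

```python
from math import comb
from typing import Iterable, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem.

    n: samples generated, c: samples that passed the tests, k: sample budget.
    """
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every k-sample subset contains at least one passing sample
    # 1 - P(all k drawn samples fail), drawing without replacement
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: Iterable[Tuple[int, int]], k: int) -> float:
    """Mean pass@k over (n, c) pairs, one pair per benchmark problem."""
    scores = [pass_at_k(n, c, k) for n, c in results]
    return sum(scores) / len(scores)


# Hypothetical results for three problems: (samples generated, samples passing).
results = [(10, 3), (10, 10), (10, 0)]
print(round(benchmark_pass_at_k(results, k=1), 3))  # 0.433
print(round(benchmark_pass_at_k(results, k=5), 3))  # 0.639
```

Because scores depend on k and on sampling settings, two pass rates are only comparable when both were measured the same way.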

HellaSwag (Commonsense Reasoning)

- DeepSeek-R1-0528: 81.7% accuracy

- Llama 4 Maverick: 85.3% accuracy

Llama 4 Maverick demonstrates better performance in commonsense reasoning scenarios.

TruthfulQA (Truthfulness Assessment)

- DeepSeek-R1-0528: 76.8% truthful responses

- Llama 4 Maverick: 79.2% truthful responses

Llama 4 Maverick shows marginally better performance in providing truthful, accurate information.

Use Case Scenarios Comparison

Chatbot Development

DeepSeek-R1-0528:

- Excellent for technical support chatbots

- Strong performance in educational applications

- Ideal for STEM-focused conversational AI

- Detailed, step-by-step answers, though its reasoning phase adds response latency

Llama 4 Maverick:

- Better for general-purpose conversational AI

- Superior multilingual support

- Stronger performance in creative conversations

- Enhanced context retention for long conversations
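If you self-host the open weights, DeepSeek-R1-0528 writes its reasoning trace between `<think>` and `</think>` tags before the final answer, and a chatbot usually shows users only the answer. A minimal sketch of splitting the two, assuming a single reasoning block and tolerating a missing opening tag (a chat template can place `<think>` in the prompt, in which case it never appears in the generated text). `split_reasoning` is a hypothetical helper, not part of any DeepSeek library.

```python
from typing import Tuple

OPEN_TAG = "<think>"
CLOSE_TAG = "</think>"


def split_reasoning(raw: str) -> Tuple[str, str]:
    """Split raw model output into (reasoning, answer).

    Assumes at most one reasoning block; everything after the last
    closing tag is treated as the user-facing answer.
    """
    end = raw.rfind(CLOSE_TAG)
    if end == -1:
        return "", raw.strip()  # no reasoning trace present
    head, answer = raw[:end], raw[end + len(CLOSE_TAG):]
    start = head.find(OPEN_TAG)
    # If the opening tag was part of the prompt, the whole head is reasoning.
    reasoning = head[start + len(OPEN_TAG):] if start != -1 else head
    return reasoning.strip(), answer.strip()


raw_output = "<think>\nThe user wants 17 * 3. 17 * 3 = 51.\n</think>\n\n17 * 3 = 51."
reasoning, answer = split_reasoning(raw_output)
print(answer)  # 17 * 3 = 51.
```

Keeping the reasoning string separately is still useful for logging and debugging, even when users never see it.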

Coding Assistant Applications

DeepSeek-R1-0528:

- Superior code debugging capabilities

- Excellent mathematical algorithm implementation

- Strong performance in code optimization tasks

- Better at explaining complex technical concepts

Llama 4 Maverick:

- More versatile across programming languages

- Better documentation generation

- Superior code commenting and explanation

- Enhanced integration with development workflows

Content Creation and Summarization

For reference, the table below collects published benchmark and pricing figures for Llama 4 Maverick, DeepSeek R1 0528, and three reference models. DeepSeek R1 0528 figures are approximate and refer to the full 671B model; a dash means no result was reported. Costs are in US dollars per 1M tokens, blending input and output prices at a 3:1 ratio.

| Category | Benchmark | Llama 4 Maverick | Gemini 2.0 Flash | DeepSeek v3.1 | DeepSeek R1 0528 | GPT-4o |
|---|---|---|---|---|---|---|
| Cost | Inference cost (per 1M input & output tokens, 3:1 blended) | $0.19-$0.49 | $0.17 | $0.48 | ~$0.96 ($0.55 in / $2.19 out) | $4.38 |
| Image Reasoning | MMMU | 73.4 | 71.7 | No multimodal support | No multimodal support | 69.1 |
| | MathVista | 73.7 | 73.1 | No multimodal support | No multimodal support | 63.8 |
| Image Understanding | ChartQA | 90.0 | 88.3 | No multimodal support | No multimodal support | 85.7 |
| | DocVQA (test) | 94.4 | – | No multimodal support | No multimodal support | 92.8 |
| Coding | LiveCodeBench (10/01/2024-02/01/2025) | 43.4 | 34.5 | 45.8/49.2 | ~73.3 (Pass@1) | 32.3 |
| | Codeforces (Rating) | – | – | 1134 | ~1930 | 759 |
| | SWE-bench Verified (Resolved) | – | – | 42.0 | ~57.6 | 38.8 |
| Reasoning & Knowledge | MMLU Pro | 80.5 | 77.6 | 81.2 | ~85.0 | – |
| | GPQA Diamond | 69.8 | 60.1 | 68.4 | ~81.0 | 53.6 |
| Multilingual | Multilingual MMLU | 84.6 | – | – | Not specified (R1 is optimized for English/Chinese) | 81.5 |
| Long Context | MTOB (half book) eng->kgv/kgv->eng | 54.0/46.4 | 48.4/39.8 | Context window is 128K | Context window is 128K | Context window is 128K |
| | MTOB (full book) eng->kgv/kgv->eng | 50.8/46.7 | 45.5/39.6 | Context window is 128K | Context window is 128K | Context window is 128K |
| Math | AIME 2025 (Pass@1) | – | – | – | ~87.5 | – |
| | MATH-500 (Pass@1) | – | – | 90.2 | ~97.3 | 74.6 |
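The Cost row blends input and output prices at a 3:1 token ratio. A small sketch of that arithmetic: plugging in list prices of $0.55 per million input tokens and $2.19 per million output tokens reproduces the ~$0.96 shown for DeepSeek R1 0528. The helper names are illustrative, and real bills also depend on caching discounts and on how many reasoning tokens the model emits, since those are billed as output.

```python
def blended_price(input_per_m: float, output_per_m: float,
                  input_share: float = 3.0, output_share: float = 1.0) -> float:
    """Weighted average price per 1M tokens for a given input:output token ratio."""
    total = input_share + output_share
    return (input_share * input_per_m + output_share * output_per_m) / total


def monthly_cost(price_per_m: float, tokens_per_month: int) -> float:
    """Dollar cost for a monthly token volume at a blended per-1M-token price."""
    return price_per_m * tokens_per_month / 1_000_000


# 3:1 blend, the convention used in the cost comparison.
print(f"{blended_price(0.55, 2.19):.2f}")  # 0.96
# Long reasoning traces push the mix toward output tokens; a 1:1 mix costs more.
print(f"{blended_price(0.55, 2.19, 1, 1):.2f}")  # 1.37
print(f"{monthly_cost(0.96, 50_000_000):.2f}")  # 48.00
```

For a reasoning model, measuring your real input:output ratio gives a more honest estimate than the standard 3:1 blend.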

DeepSeek-R1-0528:

- Excels at technical writing

- Superior performance in research paper summarization

- Strong analytical content creation

- Excellent for educational material development

Llama 4 Maverick:

- Better creative writing capabilities

- Superior long-form content generation

- Enhanced multilingual content creation

- Stronger performance in marketing and social content

Long-Context Analysis

DeepSeek-R1-0528:

- Effective for technical document analysis

- Strong performance within its 128K context window

- Excellent for scientific literature review

- Good for legal document analysis

Llama 4 Maverick:

- Larger context window, at up to 1M tokens

- Better for comprehensive document analysis

- Enhanced research paper processing

- Excellent for multi-document synthesis
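Whether a document fits depends on its length in tokens, not words or pages, and on how much room you leave for the reply. A rough sketch, assuming the common rule of thumb of about four characters per token for English text; real tokenizers differ by model and language, so count with the model’s own tokenizer when precision matters. For a reasoning model such as DeepSeek-R1-0528, the output reserve also has to cover the reasoning trace. Function names and default values are illustrative.

```python
import math


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token count from character length (heuristic, not a tokenizer)."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(text: str, context_window: int,
                    reserved_for_output: int = 4_096,
                    chars_per_token: float = 4.0) -> bool:
    """True if the prompt estimate plus the output reserve fits the window."""
    needed = estimate_tokens(text, chars_per_token) + reserved_for_output
    return needed <= context_window


document = "x" * 400_000  # stand-in for a ~400K-character report
print(estimate_tokens(document))           # 100000
print(fits_in_context(document, 131_072))  # True
print(fits_in_context(document, 32_768))   # False
# A 32K-token reasoning budget no longer fits in a 128K window.
print(fits_in_context(document, 131_072, reserved_for_output=32_768))  # False
```

Hosted providers sometimes cap the context below a model’s advertised maximum, so pass the limit of the deployment you actually use.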

Developer and Community Opinions

Developer Feedback on DeepSeek-R1-0528

Reddit and GitHub communities consistently praise DeepSeek-R1-0528 for:

- Low hosted API pricing that makes it practical for production use

- Exceptional mathematical reasoning that rivals leading proprietary models

- Permissive MIT licensing enabling commercial deployment

- Distilled variants, such as DeepSeek-R1-0528-Qwen3-8B, that run on modest hardware

Common developer concerns include:

- Limited context window compared to competitors

- Slightly weaker performance in creative tasks

- Smaller community compared to Llama ecosystem

Developer Feedback on Llama 4 Maverick

The open-source community highlights Llama 4 Maverick’s:

- Extensive context window enabling complex analysis tasks

- Strong multilingual capabilities for global applications

- Versatile performance across diverse use cases

- Active community support with extensive documentation

Developer challenges mentioned:

- Custom licensing restrictions for some commercial uses

- High memory requirements, since all 400B parameters must be loaded even though only 17B are active per token

- Occasional inconsistency in specialized technical tasks

Community Trust and Adoption

Both models enjoy strong community support, with DeepSeek-R1-0528 gaining traction in technical communities and Llama 4 Maverick maintaining broader adoption across diverse applications.

Final Verdict: Choosing the Right Model

Choose DeepSeek-R1-0528 if you need:

- Superior mathematical and logical reasoning

- Strong coding and algorithm implementation

- Specialized STEM task performance

- Permissive MIT licensing for commercial use

- Distilled variants for deployment on limited hardware

Choose Llama 4 Maverick if you need:

- Extensive context window (up to 1M tokens)

- Strong multilingual capabilities

- Versatile performance across diverse tasks

- Creative content generation

- Long-document analysis and synthesis

The Bottom Line

For developers building technical applications, educational tools, or mathematical reasoning systems, DeepSeek-R1-0528 offers superior specialized performance on reasoning-heavy tasks.

For researchers, content creators, or applications requiring extensive context understanding and multilingual support, Llama 4 Maverick provides better overall versatility and capability.

Both models represent excellent choices in the open-source LLM landscape, with your specific use case determining the optimal selection.

Frequently Asked Questions

Is DeepSeek-R1-0528 better than Llama 4 Maverick for coding tasks?

DeepSeek-R1-0528 shows superior performance in mathematical coding tasks and algorithm implementation, with a 78.3% pass rate on HumanEval compared to Llama 4 Maverick’s 74.1%. However, Llama 4 Maverick offers better versatility across different programming languages and documentation generation.

Which model has better licensing for commercial use?

DeepSeek-R1-0528 uses the MIT license, which grants broad commercial usage rights. Llama 4 Maverick uses the Llama 4 Community License, which permits commercial use but adds conditions, such as requiring a separate license from Meta for products with more than 700 million monthly active users. Review Meta’s licensing terms for your specific application.

Can Llama 4 Maverick run on mobile devices?

Neither full model is practical on mobile devices. Both are mixture-of-experts models whose weights must all be held in memory (about 400B parameters for Llama 4 Maverick and 671B for DeepSeek-R1-0528), so they typically run on multi-GPU servers or through hosted APIs. For local or edge use, DeepSeek’s distilled DeepSeek-R1-0528-Qwen3-8B is a more realistic option.
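A back-of-the-envelope check makes the hardware question concrete. In a mixture-of-experts model only some experts run for each token, but all of them must stay in memory, so memory scales with total parameters. The sketch below estimates weight memory only, assuming the published totals of about 671B parameters for DeepSeek-R1-0528 and 400B for Llama 4 Maverick, plus roughly 8B for the distilled DeepSeek-R1-0528-Qwen3-8B. KV cache, activations, and runtime overhead come on top, and the precision table is an assumption covering common formats.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "int4": 0.5}


def weight_memory_gb(total_params: float, precision: str) -> float:
    """Memory for the weights alone, in GB (10**9 bytes)."""
    return total_params * BYTES_PER_PARAM[precision] / 1e9


models = {
    "DeepSeek-R1-0528 (671B total)": 671e9,
    "Llama 4 Maverick (400B total)": 400e9,
    "DeepSeek-R1-0528-Qwen3-8B (about 8B)": 8e9,
}
for name, params in models.items():
    for precision in ("bf16", "fp8", "int4"):
        print(f"{name:40s} {precision:5s} {weight_memory_gb(params, precision):7.1f} GB")
```

Even at 4-bit precision the full models need hundreds of gigabytes, while the distilled 8B model drops to a few gigabytes.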

Which model is faster for real-time applications?

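Usually Llama 4 Maverick. DeepSeek-R1-0528 is a reasoning model that generates a thinking trace before its final answer, which adds noticeable latency, and it activates about 37B parameters per token versus 17B for Maverick. DeepSeek-R1-0528 fits real-time applications such as interactive coding assistants best when answer quality on hard problems matters more than response speed.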
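End-to-end latency is roughly time to first token plus generated tokens divided by decoding speed, and a reasoning model’s thinking trace counts as generated tokens. The numbers below are hypothetical, chosen only to show the effect: the same decoding speed and a 300-token answer, with and without a 2,000-token reasoning trace. The function name is illustrative; measure your own deployment before deciding.

```python
def response_latency_s(ttft_s: float, output_tokens: int, tokens_per_s: float) -> float:
    """Approximate seconds until the full response has been generated."""
    return ttft_s + output_tokens / tokens_per_s


# Hypothetical figures for illustration only.
ANSWER_TOKENS = 300
REASONING_TOKENS = 2_000
TTFT_S = 0.5
DECODE_TOKENS_PER_S = 50.0

direct = response_latency_s(TTFT_S, ANSWER_TOKENS, DECODE_TOKENS_PER_S)
with_reasoning = response_latency_s(
    TTFT_S, ANSWER_TOKENS + REASONING_TOKENS, DECODE_TOKENS_PER_S
)
print(f"direct answer:  {direct:.1f} s")          # 6.5 s
print(f"with reasoning: {with_reasoning:.1f} s")  # 46.5 s
```

Streaming the reasoning trace can keep an interface feeling responsive, but the final answer still arrives later.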

How do these models compare in multilingual capabilities?

Llama 4 Maverick was pretrained on 200 languages and officially supports 12, including English, Arabic, French, German, Hindi, and Spanish. DeepSeek-R1-0528 is optimized primarily for English and Chinese, making Llama 4 Maverick the better choice for global applications requiring extensive multilingual support.

Also Read:

Grok-3 vs Claude 3.7 Sonnet – Which Thinks Smarter? Complete 2025 Comparison.

GPT 4.5 vs GPT 4.1 – Which Powers Coding Best?

Llama 3.1 70B vs Llama 3.3 70B – Which Meta Model Performs Better?