Compare DeepSeek R1 and GPT-3.5 Turbo on reasoning, coding, and cost, using 2025 benchmark data to see which model suits developers and businesses.

Choosing the right AI model can make or break a project, whether you’re a developer building new applications or a business optimizing workflows. DeepSeek R1 vs GPT-3.5 Turbo pits an open-weight model that is free to download against a paid, industry-standard API. DeepSeek R1, developed by the Chinese AI startup DeepSeek, offers advanced reasoning and coding capabilities, and its weights cost nothing to use. OpenAI’s GPT-3.5 Turbo, by contrast, offers proven reliability at a per-token price. This comparison examines how the two differ in reasoning, coding, and cost to help you decide which model fits your needs.

Quick Comparison Table

| Model | Parameters | Context Window | Release Date | Best For |
|---|---|---|---|---|
| DeepSeek R1 | 671B total (37B active) | 128K tokens | January 2025 | Reasoning, coding, budget-conscious projects |
| GPT-3.5 Turbo | Undisclosed (est. 175B) | 16K tokens (16,385) | March 2023 (0125 snapshot: January 2024) | General NLP, chatbots, managed reliability |

Model Overviews: DeepSeek R1 vs GPT-3.5 Turbo

DeepSeek R1

DeepSeek released R1 in January 2025. It is a Mixture-of-Experts (MoE) model with 671 billion total parameters, and it activates about 37 billion of them per token for efficiency. Its training emphasizes reasoning. DeepSeek combined large-scale reinforcement learning (RL) with supervised fine-tuning (SFT), which produces behaviors such as self-verification and long chain-of-thought (CoT) reasoning. The weights are open-sourced under the MIT license and published on Hugging Face. DeepSeek also serves the model through its pay-per-token API. This makes R1 attractive to developers who want high performance at low cost. Its 128K-token context window supports complex tasks, and distilled variants (1.5B to 70B parameters) suit a range of hardware.
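DeepSeek R1 activates roughly 37B of its 671B parameters per token (about 5.5%). It can do this because a learned router sends each token to only a few expert sub-networks. The toy sketch below illustrates generic top-k routing. The expert count, the value of k and the scaling "experts" are illustrative assumptions. This is not DeepSeek’s production router, which also uses shared experts and its own load-balancing scheme.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of router scores."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(router_logits, k):
    """Select the k highest-scoring experts and renormalize their gate weights."""
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    return {i: probs[i] / norm for i in chosen}

def moe_forward(x, experts, router_logits, k=2):
    """Combine only the selected experts' outputs, weighted by their gates."""
    gates = top_k_route(router_logits, k)
    return sum(w * experts[i](x) for i, w in gates.items())

# Toy setup (hypothetical): 8 "experts" that simply scale their input.
experts = [lambda x, s=s: s * x for s in range(8)]
logits = [0.1, 0.3, 5.0, 5.0, -1.0, 0.0, 0.2, -0.5]

print(top_k_route(logits, k=2))              # only experts 2 and 3 get weight
print(moe_forward(1.0, experts, logits, 2))  # 2.5
print(f"R1 active fraction: {37 / 671:.1%}")
```

Only the selected experts run for a given token. This is why per-token compute tracks the active parameter count rather than the total.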

GPT-3.5 Turbo

GPT-3.5 Turbo is an evolution of OpenAI’s GPT-3 family, optimized for natural language processing (NLP) and chat. Its parameter count is undisclosed but commonly estimated at 175 billion. It was first released through the API in March 2023. Current versions have a 16K-token (16,385) context window and are available through OpenAI’s API and the Azure OpenAI Service. Known for its versatility, GPT-3.5 Turbo is widely used for chatbots, content generation, and code assistance. As a paid, managed service, it receives ongoing updates and support, which appeals to businesses that prioritize reliability over cost. The gpt-3.5-turbo-0125 snapshot, released in January 2024, improved accuracy when responding in requested formats. It also fixed a text-encoding bug that affected non-English function calls.

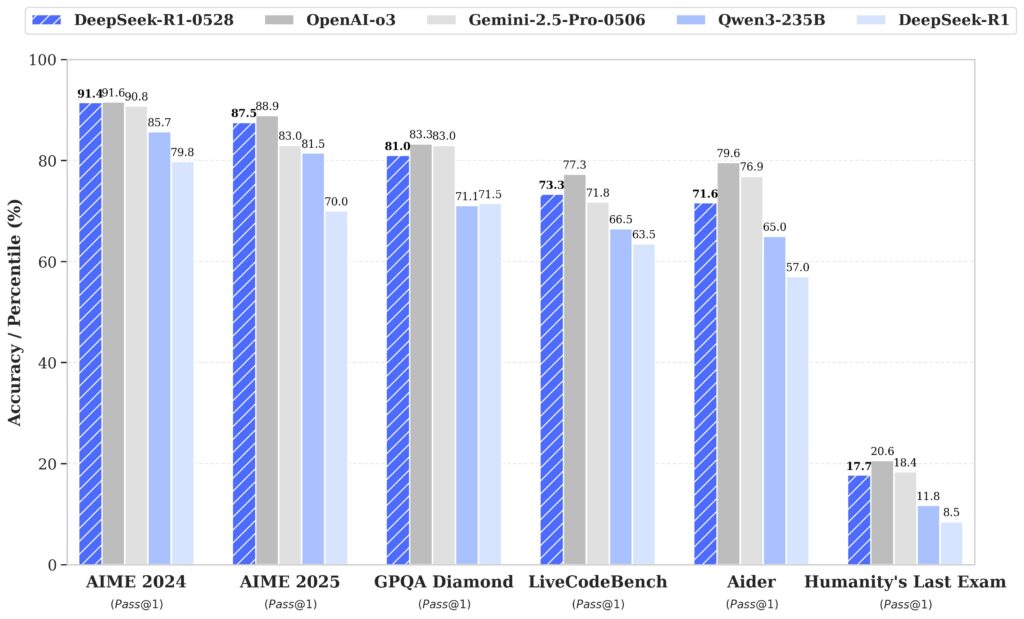

Benchmark Comparison: DeepSeek R1 vs GPT-3.5 Turbo

MMLU (Massive Multitask Language Understanding)

MMLU tests knowledge across 57 subjects, assessing general reasoning and academic proficiency.

- DeepSeek R1: 90.8% Pass@1 (Source)

- GPT-3.5 Turbo: 70% (5-shot) (Source)

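DeepSeek R1’s higher score suggests stronger general knowledge and reasoning, likely helped by its RL-focused training. The two figures were measured differently (Pass@1 for DeepSeek R1, 5-shot prompting for GPT-3.5 Turbo), so the gap is a rough guide rather than a controlled comparison.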
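Pass@1 is commonly computed by sampling several answers per question and averaging how often they are correct. The sketch below uses the unbiased pass@k estimator introduced alongside the HumanEval benchmark. For k = 1 it reduces to the fraction of correct samples. The sample counts in the example are hypothetical.

```python
from math import comb

def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for a single problem.

    n: samples drawn, c: samples judged correct, k: attempts allowed.
    Returns the probability that at least one of k samples, chosen at
    random from the n drawn, is correct.
    """
    if n - c < k:
        return 1.0  # every size-k subset contains a correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)

def benchmark_score(results, k=1):
    """Average pass@k over problems; results is a list of (n, c) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)

# Hypothetical run: 3 questions, 4 sampled answers each.
results = [(4, 4), (4, 2), (4, 0)]
print(benchmark_score(results, k=1))  # 0.5
```

By contrast, a 5-shot score usually reflects a single answer per question, produced after five solved examples in the prompt.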

MMLU-Pro

MMLU-Pro evaluates advanced reasoning in professional and academic contexts.

- DeepSeek R1: 84% Exact Match (Source)

- GPT-3.5 Turbo: Data not available

DeepSeek R1’s high score indicates its capability in handling complex, specialized reasoning tasks, while GPT-3.5 Turbo lacks comparable data.
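An exact-match score depends on pulling the chosen option out of free-form model output and comparing it with the answer key. Below is a minimal sketch. It assumes the model was prompted to end with "The answer is (X)". Its regex is a simplified assumption, not the official MMLU-Pro harness.

```python
import re
from typing import List, Optional

# Simplified pattern (assumption): MMLU-Pro questions have up to ten
# options, labeled A through J.
ANSWER_RE = re.compile(r"answer is \(?([A-J])\)?")

def extract_choice(response: str) -> Optional[str]:
    """Return the last option letter the model committed to, or None."""
    matches = ANSWER_RE.findall(response)
    return matches[-1] if matches else None

def exact_match_accuracy(responses: List[str], gold: List[str]) -> float:
    """Fraction of responses whose extracted letter equals the gold letter."""
    hits = sum(extract_choice(r) == g for r, g in zip(responses, gold))
    return hits / len(gold)

responses = [
    "Option C fits the data, so the answer is (C).",
    "The answer is B",
    "I cannot decide.",
]
print(exact_match_accuracy(responses, ["C", "B", "A"]))
```

A response with no parseable answer counts as wrong. This is one reason answer-format instructions can move exact-match scores.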

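GPQA Diamond (Graduate-Level Google-Proof Q&A)

GPQA measures reasoning on graduate-level biology, physics, and chemistry questions designed to resist simple web searches. Diamond is its highest-quality subset.

- DeepSeek R1: 71.5% Pass@1 on GPQA Diamond (Source)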

- GPT-3.5 Turbo: Data not available

DeepSeek R1’s performance underscores its strength in advanced reasoning, with no direct comparison for GPT-3.5 Turbo.

MATH

MATH evaluates mathematical problem-solving.

- DeepSeek R1: 97.3% accuracy on MATH-500 (Source)

- GPT-3.5 Turbo: 43.1% (0-shot) (Source)

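DeepSeek R1 significantly outperforms GPT-3.5 Turbo in mathematical reasoning, which reflects its specialized training. Note that DeepSeek R1’s figure is on MATH-500, a 500-problem subset of the MATH test set. The two scores also come from different evaluation setups, so treat the gap as indicative rather than exact.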
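Scoring on MATH-style benchmarks typically hinges on extracting the model’s final answer and comparing it with the reference. By convention the answer is written inside `\boxed{...}`. The sketch below finds the last boxed answer, handling nested braces such as `\frac{1}{2}`. The whitespace-insensitive string comparison is a simplifying assumption. Real graders also check mathematical equivalence (for example, 0.5 versus 1/2).

```python
from typing import Optional

def last_boxed(text: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...}, handling nested braces."""
    start = text.rfind("\\boxed{")
    if start == -1:
        return None
    i = start + len("\\boxed{")
    depth = 1
    out = []
    while i < len(text):
        ch = text[i]
        if ch == "{":
            depth += 1
        elif ch == "}":
            depth -= 1
            if depth == 0:
                return "".join(out)  # matching close brace found
        out.append(ch)
        i += 1
    return None  # unbalanced braces

def is_correct(response: str, gold: str) -> bool:
    """Naive check (assumption): compare answers with whitespace removed."""
    answer = last_boxed(response)
    return answer is not None and answer.replace(" ", "") == gold.replace(" ", "")

print(last_boxed(r"Halving gives x = \boxed{\frac{1}{2}}."))
print(is_correct(r"The total is \boxed{ 42 }", "42"))  # True
```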

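Coding (Codeforces and HumanEval)

HumanEval tests whether generated Python functions pass unit tests. Codeforces measures performance on competitive-programming problems.

- DeepSeek R1: 96.3 percentile on Codeforces (Source). DeepSeek’s published R1 results report this figure as a Codeforces percentile, not a HumanEval pass rate.

- GPT-3.5 Turbo: The cited sources contain no directly comparable coding figure for gpt-3.5-turbo-0125.

DeepSeek R1’s Codeforces result suggests robust coding capabilities. Without a shared benchmark, however, a direct comparison with GPT-3.5 Turbo is limited.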
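HumanEval-style benchmarks score functional correctness: generated code counts only if it passes the task’s unit tests. Below is a minimal sketch. It assumes a HumanEval-like layout, where the test code defines `check(candidate)` and each task names an entry-point function. The helper name `passes_tests` is hypothetical.

```python
def passes_tests(candidate_src: str, test_src: str, entry_point: str) -> bool:
    """Run model-generated code, then its tests; True if every assert passes.

    WARNING: exec() runs untrusted code in this process. Real evaluation
    harnesses isolate it in a sandboxed subprocess with time limits.
    """
    namespace = {}
    try:
        exec(candidate_src, namespace)  # defines the generated function
        exec(test_src, namespace)       # defines check(candidate)
        namespace["check"](namespace[entry_point])
        return True
    except Exception:  # syntax errors, failed asserts, runtime errors
        return False

generated = "def add(a, b):\n    return a + b\n"
tests = (
    "def check(candidate):\n"
    "    assert candidate(2, 3) == 5\n"
    "    assert candidate(-1, 1) == 0\n"
)
print(passes_tests(generated, tests, "add"))  # True
```

A benchmark score is then the share of tasks that pass, often reported with the pass@k estimator when several samples are drawn per task.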

Use Case Breakdown: DeepSeek R1 vs GPT-3.5 Turbo

GPT-3.5 Turbo API pricing (USD per 1 million tokens)

| Model | Input | Output |
|---|---|---|
| gpt-3.5-turbo-1106 | $1.00 | $2.00 |
| gpt-3.5-turbo-0125 | $0.50 | $1.50 |
| gpt-3.5-turbo-instruct | $1.50 | $2.00 |
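Per-token pricing makes cost a simple function of traffic. The sketch below turns the GPT-3.5 Turbo pricing table into a monthly estimate. The workload numbers are hypothetical, and `cost_usd` is an illustrative helper, not part of any SDK.

```python
# USD per 1M tokens as (input, output), taken from the GPT-3.5 Turbo pricing table.
PRICES_PER_MILLION = {
    "gpt-3.5-turbo-1106": (1.00, 2.00),
    "gpt-3.5-turbo-0125": (0.50, 1.50),
    "gpt-3.5-turbo-instruct": (1.50, 2.00),
}

def cost_usd(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated bill for a given number of input and output tokens."""
    in_rate, out_rate = PRICES_PER_MILLION[model]
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000

# Hypothetical workload: 10,000 requests, 800 input + 300 output tokens each.
requests, tokens_in, tokens_out = 10_000, 800, 300
for model in PRICES_PER_MILLION:
    total = cost_usd(model, requests * tokens_in, requests * tokens_out)
    print(f"{model}: ${total:.2f}")
```

For this workload the script prints $14.00, $8.50 and $18.00 for the three models, in table order. You can price DeepSeek’s hosted API the same way by adding its current per-token rates, which this article does not list.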

Chatbots

- DeepSeek R1: Its 128K-token context window supports extended conversations, and its reasoning focus helps keep responses coherent. Because the weights are open, it can be fine-tuned for niche chatbot applications.

- GPT-3.5 Turbo: Widely used in chatbots like ChatGPT, its 16.4K-token context window is sufficient for most conversational tasks. Its reliability makes it a go-to for businesses needing polished interactions.
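The context-window gap matters most in long conversations. Once the history exceeds the window, an application must drop or summarize older turns. The sketch below keeps the system message plus the most recent turns that fit a token budget. It estimates tokens at roughly four characters each. That heuristic is an assumption for English text, not either model’s real tokenizer. For exact counts, use the model’s own tokenizer.

```python
from typing import Dict, List

def estimate_tokens(text: str) -> int:
    # Rough heuristic (assumption): about 4 characters per token in English.
    return max(1, len(text) // 4)

def trim_history(messages: List[Dict[str, str]], budget_tokens: int) -> List[Dict[str, str]]:
    """Keep system messages plus the newest turns that fit within the budget."""
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    used = sum(estimate_tokens(m["content"]) for m in system)
    kept = []
    for msg in reversed(turns):  # walk from newest to oldest
        cost = estimate_tokens(msg["content"])
        if used + cost > budget_tokens:
            break  # stop at the first turn that no longer fits
        kept.append(msg)
        used += cost
    return system + kept[::-1]  # restore chronological order

# Hypothetical budgets that reserve ~1,000 tokens for the reply.
GPT35_BUDGET = 16_385 - 1_000
R1_BUDGET = 128_000 - 1_000  # approximate, from the 128K figure above

history = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "a" * 400},
    {"role": "assistant", "content": "b" * 400},
    {"role": "user", "content": "c" * 400},
]
print([m["role"] for m in trim_history(history, 210)])
```

With a 210-token budget, the oldest user turn is dropped. The same history fits untouched within either model’s real window.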

Coding

- DeepSeek R1: Excels in generating functional code, as demonstrated by creating a playable Tetris game in Python (Source). Its open-source flexibility suits developers customizing solutions.

- GPT-3.5 Turbo: Powers tools like GitHub Copilot, offering reliable code completion and debugging. Its established ecosystem benefits businesses with existing OpenAI integrations.

Document AI

- DeepSeek R1: Its reasoning capabilities make it suitable for analyzing complex documents, though specific use cases are less documented.

- GPT-3.5 Turbo: Proven in extracting information from scientific articles, with studies showing effectiveness for simple data extraction (Source).

Creative Writing

- DeepSeek R1: Creative writing is not the focus of its training, but its coherence still lets it produce usable creative text.

- GPT-3.5 Turbo: Strong in generating emails, marketing content, and creative text, leveraging its NLP strengths.

Real-World and Developer Feedback: DeepSeek R1 vs GPT-3.5 Turbo

DeepSeek R1

- Medium Review: “The transparency of the reasoning the LLM used to arrive at its answer is impressive” (Source).

- Writesonic Blog: Notes DeepSeek R1’s #1 spot on Apple’s App Store, highlighting its popularity (Source).

- Indian Express: Successfully generated a Tetris game, showcasing practical coding utility (Source).

- DigiAlps: Praises its local deployment flexibility, ideal for privacy-conscious developers (Source).
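The reasoning transparency that reviewers mention comes from DeepSeek R1 writing out its chain of thought before the final answer. When you run the open weights, that reasoning appears inside `<think>...</think>` tags. DeepSeek’s hosted API returns it in a separate `reasoning_content` field instead. The sketch below separates the two for locally generated output. It assumes a single think block. Some chat templates place the opening tag in the prompt, so the code tolerates a missing `<think>`. The helper name `split_reasoning` is hypothetical.

```python
from typing import Tuple

def split_reasoning(output: str) -> Tuple[str, str]:
    """Split R1-style output into (reasoning, final_answer).

    If no closing </think> tag is present, the whole output is treated
    as the answer and the reasoning is returned as an empty string.
    """
    head, sep, tail = output.partition("</think>")
    if not sep:
        return "", output.strip()
    reasoning = head.replace("<think>", "", 1).strip()
    return reasoning, tail.strip()

sample = "<think>\nThe user wants 17 * 3. 17 * 3 = 51.\n</think>\n\nThe answer is 51."
reasoning, answer = split_reasoning(sample)
print(reasoning)  # The user wants 17 * 3. 17 * 3 = 51.
print(answer)     # The answer is 51.
```

Keeping the two parts separate lets an application log or audit the reasoning while showing users only the answer.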

GPT-3.5 Turbo

- Reddit: Users found GPT-3.5 Turbo less detailed and accurate than GPT-4, particularly in formatting and content quality (Source).

- PMC Study: Effective for simple information extraction but requires human review for complex data (Source).

- TechTarget: Highlights its versatility. It also notes OpenAI’s report that GPT-4 was 40% more likely than GPT-3.5 to produce factual responses on internal evaluations (Source).

Final Verdict

DeepSeek R1 vs GPT-3.5 Turbo comes down to a trade-off between cost and ecosystem reliability. DeepSeek R1 is the better choice for developers and businesses that prioritize advanced reasoning, coding, and low-cost access. Its stronger benchmark scores (for example, 90.8% on MMLU and 97.3% on MATH-500) and open-weight flexibility make it well suited to innovative projects and budget-conscious teams. GPT-3.5 Turbo suits businesses that need reliable, plug-and-play NLP within OpenAI’s ecosystem. It costs $0.50 per 1M input tokens and $1.50 per 1M output tokens for gpt-3.5-turbo-0125. For startups and researchers, DeepSeek R1’s performance and free-to-download weights are hard to beat.

FAQ

Q: Is DeepSeek R1 completely free to use?

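A: The model weights are free. They are open-source under the MIT license, which permits free commercial and academic use (Source). DeepSeek’s hosted API, however, is billed per token. Self-hosting the full 671B-parameter model also requires substantial GPU hardware, though the smaller distilled variants are easier to run locally.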

Q: How does GPT-3.5 Turbo’s pricing work?

A: GPT-3.5 Turbo costs $0.50 per 1M input tokens and $1.50 per 1M output tokens for the gpt-3.5-turbo-0125 variant (Source).

Q: Which model is better for coding?

A: DeepSeek R1 shows stronger coding results (a 96.3 Codeforces percentile) and real-world success (e.g., a playable Tetris game). GPT-3.5 Turbo is reliable for code completion, but the cited sources contain no directly comparable benchmark figure for it.

Q: Can DeepSeek R1 handle large documents?

A: Yes, its 128K-token context window supports large documents, surpassing GPT-3.5 Turbo’s 16.4K tokens.

Q: Is GPT-3.5 Turbo suitable for non-English tasks?

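A: Yes. GPT-3.5 Turbo supports many languages, and the gpt-3.5-turbo-0125 snapshot fixed a text-encoding bug that affected non-English function calls (Source). That fix makes it more dependable for multilingual applications that rely on function calling.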

Sources:

- DeepSeek AI – DeepSeek R1 Official Repository

- LLMs Leaderboard by RankLLMS

- DeepSeek API Documentation

- Hugging Face – DeepSeek R1 Model