Explore DeepSeek R1 vs GPT-4 Turbo in 2025: Compare reasoning, coding, and cost for developers and businesses. Discover which AI model excels in benchmarks and value.

Introduction

In the fast-evolving AI landscape of 2025, choosing the right large language model (LLM) can make or break your project. DeepSeek R1 vs GPT-4 Turbo is a critical comparison for developers and businesses seeking the best balance of reasoning, coding prowess, and cost efficiency. DeepSeek R1, an open-source reasoning model, challenges OpenAI’s GPT-4 Turbo, a proprietary powerhouse known for speed and versatility. This article dives into their architectures, benchmark performance, real-world use cases, and costs to help you decide which model suits your needs. With 2025 benchmark data, you’ll get a clear picture of how these models stack up for technical and enterprise applications.

Why does this matter? Developers need tools that deliver accurate code and reasoning, while businesses prioritize scalability and cost. By comparing DeepSeek R1 and GPT-4 Turbo across MMLU, HumanEval, and other benchmarks, you’ll gain actionable insights to optimize your AI strategy.

Quick Comparison Table

| Model | Parameters | Context Window | Release Date | Best For |
|---|---|---|---|---|
| DeepSeek R1 | 671B (37B active) | 128K tokens | January 21, 2025 | Reasoning, coding, cost-sensitive applications |
| GPT-4 Turbo | Not disclosed | 128K tokens | December 2023 | Speed, multimodal tasks, enterprise integration |

Model Overviews

DeepSeek R1: The Reasoning Specialist

DeepSeek R1, launched by DeepSeek AI on January 21, 2025, is an open-source model designed for complex reasoning and coding tasks. Its Mixture-of-Experts (MoE) architecture has 671 billion parameters, of which only about 37 billion are active for each token, so the compute per token stays far below that of a dense model of the same size. Multi-Head Latent Attention (MLA) complements this by compressing the key-value cache, which lowers memory use during inference on tasks like mathematical problem-solving and code generation. DeepSeek R1’s training combines two stages of reinforcement learning (RL) and supervised fine-tuning (SFT), emphasizing chain-of-thought (CoT) reasoning for transparent, step-by-step logic.
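To make the idea of selective activation concrete, here is a minimal sketch of top-k expert routing, the general mechanism behind MoE layers. It is a generic textbook variant (softmax over the selected gate scores), not DeepSeek R1’s actual router; the expert count, `TOP_K`, and the random gate logits are toy assumptions chosen only for illustration. The last lines compute the active parameter share from the 37B and 671B figures quoted above.

```python
import math
import random

def top_k_route(gate_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    # Pick the k experts with the largest gate logits.
    top = sorted(range(len(gate_logits)),
                 key=lambda i: gate_logits[i], reverse=True)[:k]
    # Softmax over the selected logits only, so the weights sum to 1.
    peak = max(gate_logits[i] for i in top)
    exps = [math.exp(gate_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

# Toy sizes for illustration only; NOT DeepSeek R1's real expert counts.
NUM_EXPERTS = 8
TOP_K = 2

rng = random.Random(0)
logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
routes = top_k_route(logits, TOP_K)

# Share of parameters used per token, from the figures quoted in the article.
active_fraction = 37 / 671

print("selected experts and weights:", routes)
print(f"active parameter share: {active_fraction:.1%}")
```

Only the selected experts run for a given token, which is why total parameter count and per-token compute can diverge so sharply in an MoE model.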

Key features include:

- Exceptional performance in math and coding benchmarks

- Open-source accessibility via Hugging Face

- Cost-effective API pricing

- Improved performance in the R1-0528 update (May 2025), with reduced hallucinations and JSON support

DeepSeek R1’s focus on reasoning makes it ideal for developers tackling technical challenges and businesses seeking affordable AI solutions. However, it lacks multimodal capabilities, limiting its use in image or audio processing.

GPT-4 Turbo: The Speed and Versatility Champion

GPT-4 Turbo, released by OpenAI in December 2023, is a proprietary model optimized for speed and broad applicability. While OpenAI doesn’t disclose its parameter count, it’s built on a dense transformer architecture, supporting a 128K-token context window and up to 4,096 tokens per response. Its strengths lie in rapid response times (232ms latency) and robust enterprise integration, making it a go-to for real-time applications like chatbots and content creation.
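The two limits above interact: a long prompt leaves less room for the reply, and the reply is capped regardless. A minimal sketch of that budgeting follows, assuming that “128K” means 128,000 tokens and that prompt and response share the same context window. The function name `output_budget` is hypothetical, and counting tokens in a real prompt would need a tokenizer, which is out of scope here.

```python
CONTEXT_WINDOW = 128_000   # assumption: "128K" read as 128,000 tokens
MAX_OUTPUT_TOKENS = 4_096  # per-response cap quoted above

def output_budget(prompt_tokens, requested_output):
    """Return how many output tokens can be requested for a given prompt size."""
    if prompt_tokens < 0 or requested_output < 0:
        raise ValueError("token counts must be non-negative")
    remaining = CONTEXT_WINDOW - prompt_tokens
    if remaining <= 0:
        return 0  # the prompt alone already fills the window
    # The reply is limited by the request, the per-response cap,
    # and whatever space the prompt leaves in the window.
    return min(requested_output, MAX_OUTPUT_TOKENS, remaining)

print(output_budget(1_000, 8_000))
print(output_budget(127_000, 4_096))
```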

Key features include:

- High-speed processing for real-time applications

- Comprehensive API support via OpenAI and Azure

- Strong performance across general knowledge and conversational tasks

- Proven reliability for enterprise deployments

GPT-4 Turbo’s versatility suits businesses needing fast, scalable solutions, but its higher cost and slightly weaker reasoning performance relative to specialized models like DeepSeek R1 are notable drawbacks.

Benchmark Comparison

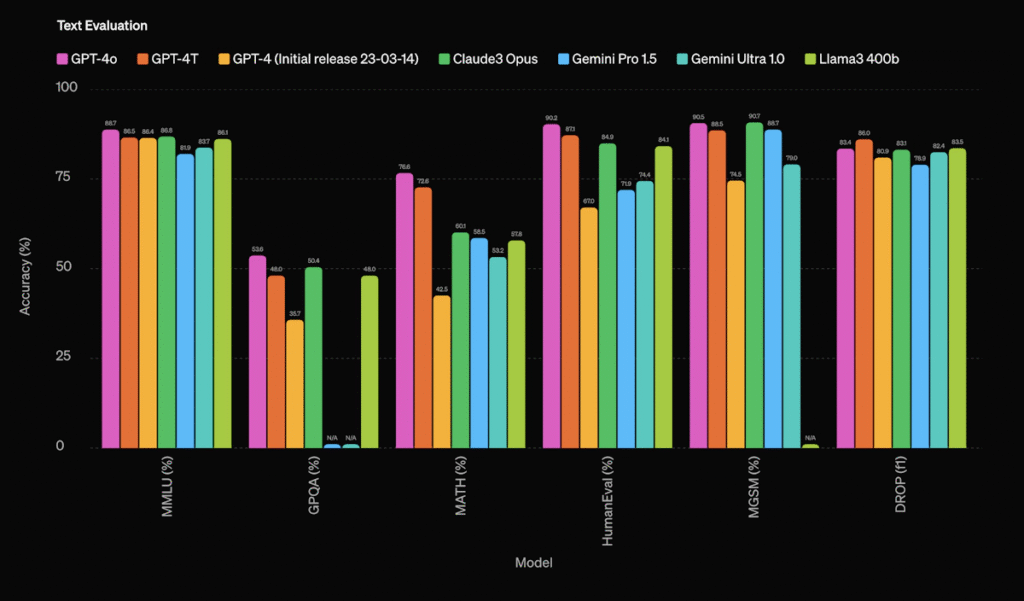

MMLU (Massive Multitask Language Understanding)

MMLU tests general knowledge across 57 subjects. DeepSeek R1 scores 88.75%, slightly outperforming GPT-4 Turbo’s 86.4%. DeepSeek’s edge comes from its reasoning-focused training, which helps most on STEM-related tasks. GPT-4 Turbo, while strong, prioritizes speed over deep reasoning, leading to a marginal gap in complex subjects.

HumanEval (Coding Proficiency)

HumanEval evaluates code generation by running each generated solution against unit tests. DeepSeek R1 achieves 90.2%, surpassing GPT-4 Turbo’s 85.6%. DeepSeek’s ability to optimize code for memory efficiency and logical structuring gives it an advantage in algorithm design and debugging. GPT-4 Turbo performs well but is stronger at code explanation than at generation.
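HumanEval results are usually reported as pass@k, the probability that at least one of k sampled solutions passes the unit tests. The sketch below implements the standard unbiased estimator for a single problem; the benchmark score is the average over all problems. The article does not state which k its figures use, so treating them as pass@1 is an assumption.

```python
from math import comb

def pass_at_k(n, c, k):
    """Unbiased pass@k estimate: n samples drawn, c of them pass, budget of k."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    # 1 minus the chance that all k chosen samples come from the failing ones.
    return 1.0 - comb(n - c, k) / comb(n, k)

# Example: 10 samples for one problem, 3 of which pass the tests.
print(pass_at_k(10, 3, 1))
print(pass_at_k(10, 3, 5))
```

With k = 1 the estimator reduces to the plain fraction of passing samples, which is why pass@1 is the easiest figure to compare across models.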

MATH-500 (Mathematical Problem-Solving)

DeepSeek R1 leads MATH-500 with 97.3% accuracy, compared to GPT-4 Turbo’s 91.7%. DeepSeek’s CoT reasoning lets it break complex math problems into steps, and its accuracy scales with the number of reasoning tokens it generates (e.g., 66.7% accuracy at 100K+ tokens on AIME). GPT-4 Turbo, while capable, struggles with deep mathematical reasoning.

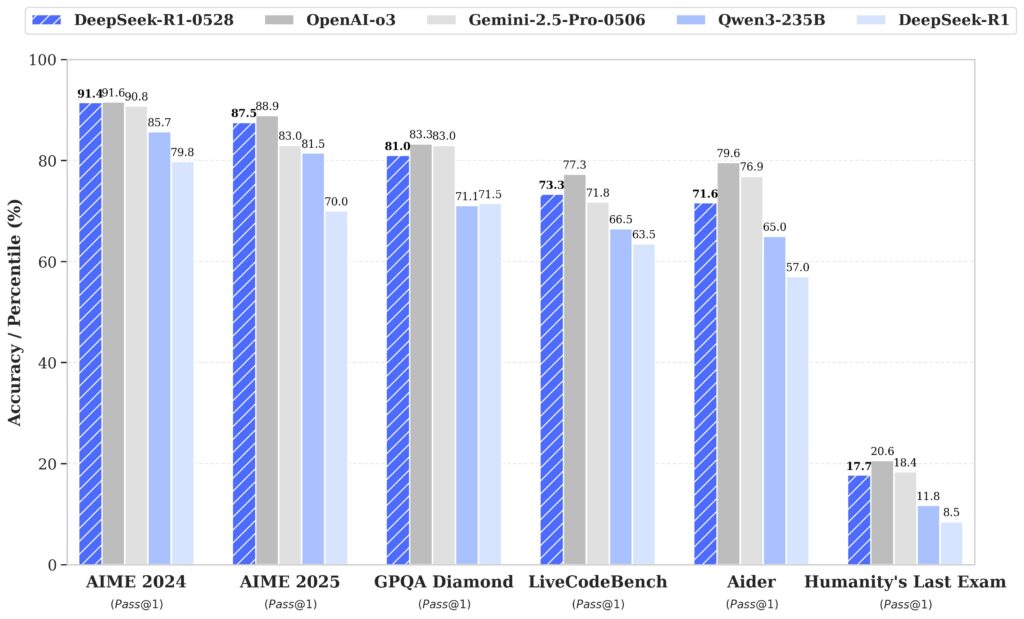

AIME 2024 (Advanced Math)

On AIME 2024, DeepSeek R1 scores 79.8%, edging out GPT-4 Turbo’s 74.2%. DeepSeek’s RL-based training enhances its ability to tackle competition-level high-school math problems, making it a top choice for academic applications.

GPQA (Graduate-Level Google-Proof Q&A)

GPT-4 Turbo slightly leads in GPQA with 74.9% versus DeepSeek R1’s 71.5%. This reflects GPT-4 Turbo’s strength in fact-based retrieval and general knowledge, areas where its dense architecture shines.

| Benchmark | DeepSeek R1 | GPT-4 Turbo | Winner |
|---|---|---|---|
| MMLU | 88.75% | 86.4% | DeepSeek R1 |
| HumanEval | 90.2% | 85.6% | DeepSeek R1 |
| MATH-500 | 97.3% | 91.7% | DeepSeek R1 |
| AIME 2024 | 79.8% | 74.2% | DeepSeek R1 |
| GPQA | 71.5% | 74.9% | GPT-4 Turbo |
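The size of each gap matters as much as the winner. As a small sketch, the snippet below recomputes the winner column and the margin in percentage points from the scores in the table; the `SCORES` dictionary and `summarize` helper are illustrative names, and the numbers are simply those reported above.

```python
# (DeepSeek R1 score, GPT-4 Turbo score) in percent, copied from the table.
SCORES = {
    "MMLU": (88.75, 86.4),
    "HumanEval": (90.2, 85.6),
    "MATH-500": (97.3, 91.7),
    "AIME 2024": (79.8, 74.2),
    "GPQA": (71.5, 74.9),
}

def summarize(scores):
    """Return (benchmark, winner, margin in percentage points) for each row."""
    rows = []
    for name, (r1, turbo) in scores.items():
        margin = round(r1 - turbo, 2)
        if margin > 0:
            winner = "DeepSeek R1"
        elif margin < 0:
            winner = "GPT-4 Turbo"
        else:
            winner = "Tie"
        rows.append((name, winner, abs(margin)))
    return rows

summary = summarize(SCORES)
for name, winner, margin in summary:
    print(f"{name:<10} {winner:<12} +{margin:.2f} pts")
```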

Use Case Breakdown

Chatbots

GPT-4 Turbo excels in conversational AI due to its low latency (232ms) and natural language fluency. It’s ideal for customer service chatbots requiring real-time responses. DeepSeek R1, while capable, has slower inference for complex queries, making it less suited for high-speed conversational tasks.

Coding

DeepSeek R1 is the preferred choice for coding tasks like algorithm design and debugging. Its 90.2% HumanEval score and memory-efficient code generation make it a favorite among developers. GPT-4 Turbo is better for code explanation and documentation, appealing to teams needing clear, user-friendly outputs.

Agents

For AI agents requiring logical reasoning, DeepSeek R1’s CoT capabilities shine. It excels in tasks like financial modeling or decision-making systems, where transparency in reasoning is critical. GPT-4 Turbo suits simpler agent tasks but struggles with deep reasoning.

Document AI

DeepSeek R1’s technical writing prowess makes it ideal for generating precise documentation, such as API guides or research papers. GPT-4 Turbo is better for creative document tasks, like marketing content, due to its fluent language generation.

Creative Writing

GPT-4 Turbo’s probabilistic generation model excels in creative writing, producing engaging and contextually rich content. DeepSeek R1, while competent, prioritizes structured outputs over creative flair.
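Teams that use both models often encode recommendations like these as a simple routing table. The sketch below does that for the use cases in this section; the task labels, the `pick_model` helper, and the choice of GPT-4 Turbo as the fallback for unlisted tasks are all illustrative assumptions rather than part of either vendor’s tooling.

```python
# Recommendations taken from the use case breakdown above.
RECOMMENDED = {
    "chatbot": "GPT-4 Turbo",
    "coding": "DeepSeek R1",
    "code_explanation": "GPT-4 Turbo",
    "agent_reasoning": "DeepSeek R1",
    "technical_docs": "DeepSeek R1",
    "creative_writing": "GPT-4 Turbo",
}

def pick_model(task, default="GPT-4 Turbo"):
    """Map a task label to the model this section recommends for it."""
    # Normalize labels such as "Creative Writing" to "creative_writing".
    key = task.strip().lower().replace(" ", "_")
    # Fallback choice is an assumption for tasks the section does not cover.
    return RECOMMENDED.get(key, default)

print(pick_model("Coding"))
print(pick_model("creative writing"))
```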

Real-World & Dev Feedback

Developers on X praise DeepSeek R1’s cost efficiency and reasoning capabilities. One user noted, “DeepSeek R1 rivals GPT-4o performance at 93% lower inference cost, reducing hardware needs” (@AI_EmeraldApple). On Reddit, a developer testing DeepSeek R1 on C/C++ tasks reported it “converted loops to AVX with no issues, outperforming GPT-4 Turbo in memory efficiency” (u/SunilKumarDash).

Hugging Face discussions highlight DeepSeek R1’s open-source appeal, with developers appreciating its flexibility for fine-tuning. However, some note its slower inference for complex tasks compared to GPT-4 Turbo’s rapid responses. GPT-4 Turbo users value its enterprise-grade support and speed, particularly for real-time applications, but criticize its higher costs.

Cost Comparison

| Model | Input Tokens (per 1M) | Output Tokens (per 1M) | Cost Advantage |
|---|---|---|---|
| DeepSeek R1 | $0.50 | $2.00 | 14.6x cheaper |
| GPT-4 Turbo | $7.30 | $29.20 | Standard pricing |

DeepSeek R1 is 14.6 times cheaper than GPT-4 Turbo, with input costs at $0.50 per million tokens and output at $2.00, versus GPT-4 Turbo’s $7.30 and $29.20, respectively. For a high-volume application processing 10 million tokens daily, DeepSeek R1 costs between $5 (all input) and $20 (all output) per day, while GPT-4 Turbo costs between $73 and $292. DeepSeek’s open-source nature also allows self-hosting, which removes per-token API fees.
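The daily figures above follow directly from the per-million-token prices. Here is a minimal sketch of that arithmetic, using the prices from the table; the 8M input / 2M output split in the example is a made-up workload, and real bills depend on current provider pricing, which may differ from the numbers quoted here.

```python
# (input price, output price) in USD per 1M tokens, copied from the table.
PRICES = {
    "DeepSeek R1": (0.50, 2.00),
    "GPT-4 Turbo": (7.30, 29.20),
}

def daily_cost(model, input_tokens, output_tokens):
    """Return the API cost in USD for one day's token volume."""
    in_price, out_price = PRICES[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000

# Hypothetical workload: 10M tokens per day, split 8M input / 2M output.
r1 = daily_cost("DeepSeek R1", 8_000_000, 2_000_000)
turbo = daily_cost("GPT-4 Turbo", 8_000_000, 2_000_000)

print(f"DeepSeek R1: ${r1:.2f}/day")
print(f"GPT-4 Turbo: ${turbo:.2f}/day")
print(f"ratio: {turbo / r1:.1f}x")
```

Because both input and output prices differ by the same factor, the ratio stays at 14.6x whatever the input/output split is.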

Total Cost of Ownership:

- DeepSeek R1: Lower API costs, no licensing fees, and self-hosting options make it ideal for startups and cost-sensitive projects. However, setup complexity may require technical expertise.

- GPT-4 Turbo: Higher costs but simplified setup via OpenAI’s infrastructure. Enterprise support and reliability reduce long-term maintenance overhead.

Final Verdict

For developers and businesses prioritizing reasoning and coding, DeepSeek R1 is the better choice. Its superior performance in MATH-500 (97.3%), HumanEval (90.2%), and AIME 2024 (79.8%), combined with 14.6x lower costs, makes it ideal for technical tasks and cost-conscious projects. Its open-source nature offers flexibility for customization, perfect for startups and researchers.

For speed-driven or enterprise applications, GPT-4 Turbo excels. Its 232ms latency and robust API support suit real-time chatbots and general-purpose tasks. Businesses needing proven reliability and enterprise integration will find GPT-4 Turbo’s ecosystem advantageous, despite higher costs.

Evaluate your needs: choose DeepSeek R1 for reasoning and coding efficiency, or GPT-4 Turbo for speed and versatility. For more AI model comparisons, check out RankLLMs’ Claude 4 vs GPT-4o analysis.

FAQ

Which model is better for coding in 2025?

DeepSeek R1 outperforms GPT-4 Turbo in coding tasks, scoring 90.2% on HumanEval versus 85.6%. It excels in algorithm design and debugging, while GPT-4 Turbo is better for code explanation.

How do DeepSeek R1 and GPT-4 Turbo compare in cost?

DeepSeek R1 is 14.6x cheaper, with $0.50/$2.00 per million input/output tokens versus GPT-4 Turbo’s $7.30/$29.20. DeepSeek’s open-source model also allows self-hosting with no licensing fees, although you still pay for your own hardware.

Is DeepSeek R1 faster than GPT-4 Turbo?

No, GPT-4 Turbo is faster, with 232ms latency for real-time tasks. DeepSeek R1’s reasoning focus can slow inference for complex queries.

Which model is better for reasoning tasks?

DeepSeek R1 excels in reasoning, outperforming GPT-4 Turbo in MATH-500 (97.3% vs 91.7%) and AIME 2024 (79.8% vs 74.2%) thanks to its chain-of-thought (CoT) training.

Can DeepSeek R1 handle multimodal tasks?

No, DeepSeek R1 is text-only, while GPT-4 Turbo accepts both text and image inputs, making it better for multimodal applications.

Sources

- DeepSeek AI – DeepSeek R1 Official Repository

- LLMs Leaderboard by RankLLMS

- DeepSeek API Documentation

- Hugging Face – DeepSeek R1 Model

- DocsBot AI – Model Comparison Tool

- Eden AI – GPT-4o vs DeepSeek R1 Analysis

- Medium – Performance Metrics Analysis

- Analytics Vidhya – AI Model Comparison

- TechCrunch – DeepSeek R1 Hardware Requirements

- Reddit – Notes on DeepSeek V3

- X Post by @AI_EmeraldApple
