Not sure whether to choose Llama 3.1 or Llama 3.2? Here we explore the key differences, benchmarks, features, and use cases, and tell you which Meta model is best to choose in 2025.

Table of Contents

- Introduction

- What is Llama 3.1?

- What is Llama 3.2?

- Llama 3.1 vs 3.2: Key Differences Table

- Performance Benchmarks

- Use Case Comparison

- Hardware & Deployment Efficiency

- Model Licensing & Availability

- Which Llama Model Should You Use?

- Final Verdict

- FAQs

1. Introduction

Meta continues to innovate with its Llama (Large Language Model Meta AI) series, pushing the boundaries of open-source LLM performance and efficiency. With the release of Llama 3.1 and the recent announcement of Llama 3.2, users are eager to know what sets them apart. In this article, we analyze the key differences between Llama 3.1 vs Llama 3.2, including performance, benchmarks, hardware usage, licensing, and use cases.

2. What is Llama 3.1?

Released on July 23, 2024, Llama 3.1 is a high-performance open-source model family from Meta AI. It builds on Llama 3 with improvements in:

- Context window (expanded to 128K tokens)

- Instruction tuning

- Coding capabilities

- Chat quality

Key specs:

- Models: 8B, 70B, 405B

- Focus: Accuracy, depth, reasoning

- Training: 15 trillion tokens

- License: Llama 3.1 Community License

- Best for: Chatbots, coding tasks, summarization, research

3. What is Llama 3.2?

Announced at Meta Connect 2024 in September 2024, Llama 3.2 comes in two families: lightweight, text-only 1B and 3B models optimized for on-device and edge use, and 11B and 90B Vision models that can reason over images as well as text. The small models trade some capability for efficiency, which makes them practical on mobile and edge hardware.

Key highlights:

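- Models: 1B and 3B (text-only); 11B and 90B (Vision)

- Purpose: On-device inference (1B, 3B) and image reasoning (11B, 90B Vision)

- Optimized for: Smartphones and other edge devices (Qualcomm, MediaTek, and Arm hardware)

- Training: 1B and 3B built with pruning and knowledge distillation from Llama 3.1 8B and 70B; Vision models add an image adapter to Llama 3.1 language models

- Available via: llama.com, Hugging Face, and partner cloud platforms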

4. Llama 3.1 vs 3.2: Key Differences Table

| Feature | Llama 3.1 (70B) | Llama 3.2 (90B Vision) |
|---|---|---|
| Alias | Llama 3.1 70B | Llama 3.2 90B Vision |
| Description (provider) | Highly performant, cost-effective model that enables diverse use cases. | Multimodal models that are flexible and can reason on high-resolution images. |
| Release date | July 23, 2024 | September 25, 2024 |
| Developer | Meta | Meta |
| Primary use cases | NLP, content creation, research | Vision tasks, NLP, research |
| Context window | 128K tokens | 128K tokens |
| Max output tokens (provider) | 2,048 tokens | – |
| Processing speed | – | – |
| Knowledge cutoff | December 2023 | December 2023 |
| Multimodal | Accepted input: text | Accepted input: text, image |
| Fine-tuning | Yes | Yes |

Sources:

Llama 3.1 Model Card: https://github.com/meta-llama/llama-models/blob/main/models/llama3_1/MODEL_CARD.md

Llama 3.2 Model Card: https://github.com/meta-llama/llama-models/blob/main/models/llama3_2/MODEL_CARD_VISION.md

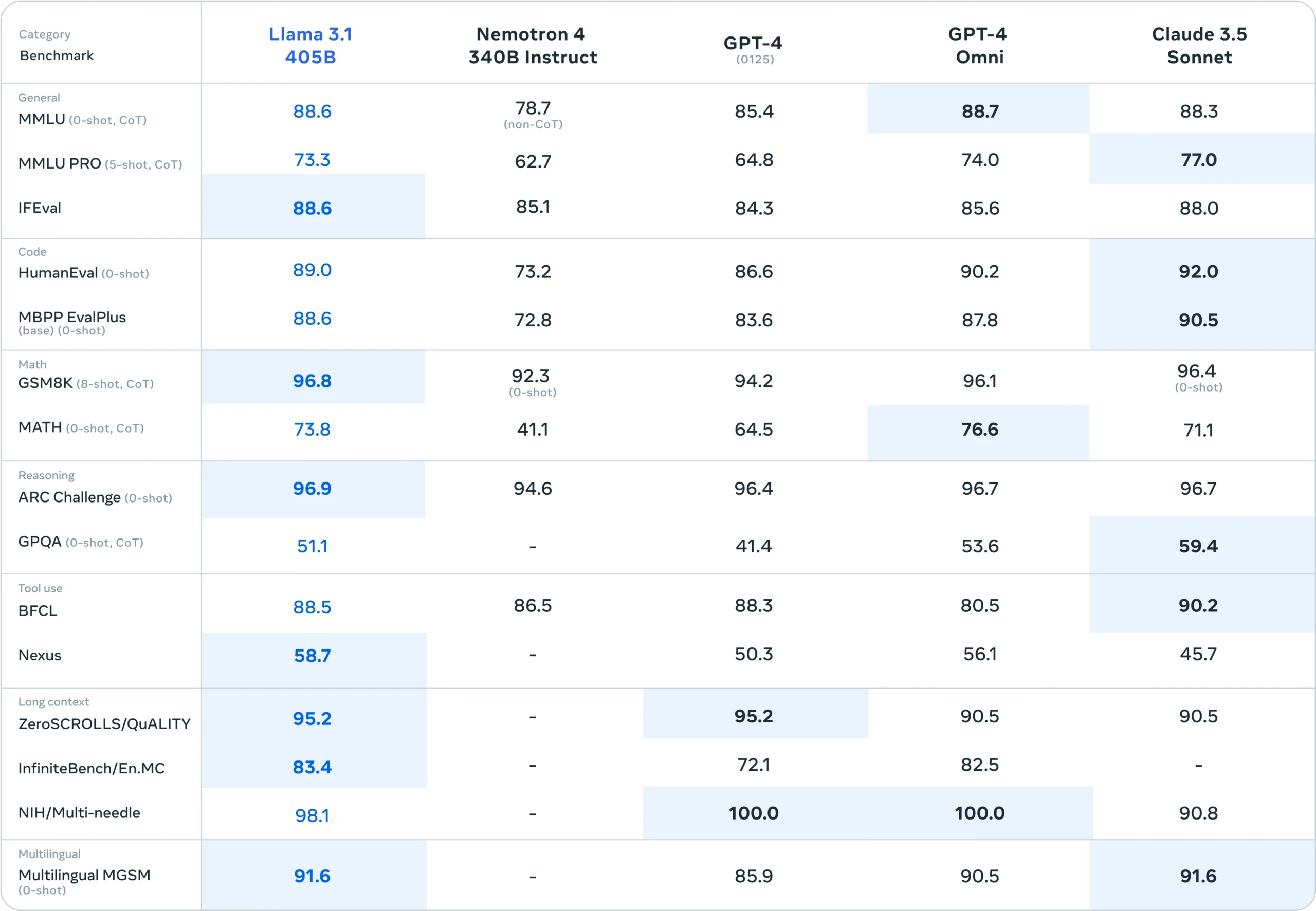
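The table lists a 128K-token context window for both models and, for Llama 3.1, a 2,048-token output cap. Because the prompt and the generated text share one context window, it can help to check the token budget before sending a request. The sketch below is a minimal illustration, assuming "128K" means 131,072 positions (128 × 1024) and treating the 2,048 figure as a provider-side cap rather than a property of the model; the function names are hypothetical and not part of any Meta or provider API.

```python
# "128K tokens" read as 128 * 1024 = 131,072 positions (assumption).
CONTEXT_WINDOW = 128 * 1024
# Output cap from the table's "Max output tokens" row; assumed to be a
# provider-side limit rather than a property of the model weights.
PROVIDER_OUTPUT_CAP = 2048


def fits_in_context(prompt_tokens: int, max_new_tokens: int,
                    context_window: int = CONTEXT_WINDOW) -> bool:
    """True if the prompt and the requested output fit together in one window."""
    if prompt_tokens < 0 or max_new_tokens < 0:
        raise ValueError("token counts must be non-negative")
    # Prompt and generated tokens share the same window, so sum them.
    return prompt_tokens + max_new_tokens <= context_window


def max_output_budget(prompt_tokens: int,
                      output_cap: int = PROVIDER_OUTPUT_CAP,
                      context_window: int = CONTEXT_WINDOW) -> int:
    """Largest output length allowed by both the window and the output cap."""
    remaining = max(context_window - prompt_tokens, 0)
    return min(remaining, output_cap)


print(fits_in_context(120_000, 2_048))  # True
print(fits_in_context(130_000, 2_048))  # False
print(max_output_budget(130_000))       # 1072
```

In practice the window, not the cap, becomes the limit only for very long prompts: with a short prompt the output budget is simply the 2,048-token cap.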

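5. Performance Benchmarks

To compare Llama 3.1 and Llama 3.2, we look at the scores Meta reports for Llama 3.1 70B Instruct and Llama 3.2 90B Vision Instruct on several widely recognized benchmarks. The text-only scores are identical because the 90B Vision model keeps the Llama 3.1 70B language-model weights frozen and adds image understanding through a separate adapter.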

| Benchmark | Llama 3.1 70B Instruct | Llama 3.2 90B Vision Instruct |
|---|---|---|
| MMLU (multitask accuracy) | 86% | 86% |
| HumanEval (code generation) | 80.5% | Not reported |
| MATH (math problems) | 68% | 68% |
| MGSM (multilingual math reasoning) | 86.9% | 86.9% |

Note: All figures are taken from Meta's official model cards and benchmark pages. Meta's original benchmark images are reproduced below; see the official Llama 3.1 and Llama 3.2 benchmark pages for the full results.

Llama 3.1 Benchmarks

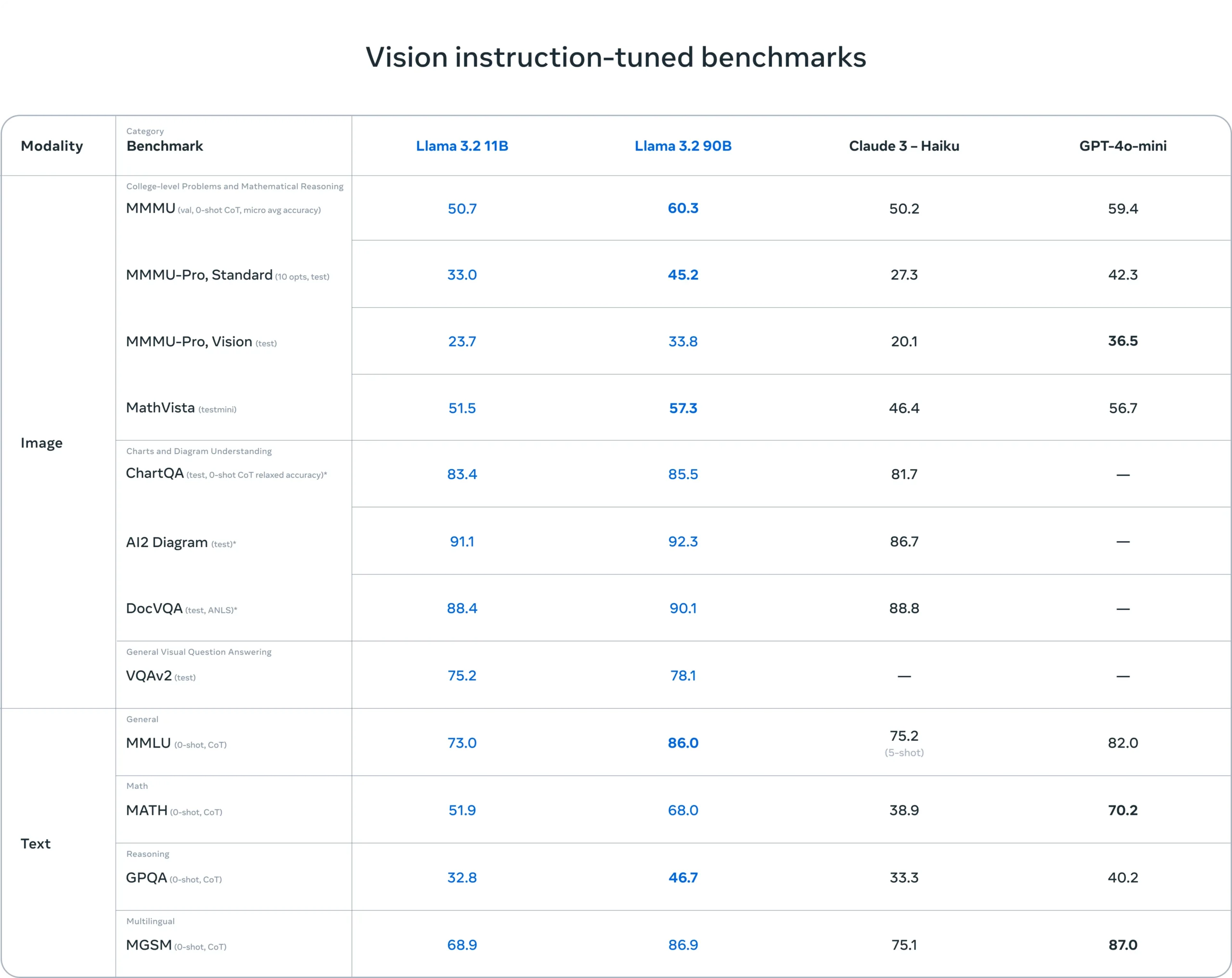

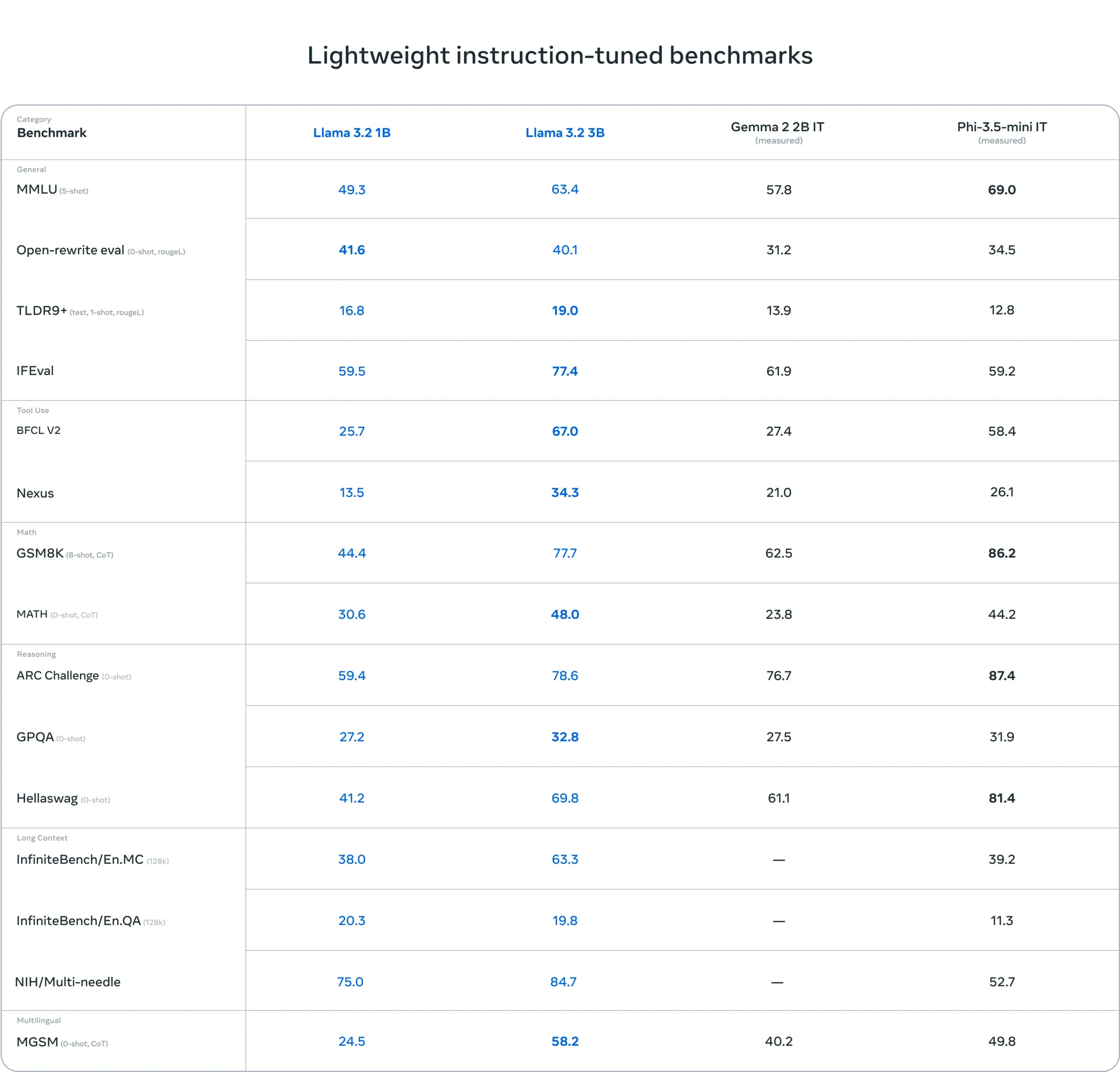

Llama 3.2 Benchmarks

6. Use Case Comparison

| Use Case | Best Model | Why |
|---|---|---|
| Advanced Chatbots | Llama 3.1 | Larger models (70B, 405B) with stronger contextual understanding |
| Mobile AR Assistants | Llama 3.2 | 1B and 3B models optimized for on-device inference |
| On-device NLP Tasks | Llama 3.2 | Low memory footprint, fast responses |
| Server-based QA Apps | Llama 3.1 | Larger models for deeper comprehension |
| Code Generation | Llama 3.1 | Strong HumanEval score (80.5% for 70B Instruct) |
| Image Understanding | Llama 3.2 Vision | Accepts both image and text input |

7. Hardware & Deployment Efficiency

The lightweight Llama 3.2 models (1B and 3B) are built for hardware-constrained environments, including:

- Meta Ray-Ban smart glasses

- Mobile phones

- AR/VR headsets

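Meta enabled these models on Qualcomm (Snapdragon) and MediaTek hardware at launch and optimized them for Arm processors, so they can run on phones and edge devices instead of data-center GPUs.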

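In contrast, the larger Llama 3.1 models (70B and 405B) need server-class GPUs such as the A100 or H100, or a cloud deployment, for real-time responsiveness. The 8B model can run on a single high-memory consumer GPU, especially when quantized.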
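A rough way to see why the hardware needs differ so much is to estimate the memory needed just to hold the weights: parameters × bits per parameter ÷ 8. The sketch below uses nominal sizes taken from the model names and a hypothetical 20% overhead factor for the KV cache and activations; real usage depends on context length, batch size, and the inference runtime, so treat the results as ballpark figures only.

```python
# Nominal parameter counts in billions, taken from the model names.
# Actual counts differ slightly (e.g. the "3B" model is a little over 3B).
MODEL_SIZES_B = {
    "Llama 3.2 1B": 1,
    "Llama 3.2 3B": 3,
    "Llama 3.1 8B": 8,
    "Llama 3.1 70B": 70,
    "Llama 3.1 405B": 405,
}


def weight_memory_gb(params_billion: float, bits_per_param: int) -> float:
    """GB (1e9 bytes) needed to store the weights alone."""
    return params_billion * bits_per_param / 8


def fits_in_memory(params_billion: float, bits_per_param: int,
                   memory_gb: float, overhead: float = 1.2) -> bool:
    """Rough check; `overhead` is an assumed factor for KV cache and activations."""
    return weight_memory_gb(params_billion, bits_per_param) * overhead <= memory_gb


for name, size in MODEL_SIZES_B.items():
    fp16 = weight_memory_gb(size, 16)
    int4 = weight_memory_gb(size, 4)
    print(f"{name:<15} 16-bit: {fp16:6.1f} GB   4-bit: {int4:6.1f} GB")
```

Under these assumptions a 4-bit 3B model fits comfortably in 6 GB (`fits_in_memory(3, 4, 6)` is `True`), while a 16-bit 70B model needs about 140 GB for its weights alone, more than a single 80 GB A100 or H100 provides.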

8. Model Licensing & Availability

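Both models are released under Meta's community licenses (the Llama 3.1 Community License and the Llama 3.2 Community License), which permit commercial use; companies whose products exceed 700 million monthly active users must request a separate license from Meta.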

- Llama 3.1 is available on Hugging Face, AWS, and Azure.

- Llama 3.2 is available for download on llama.com and Hugging Face, and through partner cloud platforms.

9. Which Llama Model Should You Use?

- Use Llama 3.1 if:

  - You are building research apps

  - Your task requires deep reasoning or coding

  - You want best-in-class open-source benchmark results

- Use Llama 3.2 if:

  - You are deploying to mobile/AR devices

  - You need real-time inference in under 6 GB of VRAM (1B and 3B models)

  - You are integrating with the Meta AI assistant

  - You need image input (11B and 90B Vision models)
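The checklist above can be encoded as a small decision helper. This is a hypothetical sketch of the article's rules of thumb, not an official Meta tool, and the `Requirements` fields are illustrative names: image input points to the Llama 3.2 Vision models, on-device use or less than 6 GB of VRAM points to the lightweight Llama 3.2 models, and everything else defaults to Llama 3.1.

```python
from dataclasses import dataclass


@dataclass
class Requirements:
    """Hypothetical description of a deployment; field names are illustrative."""
    needs_image_input: bool = False
    on_device: bool = False
    vram_gb: float = 80.0


def suggest_llama(req: Requirements) -> str:
    """Apply the article's rules of thumb in priority order."""
    if req.needs_image_input:
        # Only the Llama 3.2 Vision models accept images.
        return "Llama 3.2 Vision (11B or 90B)"
    if req.on_device or req.vram_gb < 6:
        # Small text models for phones, edge devices, and low-VRAM GPUs.
        return "Llama 3.2 (1B or 3B)"
    # Server-side text, reasoning, and coding workloads.
    return "Llama 3.1 (8B, 70B, or 405B)"


print(suggest_llama(Requirements(on_device=True)))
print(suggest_llama(Requirements(vram_gb=80)))
```

Image input is checked first because only the Vision models accept images, even though they are too large for most phones; a real decision would also weigh latency, cost, and licensing.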

10. Final Verdict

Conclusion and Recommendations

In conclusion, both Llama 3.1 and Llama 3.2 are powerful models, each suited for different tasks. Llama 3.1 provides a strong foundation for traditional natural language processing tasks such as text generation, translation, and summarization. Its optimized transformer architecture ensures efficiency and scalability for text-only applications.

Llama 3.2's 11B and 90B Vision models, however, build on Llama 3.1 by adding multimodal capabilities through a vision adapter. This allows them to process and understand both text and images, making them well suited to tasks like image captioning, visual question answering, and other multimodal applications. The adapter feeds image-encoder representations into the language model through cross-attention layers, enhancing its versatility.
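To make the cross-attention idea concrete, here is a toy single-head cross-attention layer in NumPy: text hidden states provide the queries, image-encoder features provide the keys and values, and the result is added back to the text states as a residual. The dimensions and random weights are arbitrary assumptions for illustration; Meta's actual adapter stacks many such layers with its own sizes and details, so read this as the general technique rather than Llama 3.2's implementation.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the max along the axis for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(text_h, image_h, w_q, w_k, w_v, w_o):
    """Single-head cross-attention: text tokens attend to image features.

    text_h:  (n_text, d_model)   hidden states from the language model
    image_h: (n_patches, d_img)  features from the image encoder
    """
    q = text_h @ w_q                          # (n_text, d_head), queries from text
    k = image_h @ w_k                         # (n_patches, d_head), keys from image
    v = image_h @ w_v                         # (n_patches, d_head), values from image
    scores = q @ k.T / np.sqrt(q.shape[-1])   # (n_text, n_patches)
    weights = softmax(scores, axis=-1)        # each text token's weights over patches
    attended = weights @ v                    # (n_text, d_head)
    # Project back to the model width and add as a residual update.
    return text_h + attended @ w_o            # (n_text, d_model)


rng = np.random.default_rng(0)
d_model, d_img, d_head = 16, 32, 8
n_text, n_patches = 5, 10
text_h = rng.normal(size=(n_text, d_model))
image_h = rng.normal(size=(n_patches, d_img))
w_q = rng.normal(size=(d_model, d_head)) / np.sqrt(d_model)
w_k = rng.normal(size=(d_img, d_head)) / np.sqrt(d_img)
w_v = rng.normal(size=(d_img, d_head)) / np.sqrt(d_img)
w_o = rng.normal(size=(d_head, d_model)) / np.sqrt(d_head)

out = cross_attention(text_h, image_h, w_q, w_k, w_v, w_o)
print(out.shape)  # (5, 16)
```

The residual form is one reason an adapter like this can be attached to a frozen language model: if the adapter contributes nothing (for example, a zero output projection), the text hidden states pass through unchanged.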

Ultimately, the choice between Llama 3.1 and Llama 3.2 depends on your specific needs. If your work focuses on text-based tasks, Llama 3.1 is a reliable, efficient choice. However, if you need multimodal capabilities for image and text processing, or a compact model for on-device use, Llama 3.2 offers an advanced solution. The instruction-tuned versions of both families are aligned for helpfulness and safety, making them valuable tools for a wide range of AI applications.