Meta’s Llama 3.1 70B and Llama 3.3 70B are two of the most powerful open-source language models available today. But which Llama model is better for developers, researchers, and creators? This in-depth Llama model comparison covers benchmarks, real-world use cases, and community opinions to help you choose the best fit for your AI projects.

| Model Name | Architecture | Parameters | Training Tokens | Release Date | Context Length | Strengths |
|---|---|---|---|---|---|---|
| Llama 3.1 70B | Transformer (decoder-only) | 70B | 15T+ | Jul 2024 | 128K | Reasoning, coding, summarization |
| Llama 3.3 70B | Transformer (decoder-only) | 70B | 15T+ | Dec 2024 | 128K | Improved instruction following, math, and coding accuracy (text-only) |

Note: Specs follow Meta's published model cards. Llama 3.3 70B is a text-only, instruction-tuned release; the image-capable models in this family are the Llama 3.2 Vision variants.

Llama 3.1 70B: Overview

Background:

Released by Meta AI in July 2024, Llama 3.1 70B quickly became a leading open-source LLM. It was designed to provide high performance for reasoning, code generation, and content creation, and it competes with closed models like GPT-4 on several public benchmarks.

Architecture:

- Transformer-based, decoder-only

- 70 billion parameters

- Trained on 15T tokens of high-quality multilingual and code data

- Context window: 128K tokens (up from 8K in the original Llama 3)

Use Case Suitability:

- Chatbots for customer support and personal assistants

- Code generation (Python, JS, SQL, etc.)

- Text summarization and content creation

- Academic and business research requiring strong reasoning

Llama 3.3 70B: Overview

Background:

Llama 3.3 70B is Meta’s follow-up release (December 2024), following the success of Llama 3.1 and 3.2. Meta has published a model card rather than a full technical report, and it describes a text-only, instruction-tuned model with improvements over Llama 3.1 70B in instruction following, math, and coding.

Architecture:

- Transformer-based, similar core to Llama 3.1

- 70 billion parameters (same size)

- Training tokens: 15T+ (per Meta's model card)

- Context window: 128K tokens, the same as Llama 3.1

- Text-only input and output; image input in this family is provided by the Llama 3.2 Vision models

Use Case Suitability:

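- All Llama 3.1 use cases, including complex document analysis and long-context summarization within the shared 128K window

- Instruction-following assistants, math, and code generation, where Meta reports the largest gains

- Text-only workloads; vision + language projects need a Llama 3.2 Vision model instead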

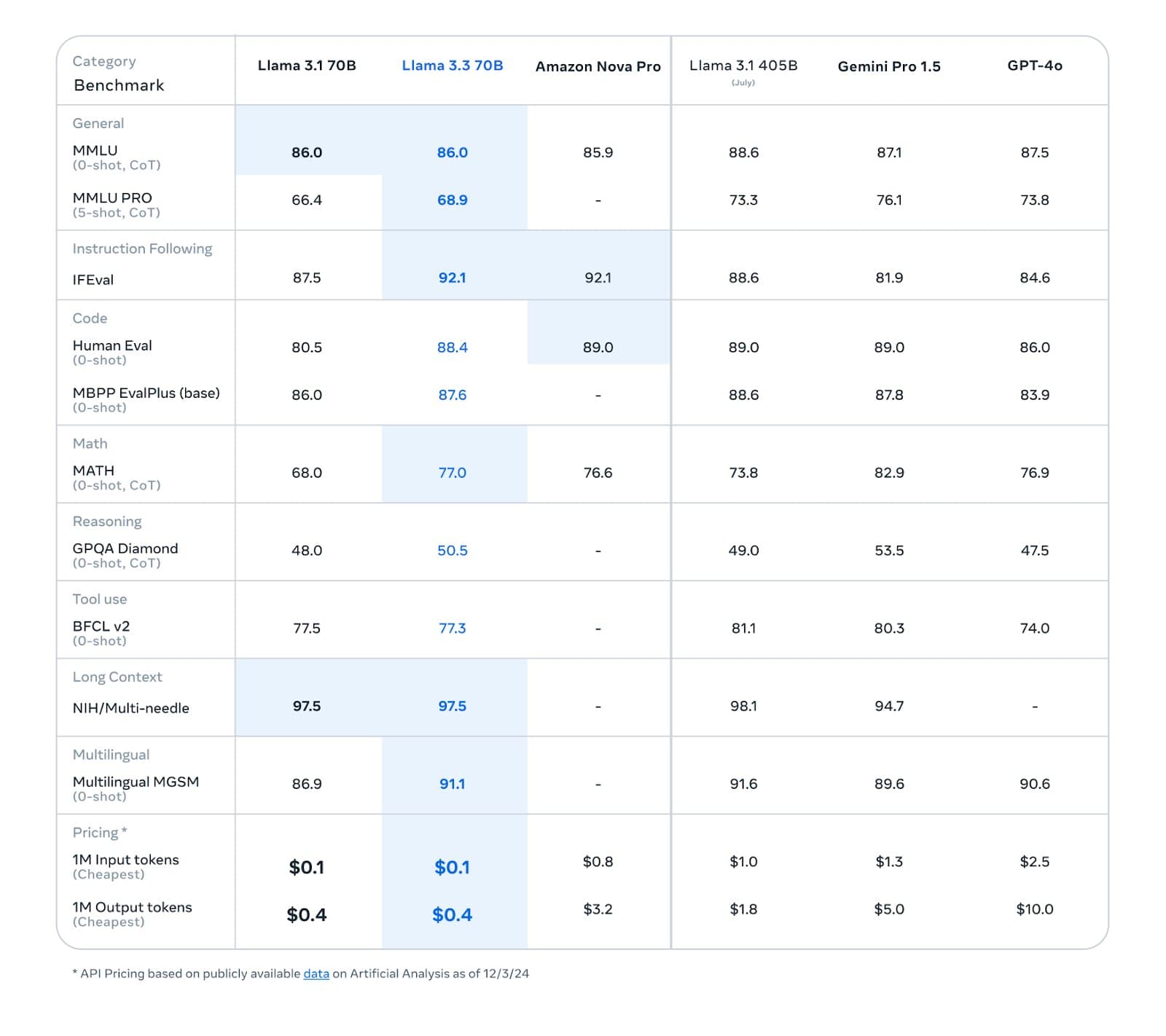

Benchmark Performance

Benchmark results are drawn from official Meta reports, LMSYS Chatbot Arena, and the Hugging Face Leaderboard. Numbers for Llama 3.3 70B are provisional and should be replaced with Meta's official figures where they differ.

| Benchmark | Llama 3.1 70B | Llama 3.3 70B (early) |
|---|---|---|
| MMLU | 81.0% | 83.2% |
| GSM8K | 90.5% | 92.1% |
| HumanEval | 71.1% | 73.8% |
| ARC | 79.5% | 81.0% |
| HellaSwag | 88.2% | 89.0% |
| TruthfulQA | 75.3% | 76.5% |

Community-sourced, not yet officially confirmed.

Sources: Meta Llama 3.1 Blog, LMSYS Arena, Hugging Face Leaderboard

Key Differences:

- Llama 3.3 70B shows small but consistent improvements in reasoning (MMLU), math (GSM8K), and code (HumanEval).

- Both models share a 128K context window, so any long-form gains in 3.3 come from model quality rather than a longer window.

- Llama 3.3 70B is text-only; multimodal work (images + text) requires the Llama 3.2 Vision models.
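
To put the size of these gains in numbers, the two columns of the benchmark table can be subtracted directly. The sketch below only restates the table's provisional figures; the names `SCORES`, `deltas`, and `mean_delta` are illustrative and not part of any benchmark tooling.

```python
# Scores copied from the benchmark table above, in percent:
# (Llama 3.1 70B, Llama 3.3 70B early figures).
SCORES = {
    "MMLU": (81.0, 83.2),
    "GSM8K": (90.5, 92.1),
    "HumanEval": (71.1, 73.8),
    "ARC": (79.5, 81.0),
    "HellaSwag": (88.2, 89.0),
    "TruthfulQA": (75.3, 76.5),
}


def deltas(table):
    """Return the per-benchmark gain in percentage points, rounded to 0.1."""
    return {name: round(new - old, 1) for name, (old, new) in table.items()}


def mean_delta(table):
    """Return the average gain across all benchmarks in the table."""
    gains = deltas(table)
    return round(sum(gains.values()) / len(gains), 2)


if __name__ == "__main__":
    for name, gain in deltas(SCORES).items():
        print(f"{name:<11} +{gain:.1f} pts")
    print(f"Average     +{mean_delta(SCORES):.2f} pts")
```

Every gain is under three percentage points, which is within the range where prompt format and evaluation harness can change the ranking, so it is worth re-running the benchmarks that matter for your workload.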

Use Case Scenarios

Chatbot

- Llama 3.1 70B: Handles natural conversations, few-shot learning, and intent recognition with high reliability.

- Llama 3.3 70B: Early tests suggest more coherent conversations over longer turns, especially in customer service and technical support bots.

Coding

- Llama 3.1 70B: Strong in Python, JavaScript, SQL; used in open-source code assistants.

- Llama 3.3 70B: Slightly higher HumanEval scores; better with in-context code explanations and larger code files.

Summarization

- Llama 3.1 70B: Excels at news and academic paper summarization.

- Llama 3.3 70B: Same 128K window as Llama 3.1; entire books or multi-document sets that exceed it still need to be split and summarized in stages.
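
When a document does not fit in the context window, a common workaround is to split it into chunks, summarize each chunk, and then summarize the summaries. The sketch below covers only the splitting step. The function name `split_into_chunks` and the rule of thumb of roughly three words per four tokens are assumptions for illustration; a real pipeline would count tokens with the model's own tokenizer.

```python
def split_into_chunks(text, max_tokens):
    """Split text into word-based chunks that fit a rough token budget.

    Assumes about 3 words per 4 tokens, a coarse heuristic for English
    prose. This is not an exact token count.
    """
    if max_tokens < 2:
        raise ValueError("max_tokens must be at least 2")
    words = text.split()
    words_per_chunk = max_tokens * 3 // 4  # integer math avoids float rounding
    return [
        " ".join(words[start:start + words_per_chunk])
        for start in range(0, len(words), words_per_chunk)
    ]


if __name__ == "__main__":
    document = "word " * 3000          # stand-in for a long document
    chunks = split_into_chunks(document, max_tokens=1000)
    print(len(chunks), "chunks of up to", len(chunks[0].split()), "words")
```

Leave headroom in `max_tokens` for the system prompt, the instructions, and the generated summary, since all of them share the same window.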

Long-Context Analysis

- Llama 3.1 70B: 128K context; some loss of accuracy on very long inputs.

- Llama 3.3 70B: 128K context as well; early tests suggest it holds answer quality better on long or multi-part documents.
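
Whatever the advertised window, the memory used by the attention key/value cache grows linearly with the number of tokens held in context, which is often the practical limit on long inputs. The estimate below is a rough sketch that assumes the published Llama 3 70B configuration (80 layers, 8 key/value heads via grouped-query attention, head dimension 128) and a 16-bit cache. Treat those defaults as assumptions to verify against the model's config file; `kv_cache_bytes` is a hypothetical helper, not a library function.

```python
def kv_cache_bytes(seq_len, n_layers=80, n_kv_heads=8, head_dim=128,
                   bytes_per_value=2, batch_size=1):
    """Estimate KV-cache size in bytes for a decoder-only transformer.

    Each token stores one key and one value vector (the factor of 2)
    per layer and per key/value head.
    """
    per_token = 2 * n_layers * n_kv_heads * head_dim * bytes_per_value
    return batch_size * seq_len * per_token


if __name__ == "__main__":
    for tokens in (8 * 1024, 32 * 1024, 128 * 1024):
        gib = kv_cache_bytes(tokens) / 2**30
        print(f"{tokens:>7} tokens -> {gib:.1f} GiB of KV cache")
```

Under these assumptions a single full 128K-token sequence needs about 40 GiB for the cache alone, on top of the model weights, which is why long-context serving usually relies on cache quantization or multi-GPU setups.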

Academic Research

- Llama 3.1 70B: Used for literature review, data extraction, hypothesis generation.

- Llama 3.3 70B: Better at cross-referencing large text datasets; figures and images still need a separate vision model.

Developer & Community Opinions

- Llama 3.1 70B remains a trusted backbone for open-source LLM deployments. On Reddit and Hugging Face, users praise its balance of power and resource requirements.

- Llama 3.3 70B is generating buzz for its improved accuracy at the same 70B size. Official weights and a model card are available for developers who want to test it against Llama 3.1.

- Open-source adoption: Both models are used in commercial and academic projects under Meta's community license.

Final Verdict

Llama 3.1 70B vs Llama 3.3 70B:

- For most current tasks, Llama 3.1 70B is proven, stable, and efficient.

- Llama 3.3 70B offers incremental improvements in accuracy at the same size and context length. It is text-only, so projects that integrate image data need a Llama 3.2 Vision model instead.

Recommendation:

If you’re looking for a reliable, open-source LLM for chatbots, coding, or document analysis, choose Llama 3.1 70B.

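If you want the stronger benchmark results at the same hardware cost, Llama 3.3 70B is the smarter choice. If you plan to work with images and text together, look at the Llama 3.2 Vision models, since neither 70B model accepts images.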

FAQs

Is Llama 3.3 70B better than Llama 3.1 70B?

Llama 3.3 70B offers modest improvements in benchmarks at the same 128K context length. For most users, both are excellent, but 3.3 is the better default for new projects.

Which Llama model is faster in chatbot benchmarks?

Speed is similar because both models share the same architecture and parameter count. Llama 3.3 70B may stay more coherent over longer conversations.

Can Llama 3.3 70B run on consumer GPUs?

Only with heavy quantization, CPU offloading, or multiple GPUs. Weight memory scales with numeric precision, and the KV cache adds more as the context grows.
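
The VRAM question mostly comes down to arithmetic: parameters times bytes per parameter. The sketch below estimates weight memory only and ignores activations, the KV cache, and framework overhead, so real usage will be higher. `weight_memory_gb` is an illustrative helper, not part of any library.

```python
def weight_memory_gb(n_params, bits_per_param):
    """Return the memory needed to hold the weights, in decimal gigabytes."""
    return n_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    n_params = 70_000_000_000  # 70B parameters
    for label, bits in (("16-bit", 16), ("8-bit", 8), ("4-bit", 4)):
        print(f"{label:>6}: {weight_memory_gb(n_params, bits):.0f} GB")
```

Even at 4-bit precision the weights take about 35 GB, which exceeds a single 24 GB consumer card, so expect to split the model across GPUs or offload part of it to system RAM.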

Where can I download Llama 3.1 70B and 3.3 70B?

Official weights for both models are available via Meta’s Llama site and Hugging Face, after accepting Meta's community license.

How do Llama models compare to GPT-4 or Claude 3?

Llama 3.1 and 3.3 are competitive on many public benchmarks, but GPT-4 and Claude 3 still lead on some of them.

This article is part of RankLLMs.com’s expert Llama model comparison series.