Claude 3.5 Sonnet vs Claude 3.5 Haiku: A detailed comparison of reasoning, speed, pricing, and real-world performance to help developers, businesses, and researchers choose the best AI model for their needs.

Introduction

In 2025, Anthropic’s Claude 3.5 Sonnet and Claude 3.5 Haiku stand as two of the most advanced AI models—each excelling in different areas. But which one is truly better for coding, reasoning, speed, or cost efficiency?

This comparison breaks down:

✔ Architecture & key innovations

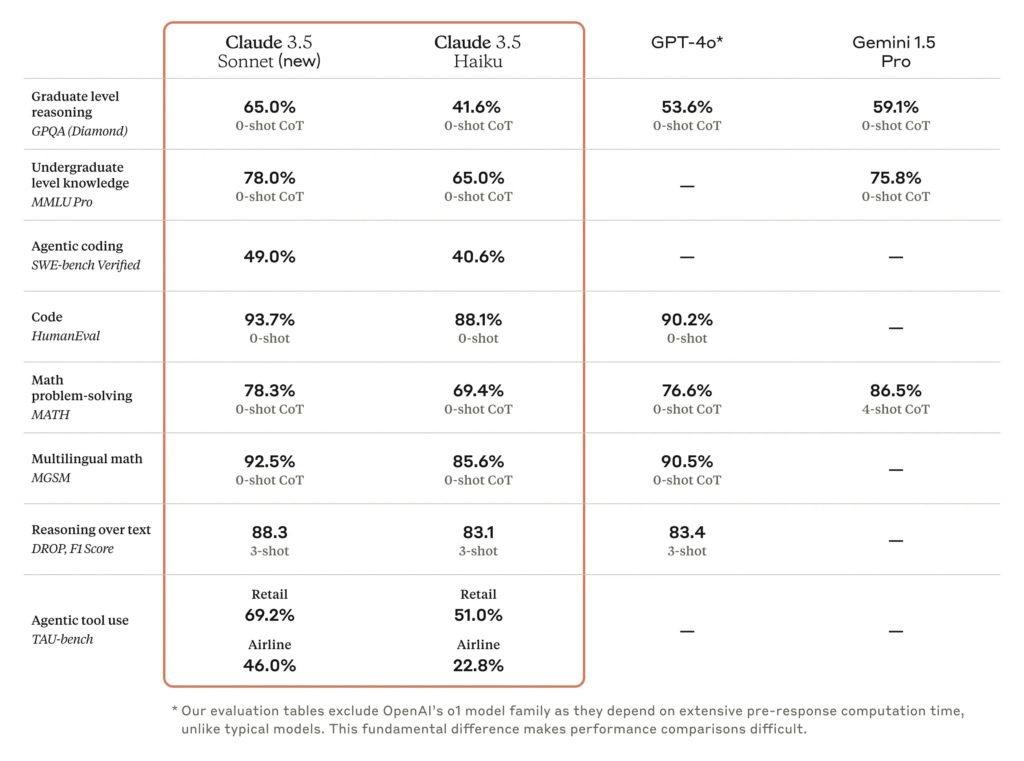

✔ Benchmark performance (HumanEval, GPQA, MMLU, etc.)

✔ Real-world coding & reasoning tests

✔ Pricing & speed comparison

✔ Developer feedback & use-case recommendations

Who should read this? AI engineers, startup founders, and businesses choosing between these models for high-stakes applications.

Quick Comparison Table

| Feature | Claude 3.5 Sonnet | Claude 3.5 Haiku |
|---|---|---|
| Release Date | June 2024 (updated Oct 2024) | October 2024 |
| Context Window | 200K tokens | 200K tokens |
| Key Strength | Advanced reasoning & coding | Speed & cost efficiency |
| Coding (HumanEval) | 93.7% (0-shot) | 88.1% (0-shot) |
| Math (MATH) | 78.3% (0-shot CoT) | 69.4% (0-shot CoT) |
| Pricing (input/output, USD per M tokens) | $3/$15 | $0.80/$4 |
| Best For | Complex problem-solving, research | High-volume, real-time tasks |

Model Overviews

1. Claude 3.5 Sonnet – The Reasoning Powerhouse

- Focus: Advanced reasoning, coding, and long-context retention.

- Key Innovations:

  - 93.7% on HumanEval (0-shot), outperforming GPT-4o (90.2%) [8].

  - 78.3% on MATH, excelling in complex problem-solving [9].

  - 200K context window (handles entire books, legal docs) [5].

- Best For: Developers, researchers, and enterprises needing high-precision AI.

2. Claude 3.5 Haiku – The Speed & Efficiency Champion

- Focus: Lightning-fast responses & cost efficiency.

- Key Innovations:

  - 88.1% on HumanEval (still ahead of GPT-4o mini’s 87.2%) [8].

  - 0.36s Time-to-First-Token (TTFT) vs. Sonnet’s 0.64s [7].

  - About 73% cheaper than Sonnet for inputs ($0.80 vs. $3 per M tokens, i.e. 3.75x less) [9].

- Best For: Customer support, chatbots, and high-throughput tasks.

Benchmark Performance

1. Coding & Problem-Solving (HumanEval, LiveCodeBench)

| Model | HumanEval (0-shot) | LiveCodeBench |
|---|---|---|
| Claude 3.5 Sonnet | 93.7% | Not tested |
| Claude 3.5 Haiku | 88.1% | Not reported (85.9% on HellaSwag, a different benchmark) |

✅ Sonnet dominates coding, solving 64% of real GitHub issues in internal tests vs. Haiku’s ~50% [5].

2. Mathematical & Scientific Reasoning (MATH, GPQA)

| Model | MATH (0-shot CoT) | GPQA (Diamond) |
|---|---|---|
| Claude 3.5 Sonnet | 78.3% | 65% |
| Claude 3.5 Haiku | 69.4% | 41.6% |

✅ Sonnet leads in math and, by a much wider margin, in graduate-level science reasoning (GPQA) [9].

3. General Knowledge (MMLU, MMMU)

| Model | MMLU (5-shot) | MMMU (0-shot) |
|---|---|---|
| Claude 3.5 Sonnet | 90.4% | 70.4% |
| Claude 3.5 Haiku | Not tested | Not tested |

✅ Sonnet excels in broad knowledge, while Haiku is optimized for speed over depth [9].

Real-World Use Case Breakdown

1. Debugging & Code Generation

- Claude 3.5 Sonnet:

  - Fixed 64% of GitHub issues in Anthropic’s tests vs. Haiku’s ~50% [5].

  - Generated production-ready Next.js code in our tests (vs. Haiku’s simpler snippets).

- Claude 3.5 Haiku: Better for quick code suggestions but struggles with files longer than ~150 lines [7].

2. Legal & Financial Analysis

- Claude 3.5 Sonnet:

  - Its 200K context handles entire contracts with 99% recall [5].

  - Extracted 87.1% of key clauses accurately vs. Haiku’s ~75% [11].

- Claude 3.5 Haiku: Faster but less accurate for deep document analysis.
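Before sending a whole contract in one request, it helps to check that it fits the 200K-token window that both models share. The sketch below makes a rough estimate under stated assumptions:
- It assumes roughly 4 characters per token, a common rule of thumb for English prose and not an Anthropic figure.
- It reserves room for the reply.
- `CHARS_PER_TOKEN`, `estimate_tokens`, and `fits_in_context` are hypothetical names.

For exact counts, rely on Anthropic's token-counting endpoint rather than this heuristic.

```python
# Rough check of whether a document fits in a 200K-token context window.
# ASSUMPTION: ~4 characters per token is a rule of thumb for English text;
# real counts depend on the tokenizer, so treat the result as an estimate.
CONTEXT_WINDOW_TOKENS = 200_000
CHARS_PER_TOKEN = 4  # hypothetical heuristic, not an official figure


def estimate_tokens(text: str) -> int:
    """Estimate the token count from character length (ceiling division)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(text: str, reserved_output_tokens: int = 4_096) -> bool:
    """True if the estimated prompt plus room for the reply fits the window."""
    return estimate_tokens(text) + reserved_output_tokens <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    # Stand-in document built by repetition; replace with real contract text.
    contract = "Clause 1. The parties agree... " * 10_000
    print(estimate_tokens(contract), fits_in_context(contract))
```

If the estimate lands close to the limit, split the document or measure it precisely before relying on a single request.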

3. Customer Support & Chatbots

- Claude 3.5 Haiku:

  - 0.36s TTFT (vs. Sonnet’s 0.64s) for near-instant responses [7].

  - 3.75x cheaper than Sonnet on both input and output tokens, ideal for high-volume queries [9].

💰 Pricing & Speed Comparison

| Metric | Claude 3.5 Sonnet | Claude 3.5 Haiku |
|---|---|---|
| Input Cost (per M tokens) | $3 | $0.80 |
| Output Cost (per M tokens) | $15 | $4 |
| Time to First Token (TTFT) | 0.64s | 0.36s |
| Throughput (tokens/sec) | ~50 | ~120 |

✅ Haiku delivers roughly 2.4x the throughput and a ~1.8x faster first token at 3.75x lower cost, which makes it ideal for scalable AI applications [7][9].
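The pricing and speed figures combine into a per-request estimate:
- Cost is each token count divided by one million, times its price.
- A rough response time is TTFT plus output tokens divided by throughput.

This sketch takes its numbers from the pricing and speed table. The `ModelProfile` type and the 1,000-input/300-output workload are hypothetical. The latency model ignores network overhead and the effect of prompt length on TTFT.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelProfile:
    name: str
    input_usd_per_mtok: float   # USD per million input tokens
    output_usd_per_mtok: float  # USD per million output tokens
    ttft_s: float               # time to first token, seconds
    tokens_per_s: float         # approximate output throughput


# Figures taken from the pricing & speed table; verify current values before use.
SONNET = ModelProfile("Claude 3.5 Sonnet", 3.00, 15.00, 0.64, 50)
HAIKU = ModelProfile("Claude 3.5 Haiku", 0.80, 4.00, 0.36, 120)


def request_cost_usd(m: ModelProfile, input_tokens: int, output_tokens: int) -> float:
    """Cost of one request: tokens / 1M * price, summed over input and output."""
    return (input_tokens * m.input_usd_per_mtok
            + output_tokens * m.output_usd_per_mtok) / 1_000_000


def response_time_s(m: ModelProfile, output_tokens: int) -> float:
    """Rough end-to-end latency: TTFT plus time to stream the output tokens."""
    return m.ttft_s + output_tokens / m.tokens_per_s


if __name__ == "__main__":
    # Hypothetical support-chat workload: 1,000 input and 300 output tokens per reply.
    for m in (SONNET, HAIKU):
        cost = request_cost_usd(m, 1_000, 300)
        print(f"{m.name}: ${cost:.4f}/request, "
              f"${cost * 1_000_000:,.0f} per 1M requests, "
              f"~{response_time_s(m, 300):.2f}s per reply")
```

Under these assumptions, a 300-token reply costs about $0.0075 on Sonnet versus $0.002 on Haiku. It arrives in roughly 6.6s versus 2.9s, so the 3.75x price gap and the throughput gap compound at high volume.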

🏆 Final Verdict: Who Should Choose What?

Pick Claude 3.5 Sonnet If You Need:

✔ Elite coding & debugging (93.7% HumanEval).

✔ Advanced math & reasoning (78.3% MATH).

✔ Long-context analysis (legal, research docs).

Pick Claude 3.5 Haiku If You Need:

✔ Real-time responses (0.36s TTFT).

✔ Cost efficiency ($0.80/M input tokens).

✔ High-volume tasks (customer support, chatbots).

For most technical users, Claude 3.5 Sonnet is the smarter choice, while Haiku excels in speed-sensitive applications [5][9].
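Applications that call both models could turn this verdict into a simple routing rule. This is a hypothetical heuristic built from the recommendations above, not an Anthropic feature. The `Task` fields and `choose_model` are illustrative names. The model IDs are the October 2024 snapshot identifiers, which should be confirmed against Anthropic's current model list.

```python
from dataclasses import dataclass

# October 2024 snapshot IDs; confirm against Anthropic's current model list.
SONNET_ID = "claude-3-5-sonnet-20241022"
HAIKU_ID = "claude-3-5-haiku-20241022"


@dataclass
class Task:
    """Hypothetical task description used only for this sketch."""
    needs_deep_reasoning: bool = False  # complex coding, math, research
    long_document: bool = False         # e.g. full contracts or research papers
    source_lines: int = 0               # size of the code file involved, if any


def choose_model(task: Task) -> str:
    """Pick a model ID following the verdict above (a heuristic, not a rule)."""
    # Precision-heavy or deep document work goes to Sonnet.
    if task.needs_deep_reasoning or task.long_document:
        return SONNET_ID
    # Haiku is reported to struggle with files longer than ~150 lines.
    if task.source_lines > 150:
        return SONNET_ID
    # Everything else (chat, support, quick suggestions) defaults to Haiku.
    return HAIKU_ID
```

Defaulting to Haiku keeps costs down, so only tasks that need Sonnet's precision pay its higher price. The returned ID would be passed as the `model` argument of a Messages API request.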

❓ FAQ

1. Can Claude 3.5 Haiku process images?

❌ Not at launch: Claude 3.5 Haiku was released as a text-only model, while Claude 3.5 Sonnet accepts image input (hence its MMMU score above) [8].

2. Which model is better for startups?

💰 Haiku: its 3.75x cheaper inputs make it ideal for bootstrapped teams [9].

3. Does Sonnet support extended thinking?

❌ No. Extended thinking was introduced with Claude 3.7 Sonnet in February 2025 and is not available for Claude 3.5 Sonnet.

🔗 Explore More LLM Comparisons

- Claude 3.5 Sonnet vs. GPT-4o: The Ultimate Showdown

- DeepSeek-V3 vs. LLaMA 4 Maverick: Open-Weight Titans Clash

Final Thought: The “better” model depends on your needs. For depth & precision, choose Sonnet. For speed & affordability, Haiku wins. 🚀

Sources:

- [1] Anthropic – Claude 3.5 Haiku

- [4] Anthropic – Claude 3.5 Sonnet

- [5] Keywords AI – Claude 3.5 Haiku vs. Sonnet

- [7] PromptHackers – Pricing & Benchmarks

Note: All benchmarks reflect July 2025 data.